Hi,

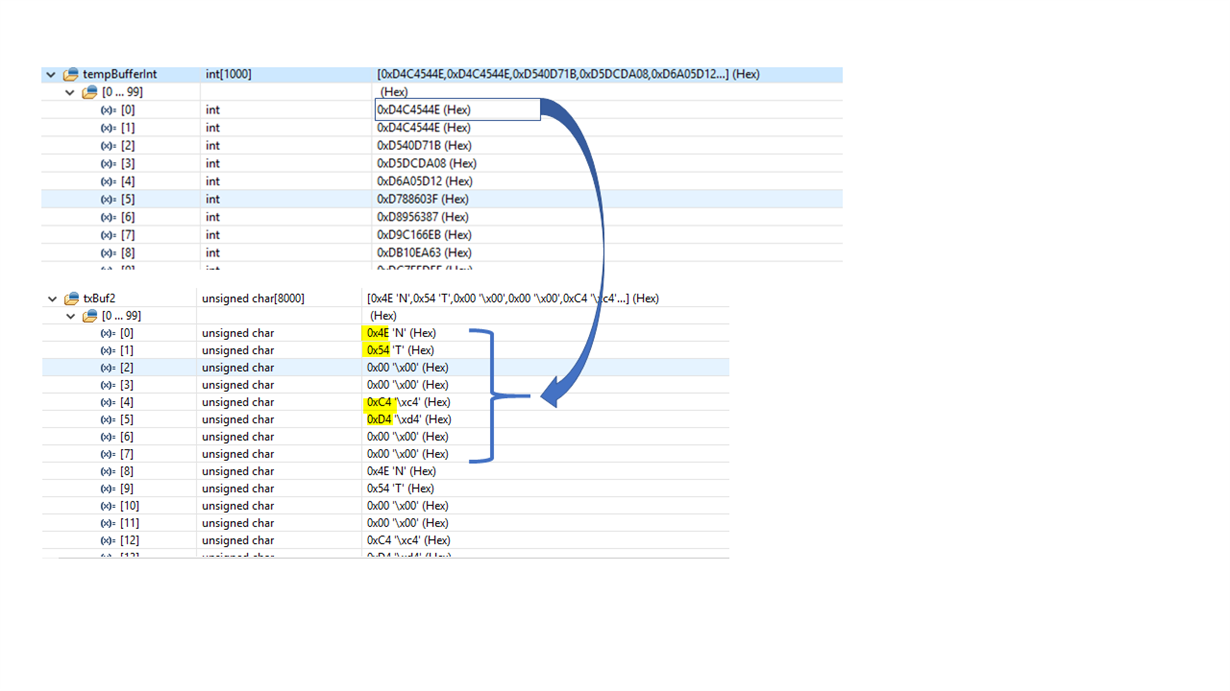

I'm trying to send a sine wave to the codec using the mcaspPlayBk.c demo code. The generated sine wave looks like this in Code Composer:

It's encoded as 32-bit signed integers and interleaved into the transmit buffer with the LSB first.

Then I copy the data into the transmit buffer and send it to the McASP controller:

```c
// Reassemble into a 2-channel buffer
interleave2(tempBufferInt, AUDIO_BUF_SIZE/8, (void *)txBufPtr[lastSentTxBuf]);

/*
memcpy((void *)txBufPtr[lastSentTxBuf],
       tempBuffer,
       AUDIO_BUF_SIZE);
*/

/*
** Send the buffer by setting the DMA params accordingly.
** Here the buffer to send and number of samples are passed as
** parameters. This is important, if only transmit section
** is to be used.
*/
BufferTxDMAActivate(lastSentTxBuf, NUM_SAMPLES_PER_AUDIO_BUF,
                    (unsigned short)parToSend,
                    (unsigned short)parToLink);
```
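The `interleave2` routine isn't shown here, so the following is only a hypothetical sketch of a two-channel interleave that stores each 32-bit signed sample LSB first; the names `put_s32_le` and `interleave_stereo_s32_le` are made up for illustration and are not part of the demo. Each stereo frame then takes 8 bytes, so a buffer of `AUDIO_BUF_SIZE` bytes holds `AUDIO_BUF_SIZE/8` frames, which matches the count passed to `interleave2` only if `AUDIO_BUF_SIZE` is a byte count (an assumption).

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: write one 32-bit signed sample into dst as four
 * bytes, least significant byte first (little-endian byte order). */
static void put_s32_le(uint8_t *dst, int32_t sample)
{
    uint32_t u = (uint32_t)sample; /* well-defined bit pattern for negatives */
    dst[0] = (uint8_t)(u & 0xFFu);
    dst[1] = (uint8_t)((u >> 8) & 0xFFu);
    dst[2] = (uint8_t)((u >> 16) & 0xFFu);
    dst[3] = (uint8_t)((u >> 24) & 0xFFu);
}

/* Hypothetical 2-channel interleave: frame i is laid out as
 * [left[i], 4 bytes LSB first][right[i], 4 bytes LSB first],
 * so numFrames frames occupy numFrames * 8 bytes of dst. */
static void interleave_stereo_s32_le(const int32_t *left, const int32_t *right,
                                     size_t numFrames, uint8_t *dst)
{
    for (size_t i = 0; i < numFrames; i++) {
        put_s32_le(dst + 8 * i, left[i]);
        put_s32_le(dst + 8 * i + 4, right[i]);
    }
}
```

On a little-endian CPU, storing each `int32_t` directly into a 32-bit word already produces LSB-first byte order; the byte-wise version just makes the order explicit and independent of the host. If both channels carry the same sine wave, the same array can be passed as `left` and `right`.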

The problem is that the data in the transmit buffer txBuf is scrambled.

I think the samples from the sine wave aren't being sent to the codec correctly.
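One way to check whether txBuf really differs from the source samples is to decode the buffer back and compare it frame by frame. This is a hypothetical debugging sketch that assumes the layout described above (two channels, 32-bit signed samples, LSB first); `get_s32_le` and `first_mismatch_stereo_s32_le` are illustrative names, not part of the demo.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: read a 32-bit signed sample stored LSB first.
 * The final conversion avoids implementation-defined behavior for
 * values above INT32_MAX. */
static int32_t get_s32_le(const uint8_t *src)
{
    uint32_t u = (uint32_t)src[0]
               | ((uint32_t)src[1] << 8)
               | ((uint32_t)src[2] << 16)
               | ((uint32_t)src[3] << 24);
    if (u <= (uint32_t)INT32_MAX)
        return (int32_t)u;
    return (int32_t)(u - 0x80000000u) + INT32_MIN;
}

/* Compare an interleaved stereo buffer (8 bytes per frame) against the
 * source channels. Returns the index of the first mismatching frame,
 * or numFrames if every frame matches. */
static size_t first_mismatch_stereo_s32_le(const uint8_t *buf,
                                           const int32_t *left,
                                           const int32_t *right,
                                           size_t numFrames)
{
    for (size_t i = 0; i < numFrames; i++) {
        if (get_s32_le(buf + 8 * i) != left[i] ||
            get_s32_le(buf + 8 * i + 4) != right[i])
            return i;
    }
    return numFrames;
}
```

Running this on txBufPtr[lastSentTxBuf] right after interleave2, before the DMA is activated, would show whether the data is already wrong at that point or only changes afterwards.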

Thank you,

Scott