Tool/software: Linux

Dear Experts,

We are seeing packet loss when transferring data via the Ethernet port on the AM3352.

The basic block diagram is shown below:

Description:

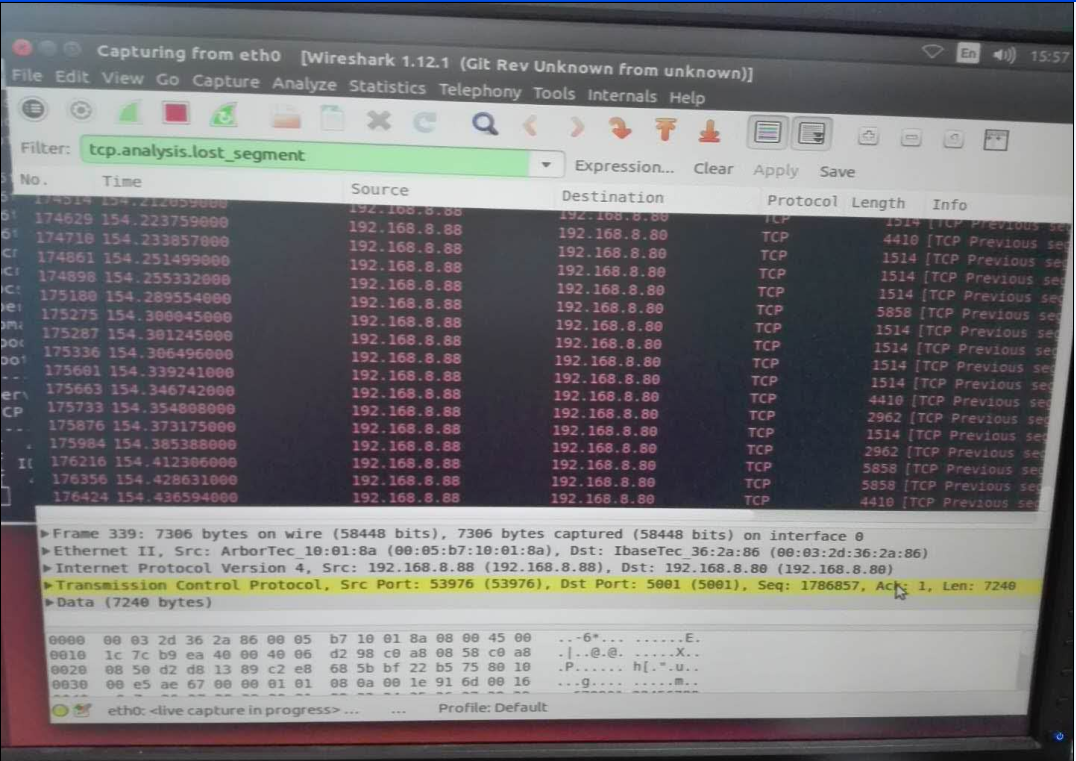

PC1 works as the client and PC2 works as the server, and the AM3352 is configured in bridge mode. We run "iperf -s" on the server and "iperf -c" on the client to transfer data and use Wireshark to monitor the packets. Significant packet loss is observed during the transfer.
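In case it helps to put a number on the loss, here is a minimal sketch of how it could be quantified from a capture. It assumes each test datagram carries an incrementing sequence number starting at 0 (as in a UDP-style test) and that the sequence numbers seen on the receiving PC have been exported from Wireshark into a list. The function name `loss_summary` and its arguments are hypothetical and not part of iperf or Wireshark.

```python
def loss_summary(sent_count, received_seqs):
    """Summarise loss for one test run.

    sent_count    -- number of datagrams the client sent (assumed known)
    received_seqs -- sequence numbers in arrival order on the server side
                     (assumed to be in the range 0 .. sent_count - 1)
    """
    seen = set()
    duplicates = 0
    reordered = 0
    highest = -1

    for seq in received_seqs:
        if seq in seen:
            # Same sequence number arrived more than once.
            duplicates += 1
            continue
        seen.add(seq)
        if seq < highest:
            # Arrived after a later packet, so it was reordered, not lost.
            reordered += 1
        else:
            highest = seq

    received = len(seen)
    lost = sent_count - received
    loss_pct = 100.0 * lost / sent_count if sent_count else 0.0

    return {
        "received": received,
        "lost": lost,
        "duplicates": duplicates,
        "reordered": reordered,
        "loss_pct": loss_pct,
    }


if __name__ == "__main__":
    # Made-up example values, not measurements from the AM3352 setup.
    print(loss_summary(10, [0, 1, 2, 4, 3, 3, 7, 9]))
```

Separating reordered and duplicated packets from truly missing ones can help tell whether the bridge is dropping frames or only delivering them late.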

Test conditions:

1. ti-processor-sdk-linux-am335x-evm-03.02.00.05

2. AM3352 is configured in bridge mode

3. CPSW kernel configuration

4. am335x-evm.dts

5. am33xx.dtsi configuration:

Could you give me some guidance on how to eliminate the packet loss? Thanks a lot.