Tool/software: Linux

Hi all,

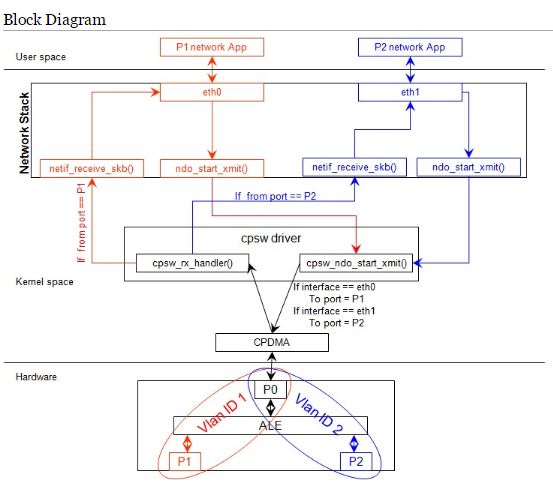

We are currently using the AM5728 for network distribution, with two Ethernet interfaces, eth0 and eth1.

But we have encountered an issue.

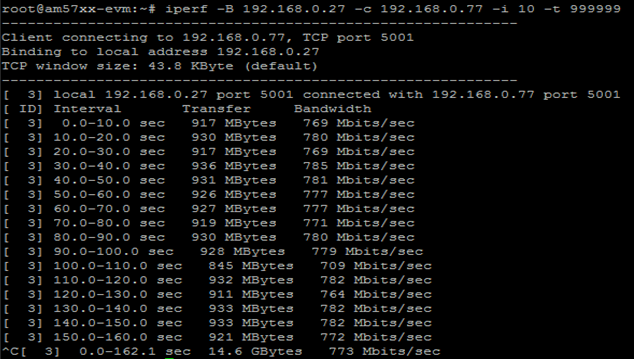

On its own, eth0 can send or receive network packets at 300 Mb/s, and the Linux system works well.

On its own, eth1 can also send or receive network packets at 300 Mb/s, and the Linux system works well.

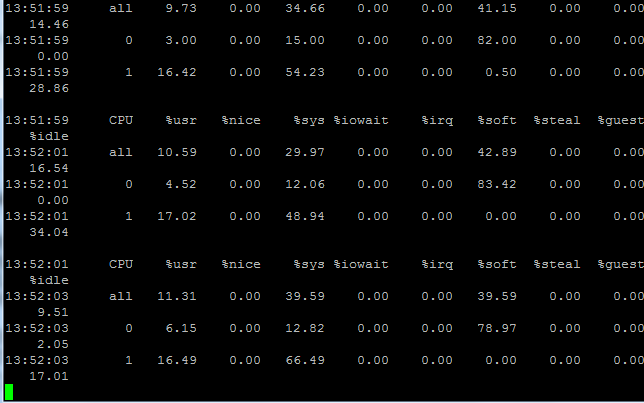

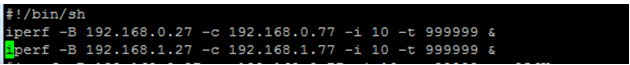

However, when we use eth0 and eth1 together, with both interfaces sending or receiving at 200 Mb/s, eth1 crashes and drops packets, and the Linux system prints a watchdog timeout error like the one below:

root@am57xx-evm:~# [ 22.078811] ------------[ cut here ]------------

[ 22.083590] WARNING: CPU: 0 PID: 3 at net/sched/sch_generic.c:316 dev_watchdog+0x258/0x25c

[ 22.091967] NETDEV WATCHDOG: eth1 (cpsw): transmit queue 0 timed out

[ 22.098347] Modules linked in: bc_example(O) sha512_generic sha512_arm sha256_generic sha1_generic sha1_arm_neon sha1_arm md5 xfrm_user xfrm4_tunnel cbc ipcomp xfrm_ipcomp esp4 ah4 af_key xfrm_algo bluetooth rpmsg_proto xhci_plat_hcd xhci_hcd pru_rproc usbcore pruss_intc pruss rpmsg_rpc dwc3 udc_core usb_common snd_soc_simple_card snd_soc_simple_card_utils snd_soc_omap_hdmi_audio ahci_platform libahci_platform libahci pvrsrvkm(O) libata omap_aes_driver omap_sham pruss_soc_bus omap_wdt scsi_mod ti_vpe ti_sc ti_csc ti_vpdma rtc_omap dwc3_omap rtc_palmas extcon_palmas extcon_core rtc_ds1307 omap_des snd_soc_tlv320aic3x des_generic crypto_engine omap_remoteproc virtio_rpmsg_bus rpmsg_core remoteproc sch_fq_codel uio_module_drv(O) uio gdbserverproxy(O) cryptodev(O) cmemk(O)

[ 22.167354] CPU: 0 PID: 3 Comm: ksoftirqd/0 Tainted: G O 4.9.59-ga75d8e9305 #1

[ 22.175739] Hardware name: Generic DRA74X (Flattened Device Tree)

[ 22.181856] Backtrace:

[ 22.184331] [<c020b29c>] (dump_backtrace) from [<c020b558>] (show_stack+0x18/0x1c)

[ 22.191933] r7:00000009 r6:600f0013 r5:00000000 r4:c1022668

[ 22.197619] [<c020b540>] (show_stack) from [<c04cd680>] (dump_stack+0x8c/0xa0)

[ 22.204875] [<c04cd5f4>] (dump_stack) from [<c022e3d4>] (__warn+0xec/0x104)

[ 22.211865] r7:00000009 r6:c0c0fddc r5:00000000 r4:ee8a5dc8

[ 22.217548] [<c022e2e8>] (__warn) from [<c022e42c>] (warn_slowpath_fmt+0x40/0x48)

[ 22.225063] r9:ffffffff r8:c1002d00 r7:ee05e294 r6:ee05e800 r5:ee05e000 r4:c0c0fda0

[ 22.232843] [<c022e3f0>] (warn_slowpath_fmt) from [<c07db6f0>] (dev_watchdog+0x258/0x25c)

[ 22.241052] r3:ee05e000 r2:c0c0fda0

[ 22.244639] r4:00000000

[ 22.247185] [<c07db498>] (dev_watchdog) from [<c02919d8>] (call_timer_fn.constprop.2+0x30/0xa0)

[ 22.255920] r10:40000001 r9:ee05e000 r8:c07db498 r7:00000000 r6:c07db498 r5:00000100

[ 22.263779] r4:ffffe000

[ 22.266323] [<c02919a8>] (call_timer_fn.constprop.2) from [<c0291ae8>] (expire_timers+0xa0/0xac)

[ 22.275143] r6:00000200 r5:ee8a5e78 r4:eed38480

[ 22.279779] [<c0291a48>] (expire_timers) from [<c0291b94>] (run_timer_softirq+0xa0/0x18c)

[ 22.287992] r9:00000001 r8:c1002080 r7:eed38480 r6:c1002d00 r5:ee8a5e74 r4:00000001

[ 22.295769] [<c0291af4>] (run_timer_softirq) from [<c0232fbc>] (__do_softirq+0xf8/0x234)

[ 22.303892] r7:00000100 r6:ee8a4000 r5:c1002084 r4:00000022

[ 22.309576] [<c0232ec4>] (__do_softirq) from [<c0233138>] (run_ksoftirqd+0x40/0x4c)

[ 22.317265] r10:00000000 r9:00000000 r8:ffffe000 r7:c1013ff0 r6:00000001 r5:ee861540

[ 22.325123] r4:ee8a4000

[ 22.327669] [<c02330f8>] (run_ksoftirqd) from [<c024ec74>] (smpboot_thread_fn+0x154/0x268)

[ 22.335970] [<c024eb20>] (smpboot_thread_fn) from [<c024adb0>] (kthread+0x100/0x118)

[ 22.343746] r10:00000000 r9:00000000 r8:c024eb20 r7:ee861540 r6:ee8a4000 r5:ee861580

[ 22.351606] r4:00000000 r3:ee898c80

[ 22.355197] [<c024acb0>] (kthread) from [<c0207c88>] (ret_from_fork+0x14/0x2c)

[ 22.362448] r8:00000000 r7:00000000 r6:00000000 r5:c024acb0 r4:ee861580

[ 22.369202] ---[ end trace 175f3c4f1129894a ]---

Have you encountered this problem?

My hardware is a custom board; the AM5728 chip version is ES2.0.

My software is the Processor SDK 4.2 release.
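
For anyone trying to reproduce this, the per-interface throughput and drop counters can be watched while both ports are loaded. Below is a minimal sketch, assuming Python 3 is available on the target and that the interfaces are named eth0 and eth1 as above. It reads the standard `/proc/net/dev` counters; the helper names `parse_net_dev` and `rates` are made up for illustration and are not part of any SDK.

```python
import time

# Counters of interest and their positions among the 16 numeric
# columns that follow "iface:" in /proc/net/dev.
FIELDS = ("rx_bytes", "rx_packets", "rx_errs", "rx_drop",
          "tx_bytes", "tx_packets", "tx_errs", "tx_drop")
INDEXES = (0, 1, 2, 3, 8, 9, 10, 11)


def parse_net_dev(text):
    """Parse /proc/net/dev-style text into {iface: {counter: value}}."""
    stats = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # the two header lines have no "iface:" prefix
        name, data = line.split(":", 1)
        cols = data.split()
        if len(cols) < 16:
            continue  # unexpected layout, skip rather than guess
        stats[name.strip()] = {f: int(cols[i]) for f, i in zip(FIELDS, INDEXES)}
    return stats


def rates(before, after, seconds):
    """Return per-interface Mbit/s and new drops between two snapshots."""
    result = {}
    for iface, new in after.items():
        old = before.get(iface)
        if old is None:
            continue  # interface appeared between samples
        result[iface] = {
            "rx_mbps": (new["rx_bytes"] - old["rx_bytes"]) * 8 / (seconds * 1e6),
            "tx_mbps": (new["tx_bytes"] - old["tx_bytes"]) * 8 / (seconds * 1e6),
            "rx_drop": new["rx_drop"] - old["rx_drop"],
            "tx_drop": new["tx_drop"] - old["tx_drop"],
        }
    return result


def read_snapshot(path="/proc/net/dev"):
    with open(path) as f:
        return parse_net_dev(f.read())


if __name__ == "__main__":
    interval = 1.0  # seconds between samples (arbitrary choice)
    prev = read_snapshot()
    while True:
        time.sleep(interval)
        cur = read_snapshot()
        for iface, r in rates(prev, cur, interval).items():
            if iface in ("eth0", "eth1"):  # interface names assumed from this post
                print(iface, r)
        prev = cur
```

Running this on the board during the two-port test would show whether the eth1 drop counters start rising before the watchdog warning appears.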