Hello,

Could you please give a more detailed explanation of the fields of the IPU UNICACHE CACHE_OCP register and of the AMMU page POLICY registers?

More specifically, for each of the fields below: what is it, how does it affect behavior, and when should it be enabled?

-) EXCLUSION

-) PRELOAD (when does the preload happen if it is enabled?)

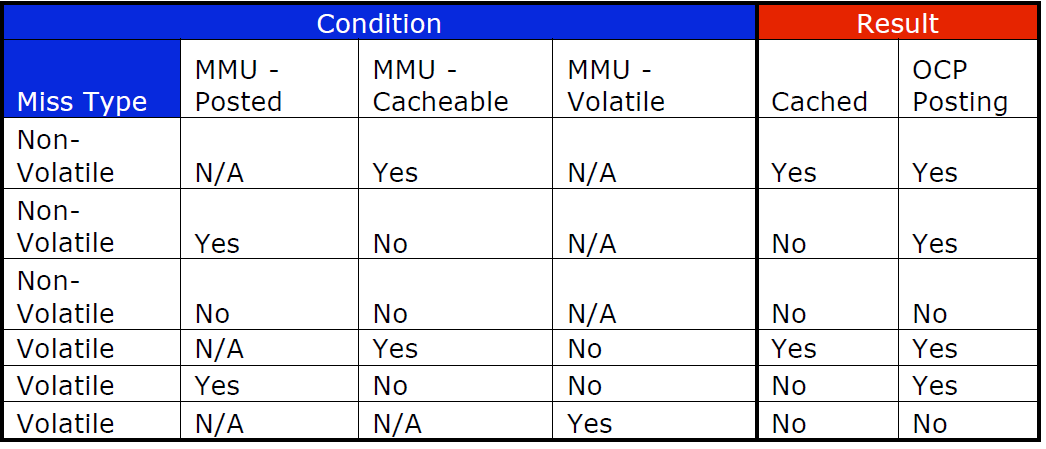

-) VOLATILE

-) L1_ALLOCATE (what are the sidebands?)

-) POSTED / NON-POSTED (when is it advisable to use posted, i.e. no confirmation, instead of non-posted?)

If possible, a small usage example for each field, illustrating the usage and the reasoning behind it, would be appreciated.

Thanks