I am beginning the long journey of optimizing my audio compression algorithm for real-time operation. Currently it does NOT appear to run fast enough for my application. I am seeking the guidance of the optimization gurus on this forum.

========================================

Test configuration:

Logic PD Experimenter with OMAP-L138 SOM

CCS4 4.1.3.00034, DSP/BIOS 5.41.02.14

========================================

I successfully implemented (with a *lot* of help from the gurus of EDMA and McASP) a ping-pong buffered EDMA3 transfer of 48000 samples/sec 16-bit stereo audio in I2S format to/from the McASP, which is then sent to/from the on-board AIC3106 audio codec. As a temporary algorithm test for the C6748, I am merely copying the input buffer to the output buffer. This works well in real time, and I have accurately benchmarked the DMA transfer time of the audio samples, which arrive at a rate of 96000 samples/second (48000 per channel x 2 channels). I transfer 512 total samples per DMA transfer, so the time my algorithm can consume is 5.3 ms (512/96000). I have benchmarked this time using the DSP/BIOS STS functions and gethtime() and verified that the number of CPU cycles between DMA transfers is ~1,600,000, which works out to 5.3 ms / (1/300 MHz) with a 300 MHz CPU clock.
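For reference, the budget arithmetic above can be written as a small plain-C helper. This is only a sketch of the calculation, not part of my actual project; the function name `cycle_budget` is made up for illustration and is not a DSP/BIOS API.

```c
#include <stdint.h>

/* Hypothetical helper: CPU cycles available between two buffer completions.
 * block period [s] = samples_per_block / samples_per_sec
 * budget [cycles]  = block period * cpu_hz
 * The multiply is done in 64 bits before the divide to avoid overflow
 * and intermediate rounding. */
static uint64_t cycle_budget(uint32_t samples_per_block,
                             uint32_t samples_per_sec,
                             uint32_t cpu_hz)
{
    return ((uint64_t)samples_per_block * cpu_hz) / samples_per_sec;
}
```

With the numbers from this post (512 samples, 96000 samples/second, 300 MHz) it returns 1,600,000 cycles, matching the measured value.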

Unfortunately, when I remove the test algorithm and insert the actual audio compression algorithm, all hell breaks loose. CCS4 consistently crashes with differing error messages (some are posted HERE), and sometimes hangs, requiring a Task Manager kill.

I have determined that I can compile in either Debug or Release, with full symbolic debugging ON (-g) and optimization off (blank), and the code will run, but the audio is very distorted and CCS4 typically crashes sooner or later. I have inserted gethtime() calls before and after the encoder and decoder. When the code is actually running, the encode time is typically around 960,000 cycles and the decode time around 750,000 cycles, which unfortunately adds up to just over 100% CPU utilization.
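To put a number on "just over 100%", here is a minimal sketch of the load calculation in plain C. The function names are hypothetical, and the 1,600,000-cycle budget is the figure measured between DMA transfers earlier in this post.

```c
#include <stdint.h>

/* Hypothetical helper: CPU load as a percentage of the per-block budget. */
static double cpu_load_percent(uint32_t encode_cycles,
                               uint32_t decode_cycles,
                               uint32_t budget_cycles)
{
    return 100.0 * ((double)encode_cycles + (double)decode_cycles)
                 / (double)budget_cycles;
}

/* Hypothetical helper: cycles left over per block.
 * A negative result means the block overran its real-time deadline. */
static int64_t cycle_slack(uint32_t encode_cycles,
                           uint32_t decode_cycles,
                           uint32_t budget_cycles)
{
    return (int64_t)budget_cycles
         - ((int64_t)encode_cycles + (int64_t)decode_cycles);
}
```

With 960,000 encode cycles and 750,000 decode cycles against the 1,600,000-cycle budget, the load works out to roughly 107% and the slack to -110,000 cycles, so each block misses its deadline, which would be consistent with the distorted audio.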

However, if I try some rudimentary optimization by EITHER turning on optimization (any value other than blank), OR turning off symbolic debugging (no -g), the system either crashes, hangs, or stops giving statistics. The same thing happens if I comment out the decode portion of the algorithm in an attempt to reduce the processing time of the algorithm.

I am currently stumped and frustrated with the constant crashing, illogical outcome of my optimization attempts, and lack of DSP/BIOS statistics when the code actually runs.

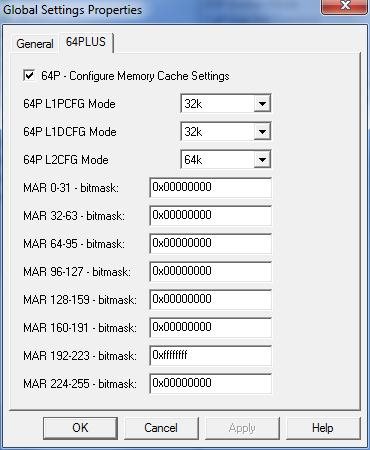

I have read a great deal about code optimization techniques, including the TI Wiki and this very nice presentation (http://processors.wiki.ti.com/images/6/6e/C64p_cgt_optimization.pdf), but cannot seem to get past the CCS4 crashes, hangs, and lack of statistics.

Can someone give me some pointers on things that may be more obvious to more experienced programmers?

Thx

MikeH