Tool/software: Code Composer Studio

1. Test setup:

(1) PCIe Gen1: speed 2.5 Gb/s, lane width x2.

A15 @ 1 GHz: runs Linux.

DSP @ 500 MHz: runs TI-RTOS, configures the PCIe driver, and acts as RC.

The FPGA acts as EP.

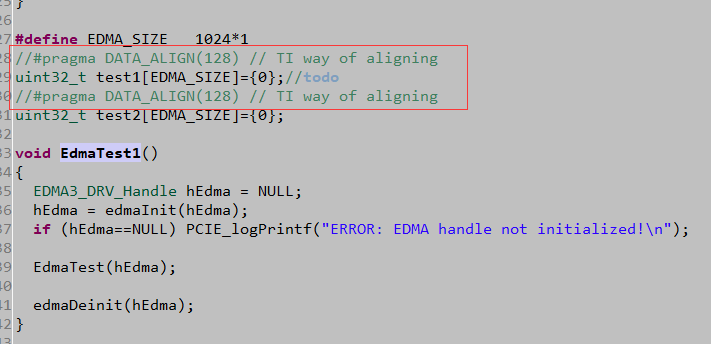

The program is the sample from C:\ti\pdk_am57xx_1_0_4\packages\ti\drv\pcie\example\sample, with no changes to the driver or the BAR configuration.

2. DSP back-to-back writes to the BAR window:

The test looks like this:

*(int *)0x21000100 = 0x1234;

*(int *)0x21000104 = 0x4567;

TLP timing:

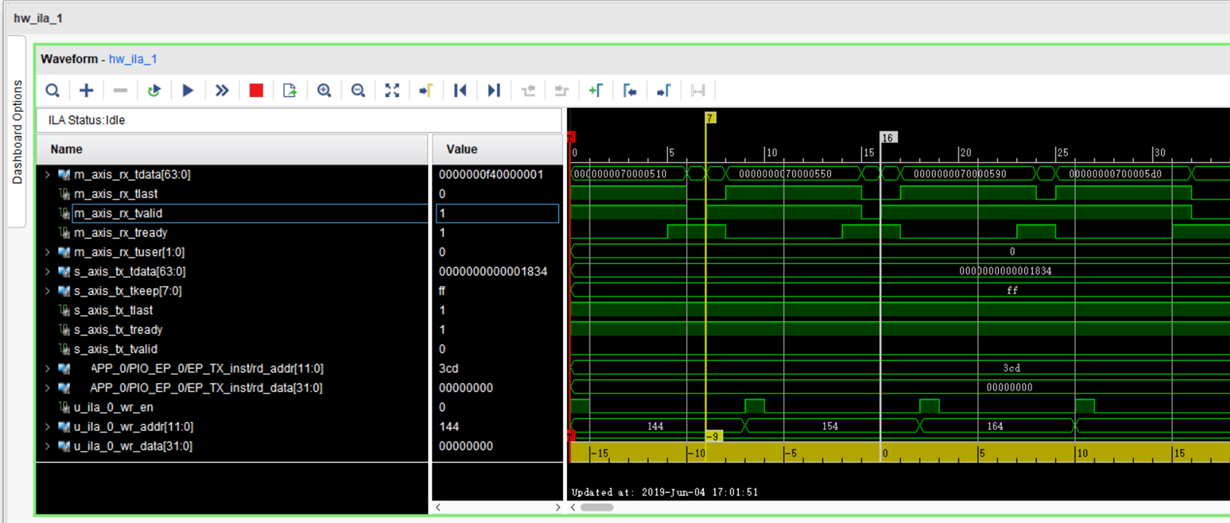

The M_axis_rx_xxx signals carry the TLPs sent by the DSP, and the S_axis_tx_xxx signals carry the TLPs the FPGA sends in reply. From the capture above, the 2 write TLPs take 9 clocks (9 * 8 ns = 72 ns).

3. DSP back-to-back reads from the BAR window:

The test looks like this:

int A = *(int *)0x21000100;

int B = *(int *)0x21000104;

TLP timing:

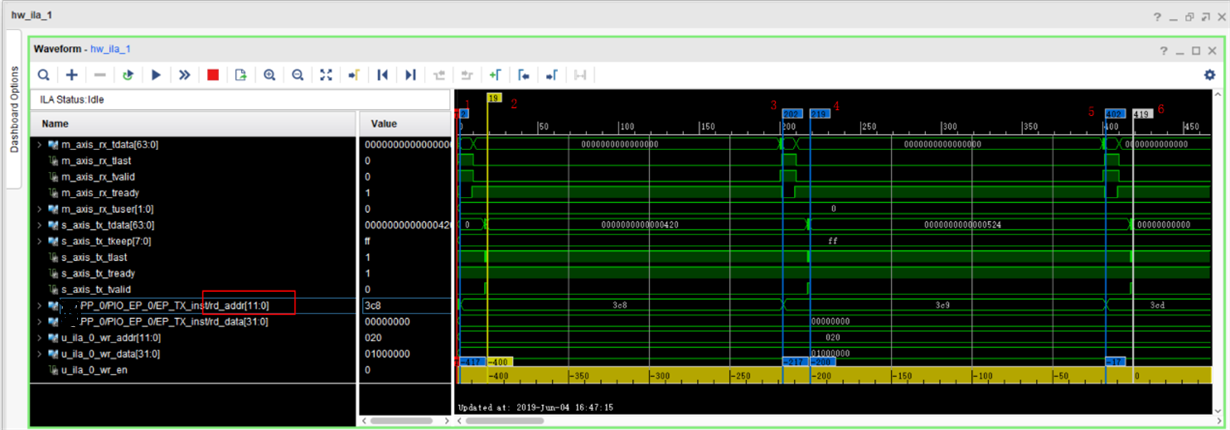

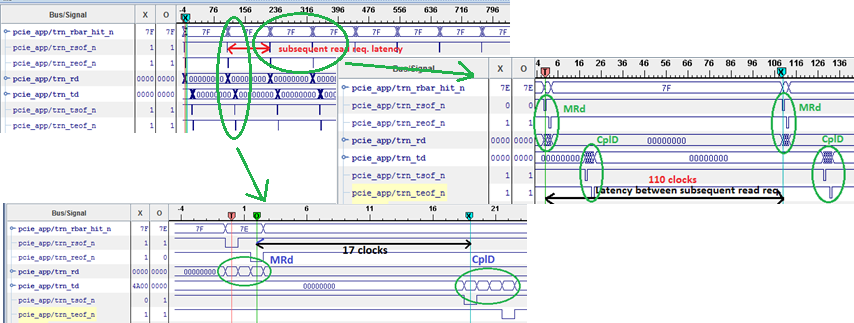

The M_axis_rx_xxx signals carry the TLPs sent by the DSP, and the S_axis_tx_xxx signals carry the TLPs the FPGA sends in reply. Red numbers 1, 3, and 5 mark the timestamps of the read TLPs from 3 back-to-back DSP reads of the BAR window; red numbers 2, 4, and 6 mark the timestamps of the FPGA's reply TLPs.

From the above, for the 1st DSP read the FPGA replies within 19 - 2 = 17 clocks (17 * 8 ns = 136 ns), but the time between 2 consecutive DSP read TLPs (red numbers 1 and 3) is 202 - 2 = 200 clocks, which is 1.6 us.
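To make the clock arithmetic above easy to recheck, here is a minimal sketch in C. It assumes the 8 ns clock period already used in the calculations in this post; the helper name capture_delta_ns is made up for illustration and is not part of any TI or FPGA vendor API.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: one capture clock is 8 ns, as in the post's own arithmetic
   (9 * 8 ns = 72 ns). */
#define FPGA_CLK_PERIOD_NS 8u

/* Hypothetical helper: time in ns between two capture timestamps,
   both given in clock counts. */
static uint32_t capture_delta_ns(uint32_t start_clk, uint32_t end_clk)
{
    assert(end_clk >= start_clk);  /* timestamps must be in order */
    return (end_clk - start_clk) * FPGA_CLK_PERIOD_NS;
}
```

With the timestamps read off the capture, capture_delta_ns(2, 19) gives the 136 ns reply latency and capture_delta_ns(2, 202) gives the 1600 ns (1.6 us) gap between two read TLPs.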

I also ran this test:

T1=TSCL;

int A=*(int *)0x21000100;

T2=TSCL;

The time used, T2 - T1 = 1.6 us, is the same as above.
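For anyone who wants to reproduce the TSCL measurement, the following is a rough sketch rather than the exact code I ran. It assumes the TI C6000 compiler (which defines _TMS320C6X and declares TSCL in c6x.h) and that the counter has been started once beforehand with a write such as TSCL = 0. The helper names and the off-target stand-in counter are hypothetical; the stand-in only advances by 800 cycles per call, which is what 1.6 us corresponds to at 500 MHz.

```c
#include <stdint.h>

#ifdef _TMS320C6X
#include <c6x.h>                 /* declares the TSCL control register */
#define READ_CYCLES() (TSCL)     /* TSCL must already be running */
#else
/* Off-target stand-in so the sketch compiles on a PC: a fake counter
   that advances 800 cycles per call (1.6 us at 500 MHz). */
static uint32_t fake_tsc;
#define READ_CYCLES() (fake_tsc += 800u)
#endif

#define DSP_CLK_MHZ 500u         /* from the test setup: DSP @ 500 MHz */

/* Convert DSP cycles to nanoseconds. */
static uint32_t cycles_to_ns(uint32_t cycles)
{
    return (uint32_t)(((uint64_t)cycles * 1000u) / DSP_CLK_MHZ);
}

/* Time a single 32-bit read. The volatile qualifier keeps the compiler
   from removing or reordering the access. */
static uint32_t time_read_ns(const volatile uint32_t *addr, uint32_t *value_out)
{
    uint32_t t1;
    uint32_t t2;

    t1 = READ_CYCLES();
    *value_out = *addr;          /* on target: the read through the BAR window */
    t2 = READ_CYCLES();
    return cycles_to_ns(t2 - t1);
}
```

On the DSP, the call would look like time_read_ns((const volatile uint32_t *)0x21000100, &A). The measured value includes the small overhead of reading TSCL itself.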

Thanks