Hello,

We are looking for advice on troubleshooting an intermittent stall seen in Linux on our AM3352-based board. We are testing with kernel v4.19 from PROCESSOR-SDK-LINUX-AM335 v06.01 (20-Oct-2019) so that we match TI's release, but we have also seen the stalls on kernel v4.14.

Sometimes the board runs for a day with no stall, and sometimes it hangs after only an hour or two of running.

When the stall occurs, the board is sending and receiving a large volume of serial and Ethernet data.

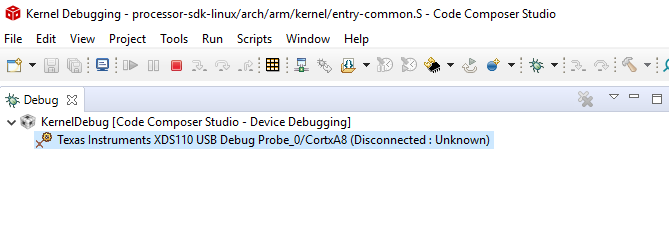

After the stall, all the normal I/O interfaces, including the serial ports, are unresponsive, so we read `__log_buf` over JTAG with an XDS110 to capture the stall information. JTAG appears to be our only debug path once the board has stalled.
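If the `__log_buf` contents are saved from the debugger as a raw binary memory dump, a small host-side program can turn them back into readable lines. The sketch below assumes the `struct printk_log` record layout used by v4.x kernels (including v4.14 and v4.19) as stored by a little-endian 32-bit ARM target: a 16-byte header (`ts_nsec`, `len`, `text_len`, `dict_len`, `facility`, `flags`/`level`) followed by the message text, the optional dictionary, and padding. The function `dump_printk_records` and the dump-file workflow are hypothetical; please verify the layout against `kernel/printk/printk.c` in your own tree before trusting the output.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* ASSUMPTION: header layout of struct printk_log in v4.x kernels, as stored
 * by a little-endian 32-bit ARM target:
 *   u64 ts_nsec; u16 len; u16 text_len; u16 dict_len; u8 facility;
 *   u8 flags:5, level:3;  -> 16 bytes, then text, dict, padding */
#define PRINTK_HDR_SIZE 16u

/* Read little-endian integers from the raw dump. */
static uint16_t rd16(const uint8_t *p)
{
    return (uint16_t)(p[0] | (p[1] << 8));
}

static uint64_t rd64(const uint8_t *p)
{
    uint64_t v = 0;
    for (int i = 7; i >= 0; i--)
        v = (v << 8) | p[i];
    return v;
}

/* Hypothetical helper: print each record in [start, end) of a raw __log_buf
 * dump as "[sec.usec] <level> text". Returns the number of records printed. */
size_t dump_printk_records(const uint8_t *buf, size_t size,
                           size_t start, size_t end, FILE *out)
{
    size_t off = start, count = 0;

    assert(buf != NULL && out != NULL);
    if (end > size)
        end = size;

    while (off + PRINTK_HDR_SIZE <= end) {
        const uint8_t *p = buf + off;
        uint64_t ts = rd64(p);
        uint16_t len = rd16(p + 8);
        uint16_t text_len = rd16(p + 10);
        uint16_t dict_len = rd16(p + 12);
        unsigned level = p[15] >> 5;  /* ASSUMPTION: level:3 in the top bits */

        if (len == 0)  /* zero length: kernel's wrap marker (or unused space) */
            break;
        if (len < PRINTK_HDR_SIZE || off + len > size ||
            PRINTK_HDR_SIZE + (size_t)text_len + dict_len > len)
            break;     /* corrupt or partially overwritten record */

        /* %.*s bounds the read, since record text is not NUL-terminated. */
        fprintf(out, "[%5llu.%06llu] <%u> %.*s\n",
                (unsigned long long)(ts / 1000000000ULL),
                (unsigned long long)(ts % 1000000000ULL / 1000ULL),
                level, (int)text_len, (const char *)(p + PRINTK_HDR_SIZE));
        off += len;    /* len already includes the alignment padding */
        count++;
    }
    return count;
}
```

If the buffer has not wrapped, one call with `start = 0` and `end` set to the kernel's `log_next_idx` covers everything. If it has wrapped, reading `log_first_idx` and `log_next_idx` over JTAG as well and calling once for `[log_first_idx, size)` and once for `[0, log_next_idx)` should print the records oldest-first. Relying on the `len` field to advance, rather than recomputing the padding, avoids having to know the target's record alignment.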

This log does not give me much to isolate where the failure occurs, so I am setting up program trace using the ETB (Embedded Trace Buffer). Meanwhile, I am sharing the log with the group in case any of you have insight from similar debug experiences.

Please send any thoughts, feedback, or advice. Thanks! Chris Norris

rcu: INFO: rcu_preempt self-detected stall on CPU

rcu: 0-...!: (1 ticks this GP) idle=8fe/1/0x40000004 softirq=69056/69056 fqs=0

rcu: (t=4682 jiffies g=181829 q=198)

rcu: rcu_preempt kthread starved for 4682 jiffies! g181829 f0x0 RCU_GP_WAIT_FQS(5) ->state=0x402 ->cpu=0

rcu: RCU grace-period kthread stack dump:

rcu_preempt I 0 10 2 0x00000000

Backtrace:

[<c0925f38>] (__schedule) from [<c0926700>] (schedule+0x58/0xc4)

r10:c0e144c4 r9:c0e14ac0 r8:c0e14aa0 r7:db87dedc r6:c0e03048 r5:c0e14ac0

r4:ffffe000

[<c09266a8>] (schedule) from [<c092a348>] (schedule_timeout+0x194/0x284)

r5:c0e14ac0 r4:00014e0f

[<c092a1b4>] (schedule_timeout) from [<c0180a64>] (rcu_gp_kthread+0x76c/0xc28)

r9:c0e143f4 r8:00000005 r7:ffffe000 r6:c0e14aa0 r5:00000001 r4:db87c000

[<c01802f8>] (rcu_gp_kthread) from [<c0148a60>] (kthread+0x158/0x160)

r7:db87c000

[<c0148908>] (kthread) from [<c01010e8>] (ret_from_fork+0x14/0x2c)

Exception stack(0xdb87dfb0 to 0xdb87dff8)

dfa0: 00000000 00000000 00000000 00000000

dfc0: 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000

dfe0: 00000000 00000000 00000000 00000000 00000013 00000000

r10:00000000 r9:00000000 r8:00000000 r7:00000000 r6:00000000 r5:c0148908

r4:db82ec00

Task dump for CPU 0:

myApp R running task 0 1242 683 0x00000002

Backtrace:

[<c010beb0>] (dump_backtrace) from [<c010c198>] (show_stack+0x18/0x1c)

r7:c0e143f4 r6:000002ab r5:c0e03048 r4:da8f2a00

[<c010c180>] (show_stack) from [<c014e824>] (sched_show_task.part.1+0xd4/0x104)

[<c014e750>] (sched_show_task.part.1) from [<c0153658>] (dump_cpu_task+0x38/0x3c)

r6:c0e03104 r5:00000000 r4:c0e143f4

[<c0153620>] (dump_cpu_task) from [<c0182254>] (rcu_dump_cpu_stacks+0x90/0xd0)

[<c01821c4>] (rcu_dump_cpu_stacks) from [<c0181abc>] (rcu_check_callbacks+0x608/0x8d0)

r10:c0e14aa0 r9:00000000 r8:c0e030f8 r7:c0e143f4 r6:c0e14814 r5:c0e14880

r4:c0e143f4 r3:16a3dd13

[<c01814b4>] (rcu_check_callbacks) from [<c01874f0>] (update_process_times+0x3c/0x64)

r10:200b0193 r9:c0197cfc r8:00000000 r7:00000117 r6:00000000 r5:da8f2a00

r4:ffffe000

[<c01874b4>] (update_process_times) from [<c0197ad4>] (tick_sched_handle+0x5c/0x60)

r7:00000117 r6:dd4d57c1 r5:da969eb0 r4:c0e16500

[<c0197a78>] (tick_sched_handle) from [<c0197d4c>] (tick_sched_timer+0x50/0xac)

[<c0197cfc>] (tick_sched_timer) from [<c0188158>] (__hrtimer_run_queues.constprop.4+0x18c/0x224)

r7:ffffe000 r6:c0e15c00 r5:c0e16500 r4:c0e15c40

[<c0187fcc>] (__hrtimer_run_queues.constprop.4) from [<c01888f8>] (hrtimer_interrupt+0xec/0x2fc)

r10:7fffffff r9:c0e15d30 r8:ffffffff r7:00000003 r6:200b0193 r5:ffffe000

r4:c0e15c00

[<c018880c>] (hrtimer_interrupt) from [<c0119f90>] (omap2_gp_timer_interrupt+0x30/0x38)

r10:c0e4c3fe r9:db807100 r8:00000010 r7:da969e08 r6:00000000 r5:00000010

r4:c0e07f80

[<c0119f60>] (omap2_gp_timer_interrupt) from [<c016f660>] (__handle_irq_event_percpu+0x6c/0x140)

[<c016f5f4>] (__handle_irq_event_percpu) from [<c016f768>] (handle_irq_event_percpu+0x34/0x84)

r10:00001cc4 r9:da968000 r8:db808000 r7:da969fb0 r6:db807100 r5:00000010

r4:c0e03048

[<c016f734>] (handle_irq_event_percpu) from [<c016f81c>] (handle_irq_event+0x64/0x90)

r6:00000000 r5:00000010 r4:db807100

[<c016f7b8>] (handle_irq_event) from [<c0172f50>] (handle_level_irq+0xc0/0x164)

r5:00000010 r4:db807100

[<c0172e90>] (handle_level_irq) from [<c016e7c8>] (generic_handle_irq+0x2c/0x3c)

r5:00000010 r4:c0e4abb0

[<c016e79c>] (generic_handle_irq) from [<c016ef88>] (__handle_domain_irq+0x5c/0xb0)

[<c016ef2c>] (__handle_domain_irq) from [<c0452dfc>] (omap_intc_handle_irq+0x3c/0x98)

r9:da968000 r8:db808000 r7:da969ee4 r6:ffffffff r5:200b0113 r4:c0e7f17c

[<c0452dc0>] (omap_intc_handle_irq) from [<c0101a0c>] (__irq_svc+0x6c/0xa8)

Exception stack(0xda969eb0 to 0xda969ef8)

9ea0: 00404040 c0a02a3c c0e4c1c0 00000000

9ec0: 00000040 00000014 00000000 00000000 db808000 00211c48 00001cc4 da969f5c

9ee0: da969f60 da969f00 c012e3dc c0102224 200b0113 ffffffff

r5:200b0113 r4:c0102224

[<c0102180>] (__do_softirq) from [<c012e3dc>] (irq_exit+0x114/0x118)

r10:00001cc4 r9:00211c48 r8:db808000 r7:00000000 r6:00000000 r5:00000014

r4:c0e4abb0

[<c012e2c8>] (irq_exit) from [<c016ef8c>] (__handle_domain_irq+0x60/0xb0)

[<c016ef2c>] (__handle_domain_irq) from [<c0452dfc>] (omap_intc_handle_irq+0x3c/0x98)

r9:00211c48 r8:10c53c7d r7:10c5387d r6:ffffffff r5:600b0030 r4:c0e7f17c

[<c0452dc0>] (omap_intc_handle_irq) from [<c0101e14>] (__irq_usr+0x54/0x80)

Exception stack(0xda969fb0 to 0xda969ff8)

9fa0: 00000000 0020fe38 ffffffff 0020fe38

9fc0: 00211c38 b6b7a7ac 00211c40 b6b7a7a4 00000000 00211c48 00001cc4 001b7c51

9fe0: 00000000 b628fbe8 0008d0b9 b6ae1926 600b0030 ffffffff

r5:600b0030 r4:b6ae1926