Part Number: TDA2EXEVM

Hi,

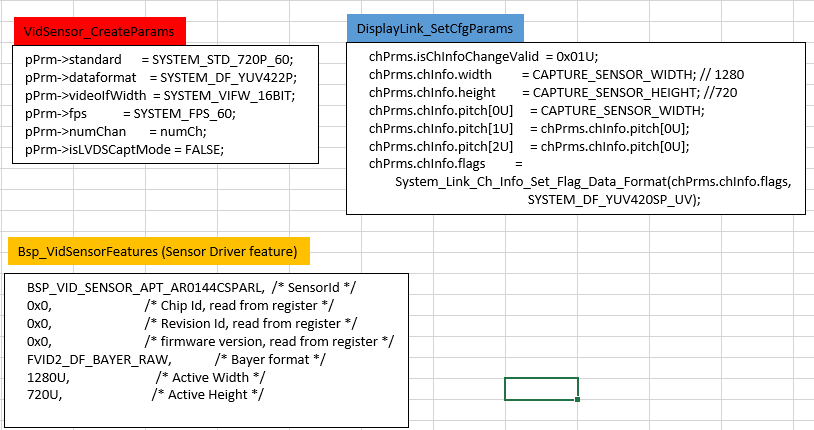

We are working to enable a custom Aptina camera module (AR0140 with parallel interface output) that is connected to the TDA2X Vision board.

The board information is as follows:

Vision SDK Version : [REL_VISION_SDK_03_07_00_00]

FVID2 Version : [FVID_02_01_00_01]

BSP Version : [PDK_01_10_03_xx]

Platform : [EVM]

SOC : [TDA2XX]

SOC Revision : [ES2.0]

Board Detected : [Vision]

EEPROM Board Info Header Mismatch!!

Base Board Revision : [REV A]

Daughter Card Revision: [REV D]

Following the user guide of the VisionSuper28 Vision Application Board (page 5), we connected the sensor board to I2C2 (SDA/CLK) and to the Expansion connector (MUX1, MUX2, VIN1A).

P.S. The board is running the default CPLD image.

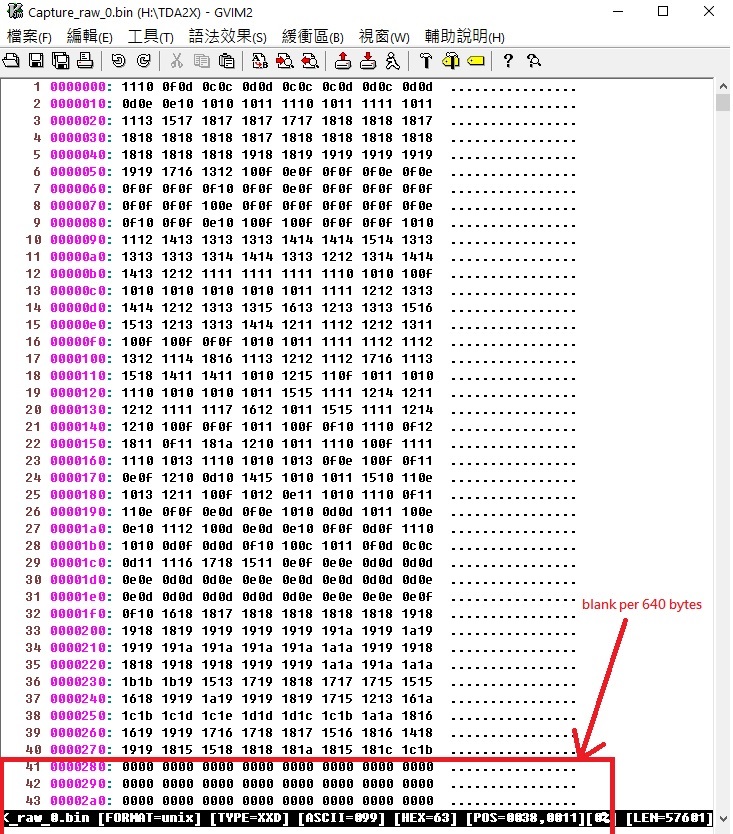

We tried configuring the Video Config DIP switch (SW3), but the following I2C error messages appear when the sensor configuration (1280x720 @ 30 fps) is initialized:

Could you advise how to resolve this issue and get a camera preview? Thanks.

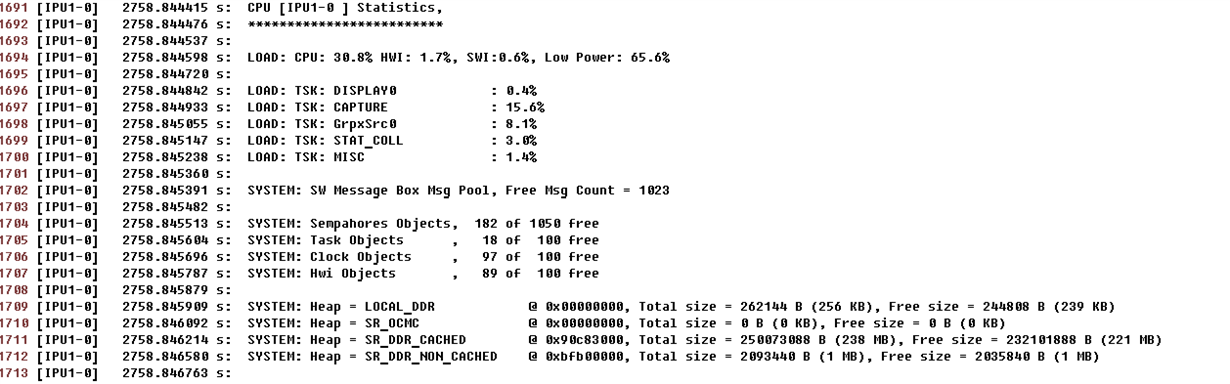

[IPU1-0] 7.487464 s: src/bsp_deviceI2c.c @ Line 1568:

[IPU1-0] 7.487555 s: Bus busy detected recover I2C bus !!!

[IPU1-0] 7.487647 s: src/bsp_deviceI2c.c @ Line 923:

[IPU1-0] 7.487738 s: I2C2: DEV 0x10: WR 0x301a = 0x00d9 ... ERROR !!!

[IPU1-0] 7.487891 s: src/bsp_deviceI2c.c @ Line 945:

[IPU1-0] 7.487952 s: I2C2: Error timeout 3 ms!!!

[IPU1-0] 7.690721 s: src/bsp_deviceI2c.c @ Line 1568:

[IPU1-0] 7.691026 s: Bus busy detected recover I2C bus !!!

[IPU1-0] 7.691118 s: src/bsp_deviceI2c.c @ Line 923:

[IPU1-0] 7.691209 s: I2C2: DEV 0x10: WR 0x301a = 0x30d8 ... ERROR !!!

[IPU1-0] 7.691301 s: src/bsp_deviceI2c.c @ Line 945:

[IPU1-0] 7.691392 s: I2C2: Error timeout 4 ms!!!
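
For reference, here is a minimal sketch in C of how we understand the failing write to be framed on the bus. It assumes that the sensor takes 16-bit register addresses and 16-bit data, both sent MSB first, and that the `0x10` shown in the log is a 7-bit device address. The helper names `sensor_pack_write16` and `i2c_write_addr_byte` are hypothetical and are not part of the BSP.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper (not a BSP function): pack a 16-bit register
 * address and a 16-bit value MSB first into a 4-byte payload.
 * Assumption: the sensor expects this byte order. */
size_t sensor_pack_write16(uint16_t reg, uint16_t val, uint8_t buf[4])
{
    assert(buf != NULL);
    buf[0] = (uint8_t)(reg >> 8);    /* register address, high byte */
    buf[1] = (uint8_t)(reg & 0xFFu); /* register address, low byte  */
    buf[2] = (uint8_t)(val >> 8);    /* data, high byte             */
    buf[3] = (uint8_t)(val & 0xFFu); /* data, low byte              */
    return 4;
}

/* Hypothetical helper: convert a 7-bit I2C address into the 8-bit
 * address byte sent on the wire for a write (R/W bit = 0). */
uint8_t i2c_write_addr_byte(uint8_t addr7)
{
    return (uint8_t)((addr7 & 0x7Fu) << 1);
}
```

Under these assumptions, the first failing transaction (`DEV 0x10: WR 0x301a = 0x00d9`) would be the address byte `0x20` followed by the payload `30 1A 00 D9`. If the module actually responds at a different address, the write would never be acknowledged.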

BRs,

Sam. Hsieh