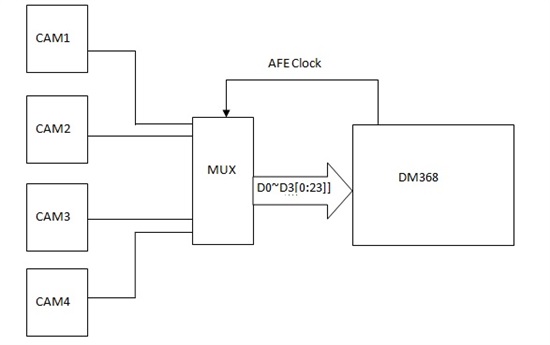

I have a customer who is considering feeding 4 CCD sensors into an FPGA and merging them into a single 4xD1-resolution (HD/2) video stream. They also need an option to output the 4 D1 videos locally on separate RCA connectors. Would the DM368 be a good fit, or which processors would be better? Thanks
