Hi TI Team,

I would like to implement offline data processing in Python based on the TrueGroundSpeed demo for the AWRL6432 AOP. I am referring to the content and setup from this post:

AWRL6432: TrueGroundSpeed Conveyor Belt Configuration using 6432AOP

So far, I have created Range-Doppler plots and a simple CFAR evaluation for a single range bin, and the results look plausible:

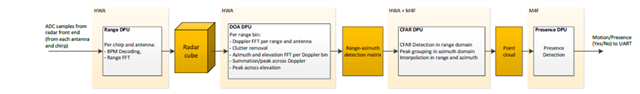

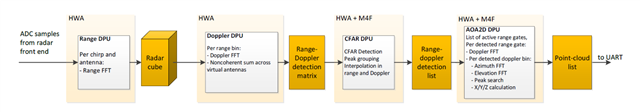
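For comparison with the single-range-bin CFAR mentioned above, a generic cell-averaging CFAR (CA-CFAR) along the Doppler axis could be sketched as follows. This is not the demo's detection code; the function name, the guard/training cell counts, the threshold scale and the cyclic wrap-around are assumed example values.

```python
import numpy as np


def ca_cfar_1d(power, num_guard=2, num_train=8, scale=4.0, cyclic=True):
    """Cell-averaging CFAR on a 1D power profile (e.g. Doppler bins of one range bin).

    A cell is marked as a detection if its power exceeds
    scale * mean(power of the training cells). Guard cells directly next
    to the cell under test (CUT) are excluded from the noise estimate.
    All parameter defaults are illustrative assumptions.
    """
    power = np.asarray(power, dtype=float)
    n = power.size
    detections = np.zeros(n, dtype=bool)
    for cut in range(n):
        train = []
        # Training cells lie beyond the guard cells on both sides of the CUT.
        for offset in range(num_guard + 1, num_guard + num_train + 1):
            for idx in (cut - offset, cut + offset):
                if cyclic:
                    train.append(power[idx % n])  # Doppler axis wraps around
                elif 0 <= idx < n:
                    train.append(power[idx])      # skip cells outside the profile
        if train:
            noise = np.mean(train)
            detections[cut] = power[cut] > scale * noise
    return detections
```

Usage, with a hypothetical power map `rd_power` of shape (num_doppler_bins, num_range_bins): `hits = ca_cfar_1d(rd_power[:, range_bin])`.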

However, I am still unsure how the signal processing is implemented internally on the 6432. (I mainly used the Motion/Presence Detection Tuning Guide and the DPU description in the TI Resource Explorer as references.)

Questions for offline processing:

How and in which step do I have to perform the BPM demodulation?
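For reference, generic 2-TX BPM decoding can be sketched as below. Assumptions (not confirmed for the 6432 demo): decoding is applied to the range-FFT output before the Doppler FFT, chirps come in pairs A = TX0 + TX1 and B = TX0 - TX1 (TX1 phase-shifted by 180° in the second chirp), and the data layout is (num_chirps, num_rx, num_range_bins). The sketch also ignores the phase change that a moving target causes between the two chirps of a pair. The function name is hypothetical.

```python
import numpy as np


def bpm_decode(radar_cube):
    """Decode 2-TX BPM chirp pairs (assumed scheme and layout).

    radar_cube: complex range-FFT output, shape (num_chirps, num_rx, num_range_bins),
    with chirps alternating A, B where
        A = TX0 + TX1   (both TX with phase 0)
        B = TX0 - TX1   (TX1 with 180 deg phase)
    Returns shape (num_chirps // 2, 2, num_rx, num_range_bins); axis 1 is the TX index.
    """
    radar_cube = np.asarray(radar_cube)
    if radar_cube.shape[0] % 2:
        raise ValueError("BPM decoding needs an even number of chirps")
    a = radar_cube[0::2]  # chirps carrying TX0 + TX1
    b = radar_cube[1::2]  # chirps carrying TX0 - TX1
    tx0 = 0.5 * (a + b)   # (A + B) / 2 recovers TX0
    tx1 = 0.5 * (a - b)   # (A - B) / 2 recovers TX1
    return np.stack((tx0, tx1), axis=1)
```

The decoded output then contains num_tx * num_rx virtual channels per chirp pair, so the Doppler FFT would run over num_chirps // 2 samples.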

Should I also use the "compRangeBiasAndRxChanPhase" values for calibration in my offline signal processing?
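A minimal sketch of how the `compRangeBiasAndRxChanPhase` values could be applied offline, assuming an mmWave-SDK-style CLI format (range bias in meters, followed by one Re/Im pair per virtual antenna in TX-major order) and assuming the complex values are multiplicative correction factors. Both the ordering and the multiply-vs-divide convention should be checked against the demo source; the helper names are hypothetical.

```python
import numpy as np


def parse_comp_cfg(line, num_tx, num_rx):
    """Parse a 'compRangeBiasAndRxChanPhase' CLI line (assumed format).

    Returns (range_bias_m, coeffs) with complex coeffs of shape (num_tx, num_rx).
    """
    tokens = line.split()
    if tokens[0] != "compRangeBiasAndRxChanPhase":
        raise ValueError("not a compRangeBiasAndRxChanPhase line")
    values = [float(t) for t in tokens[1:]]
    expected = 1 + 2 * num_tx * num_rx
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")
    range_bias = values[0]
    re = np.array(values[1::2])  # Re parts at positions 1, 3, 5, ...
    im = np.array(values[2::2])  # Im parts at positions 2, 4, 6, ...
    coeffs = (re + 1j * im).reshape(num_tx, num_rx)  # assumed TX-major order
    return range_bias, coeffs


def apply_rx_phase_comp(decoded_cube, coeffs):
    """Multiply each virtual channel by its coefficient (assumed multiplicative).

    decoded_cube: shape (num_chirp_pairs, num_tx, num_rx, num_range_bins).
    """
    return decoded_cube * coeffs[np.newaxis, :, :, np.newaxis]
```

The range bias would then presumably be subtracted from the range axis, e.g. `range_axis_m = np.arange(num_range_bins) * range_resolution_m - range_bias` (hypothetical variable names). For a magnitude Range-Doppler map of a single virtual channel, a coefficient with magnitude close to 1 has no visible effect; the phase part matters when channels are combined coherently.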

Is it correct that I also have to use a rectangular window for the second (Doppler) FFT and set the first Doppler bin (zero velocity) to "0"?
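The step in question (Doppler FFT along the chirp axis with a rectangular window, then zeroing Doppler bin 0) can be sketched like this. The function name and the array layout (chirps on axis 0) are assumptions for illustration; bin 0 refers to the unshifted FFT output, i.e. before any `fftshift`.

```python
import numpy as np


def doppler_fft(range_profiles, window=None, zero_dc=True):
    """Doppler FFT along the chirp axis (axis 0).

    range_profiles: complex array of shape (num_chirps, ..., num_range_bins).
    window: 1D array of length num_chirps; None means rectangular (all ones).
    zero_dc: if True, set Doppler bin 0 (zero velocity) to 0, which removes
             static reflections. Bin 0 is taken from the unshifted FFT output.
    """
    x = np.asarray(range_profiles)
    num_chirps = x.shape[0]
    if window is None:
        window = np.ones(num_chirps)  # rectangular window = no tapering
    window = np.asarray(window, dtype=float)
    if window.shape != (num_chirps,):
        raise ValueError("window length must equal the number of chirps")
    # Reshape so the window broadcasts along the chirp axis only.
    shape = (num_chirps,) + (1,) * (x.ndim - 1)
    spectrum = np.fft.fft(x * window.reshape(shape), axis=0)
    if zero_dc:
        spectrum[0] = 0
    return spectrum
```

With a rectangular window, zeroing bin 0 gives the same result as subtracting the per-range-bin mean over all chirps before the FFT (a common static clutter removal); with a tapered window the two are no longer equivalent. For plotting, `np.fft.fftshift(spectrum, axes=0)` moves zero velocity to the center.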

Of course, I am also searching the demo source code in CCS for this information. Still, detailed documentation to refer to, similar to the tuning guide, would be very helpful.

Best regards,

Tobias