Hi everyone!

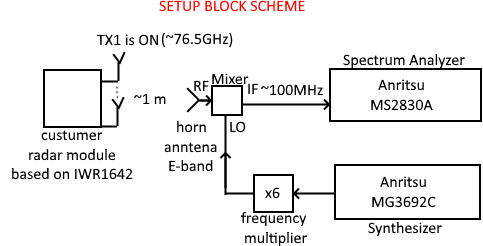

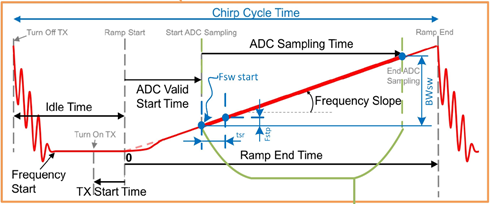

I am building a radar based on the IWR1642 IC. I program the following chirp parameters (see image #1):

Frequency start: 1425452105 (76.49546177 GHz);

Idle Time: 400 (4 usec);

TX Start Time: -400 (-4 usec);

ADC Valid Start Time: 200 (2 usec);

ADC Sampling Time: 2 usec;

Ramp End Time: 6 usec;

tsr: 0.25 usec;

BWsw: 4 MHz.
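As a sanity check on the timing above, here is a minimal sketch that converts the raw register codes to microseconds and works out how much of the ramp is left after the ADC window. It assumes a 10 ns LSB for the time fields (which is what "400 (4 usec)" implies) and assumes that "tsr" is the ADC sample period; the names `code_to_us`, `tail_guard_us` and so on are just illustrative, not anything from the TI SDK.

```python
# Assumption: time fields use a 10 ns LSB, as implied by "400 (4 usec)".
TIME_LSB_NS = 10


def code_to_us(code):
    """Convert a raw time register code to microseconds."""
    return code * TIME_LSB_NS / 1000


idle_us = code_to_us(400)        # Idle Time
tx_start_us = code_to_us(-400)   # TX Start Time (negative: TX on before ramp start)
adc_start_us = code_to_us(200)   # ADC Valid Start Time
adc_sampling_us = 2.0            # ADC Sampling Time, taken from the post
ramp_end_us = 6.0                # Ramp End Time, taken from the post
sample_period_us = 0.25          # Assumption: "tsr" is the ADC sample period

# End of the ADC window, measured from the start of the ramp.
adc_end_us = adc_start_us + adc_sampling_us
# Part of the ramp that remains after the last ADC sample.
tail_guard_us = ramp_end_us - adc_end_us
# Number of ADC samples that fit in the sampling window.
num_samples = round(adc_sampling_us / sample_period_us)

print(f"idle = {idle_us} us, TX start = {tx_start_us} us")
print(f"ADC window = {adc_start_us} .. {adc_end_us} us of a {ramp_end_us} us ramp")
print(f"tail guard = {tail_guard_us} us, samples = {num_samples}")
```

With these numbers the ADC window sits in the middle third of the ramp, with 2 usec of ramp before it and 2 usec after it.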

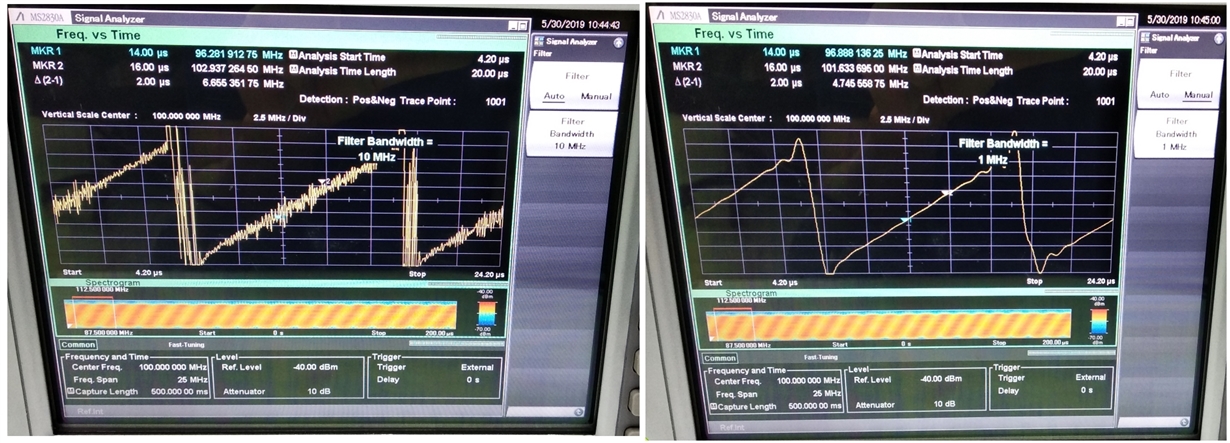

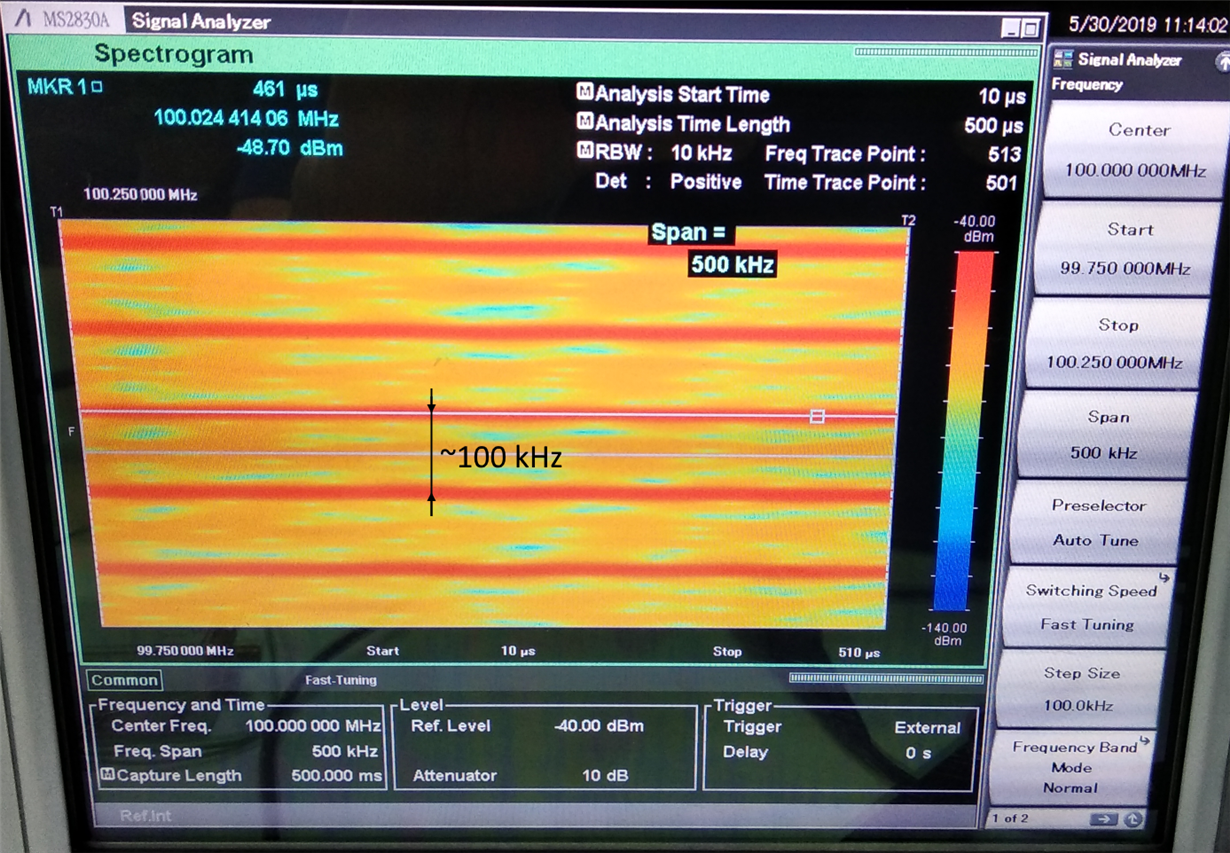

Image #2 shows the down-converted chirp frequency versus time. The sawtooth ramp is visibly nonlinear in the working region, and my application is sensitive to this. How can I make the ramp more linear? Increasing the guard intervals does not help.
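To put a number on "nonlinear", one option is to fit a straight line to the measured frequency-versus-time points inside the ADC window and look at the largest deviation from that line. The sketch below does this with a plain least-squares fit. The sample values are synthetic placeholders (an ideal ramp plus a small quadratic bend), not measurements from my setup, and the function names are made up for the example.

```python
def fit_line(t, f):
    """Least-squares straight line through (t, f); returns (slope, intercept)."""
    n = len(t)
    mean_t = sum(t) / n
    mean_f = sum(f) / n
    sxx = sum((x - mean_t) ** 2 for x in t)
    sxy = sum((x - mean_t) * (y - mean_f) for x, y in zip(t, f))
    slope = sxy / sxx
    return slope, mean_f - slope * mean_t


def max_deviation(t, f):
    """Largest absolute distance between the points and their best-fit line."""
    slope, intercept = fit_line(t, f)
    return max(abs(y - (slope * x + intercept)) for x, y in zip(t, f))


# Synthetic placeholder data: 8 points spaced 0.25 us apart.
t = [0.25 * i for i in range(8)]
ideal = [2.0 * x for x in t]                 # perfectly linear ramp
bent = [2.0 * x + 0.1 * x * x for x in t]    # ramp with a quadratic bend

print("ideal ramp deviation:", max_deviation(t, ideal))
print("bent ramp deviation: ", max_deviation(t, bent))
```

Running the same calculation on the real points from image #2 before and after a configuration change would show whether the change actually improved linearity.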