Hello,

I'm starting a new thread about an issue with the LBT algorithm that affects message transmission in the TI 15.4 stack.

I'm working on an application based on the collector and sensor examples provided with the TI 15.4 stack. The hardware is based on the CC1312, and I'm using SDK version 8.30.01.01.

TI 15.4 Stack Configuration:

* Mode: Beacon-enabled

* Frequency Band: 863–869 MHz

* Regulatory Type: ETSI

* PHY Type: 200 kbps, 2-GFSK

* MAC Beacon Order (BO): 10 (~4.9 s)

* MAC Superframe Order (SO): 3 (~38 ms RX window)
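For reference, the two timing values above can be reproduced with a minimal sketch. It assumes the IEEE 802.15.4 constant aBaseSuperframeDuration = 960 symbols and one bit per symbol for 2-GFSK (5 µs per symbol at 200 kbps); neither assumption is taken from the SDK documentation, and the function names are only illustrative.

```python
# Sketch only. Assumptions (not confirmed against the TI 15.4 stack sources):
#   - aBaseSuperframeDuration = 960 symbols (IEEE 802.15.4 constant)
#   - 2-GFSK carries 1 bit per symbol, so 200 kbps -> 5 us per symbol
A_BASE_SUPERFRAME_DURATION = 960  # symbols


def symbol_period_s(bit_rate_bps, bits_per_symbol=1):
    """Duration of one symbol in seconds."""
    return bits_per_symbol / bit_rate_bps


def beacon_interval_s(beacon_order, bit_rate_bps=200_000):
    """Beacon interval: aBaseSuperframeDuration * 2^BO symbols."""
    symbols = A_BASE_SUPERFRAME_DURATION * (2 ** beacon_order)
    return symbols * symbol_period_s(bit_rate_bps)


def superframe_duration_s(superframe_order, bit_rate_bps=200_000):
    """Active superframe duration: aBaseSuperframeDuration * 2^SO symbols."""
    symbols = A_BASE_SUPERFRAME_DURATION * (2 ** superframe_order)
    return symbols * symbol_period_s(bit_rate_bps)


if __name__ == "__main__":
    bi = beacon_interval_s(10)      # BO = 10
    sd = superframe_duration_s(3)   # SO = 3
    print(f"Beacon interval:     {bi:.3f} s")
    print(f"Superframe duration: {sd * 1e3:.1f} ms")
    print(f"Active fraction:     {sd / bi:.2%}")
```

With BO = 10 and SO = 3 this gives roughly 4.9 s and 38 ms, matching the values listed above, so all responses and data requests have to share an active window of about 38 ms per beacon interval.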

The application operates in beacon mode. The test setup consists of a collector and two sensor devices. There are two types of beacons:

* General beacon: Not intended for any specific sensor - sensors do not respond in normal conditions.

* Dedicated beacon: Addressed to a specific sensor - only the intended sensor responds.

Under normal conditions, the system works as expected with these beacon types. However, issues arise when the collector sends data packets to the sensors using indirect (AUTO_REQUEST_ON) data transfer. In this case, during each beacon interval:

* The sensor that receives a dedicated beacon sends a response.

* Another sensor, for which data is pending, sends a data request to retrieve the data.

Problem: Occasionally, RF packet collisions occur, leading to lost messages, despite all transmissions theoretically fitting within the superframe duration.

Debugging Setup:

I routed the RF debug output signals to GPIOs to observe TX/RX timing (see "Debugging RF output"). Below is a summary of the test cases and findings, based on sniffer logs and oscilloscope traces:

Test Cases:

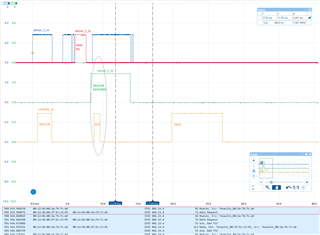

Case 1 – Expected behavior:

* Collector sends a beacon dedicated to sensor_2 (data pending for sensor_1).

* Sensor_2 responds to its beacon.

* Collector ACKs the response.

* Sensor_1 sends DATA_RQ, receives the ACK and the data from the collector, and sends the final ACK.

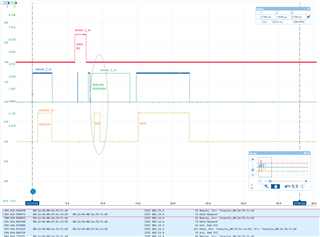

Case 2 – Expected behavior:

* Collector sends a general beacon (no response from sensor_2).

* Sensor_1 sends DATA_RQ, receives the ACK and the data from the collector, and sends the final ACK.

Case 3 – Unexpected behavior:

* Collector sends a beacon dedicated to sensor_2 (data pending for sensor_1).

* Sensor_1 sends DATA_RQ first, before sensor_2 has responded to the beacon.

* The collector's ACK to sensor_1 and sensor_2's response to the beacon are transmitted at the same time.

* Result: RF collision, packets are lost.

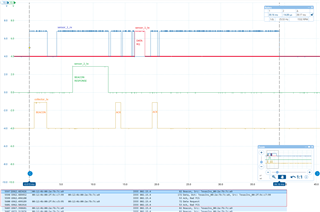

Case 4 – Unexpected behavior:

* Collector sends a beacon dedicated to sensor_2 (data pending for sensor_1).

* Sensor_2 responds to the beacon and receives ACK.

* Sensor_1 sends DATA_RQ and receives the ACK, but the collector never transmits the pending data packet.

Summary:

In cases 3 and 4, overlapping transmissions or lost packets are observed. In case 3 the traces show two devices transmitting at the same time, which suggests that channel sensing does not prevent the overlap. We experimented with adjusting parameters such as macMinBE, macMaxBE, and CONFIG_MAC_MAX_CSMA_BACKOFFS, but the problem persists.
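To put the tuned parameters into perspective, here is a rough sketch of the random backoff windows they would produce under the generic IEEE 802.15.4 CSMA-CA rules. It assumes aUnitBackoffPeriod = 20 symbols and a 5 µs symbol period, and the example values (macMinBE = 3, macMaxBE = 5, 4 backoffs) are the IEEE 802.15.4 defaults, not necessarily what we ended up using. Whether the ETSI LBT path in the TI 15.4 stack applies these parameters in the same way is exactly what I'm unsure about, so treat this as an illustration only.

```python
# Sketch only: generic IEEE 802.15.4 CSMA-CA backoff windows.
# Assumptions (not confirmed for the TI 15.4 stack ETSI/LBT implementation):
#   - aUnitBackoffPeriod = 20 symbols
#   - symbol period = 5 us (200 kbps, 1 bit per symbol)
A_UNIT_BACKOFF_PERIOD = 20   # symbols
SYMBOL_PERIOD_S = 5e-6       # seconds


def backoff_windows_s(min_be, max_be, max_csma_backoffs):
    """Maximum random delay (seconds) before each channel assessment.

    The backoff exponent BE starts at min_be and grows by one after every
    busy channel assessment, capped at max_be. There are
    max_csma_backoffs + 1 attempts before the MAC reports a channel
    access failure.
    """
    windows = []
    be = min_be
    for _ in range(max_csma_backoffs + 1):
        max_periods = 2 ** be - 1  # delay is drawn from 0..(2^BE - 1) periods
        windows.append(max_periods * A_UNIT_BACKOFF_PERIOD * SYMBOL_PERIOD_S)
        be = min(be + 1, max_be)
    return windows


if __name__ == "__main__":
    # IEEE 802.15.4 default values, used here purely as an example.
    for attempt, w in enumerate(backoff_windows_s(3, 5, 4)):
        print(f"attempt {attempt}: delay up to {w * 1e3:.1f} ms")
```

Under these assumptions the first backoff window is well under 1 ms, which is short compared with the ~38 ms superframe, so two devices that both become ready right after the beacon can easily pick delays that are close together.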

Main Questions:

What could be causing these packet collisions or losses, and how exactly does the LBT mechanism operate in beacon-enabled mode when indirect data transmissions are pending?

Is it possible to avoid these conflicts, and if so, which parameters should be tuned to improve reliability?

Any insights, guidance, or recommendations are highly appreciated.