Hello,

I'm implementing an application based on the ti154stack examples "collector" and "sensor". The MCUs are CC1312, and the SDK is 6.41.00.17.

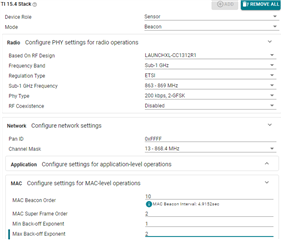

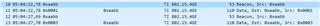

Mode: beaconed; BO: 10 (~4.9 s); SO: 2 (~19 ms RX); Freq: 868 MHz; Regulation: ETSI
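The quoted durations follow from the IEEE 802.15.4 superframe formulas BI = aBaseSuperframeDuration × 2^BO and SD = aBaseSuperframeDuration × 2^SO (in symbols), where aBaseSuperframeDuration = 960 symbols. The sketch below assumes a 5 µs symbol period (200 kbps 2-GFSK). That value is inferred from the ~4.9 s and ~19 ms figures and is not stated in the post. As a hypothetical helper, it also shows the window in which the next beacon payload could be rewritten if the collector knew when the beacon was sent. `beaconStartUs` and the `GUARD_US` margin are assumptions, not TI 15.4-Stack values.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* IEEE 802.15.4: aBaseSuperframeDuration = aBaseSlotDuration (60) x aNumSuperframeSlots (16) */
#define BASE_SUPERFRAME_DURATION_SYMBOLS 960u

/* Assumption: 5 us per symbol (200 kbps 2-GFSK), inferred from ~4.9 s / ~19 ms. */
#define SYMBOL_PERIOD_US 5u

/* Hypothetical margin before the next beacon so the MAC has time to build the frame. */
#define GUARD_US 50000u

/* Beacon interval (BI) in microseconds for beacon order bo. */
static uint64_t beacon_interval_us(unsigned bo)
{
    assert(bo <= 14u); /* BO = 15 means non-beacon mode */
    return (uint64_t)BASE_SUPERFRAME_DURATION_SYMBOLS * (1ull << bo) * SYMBOL_PERIOD_US;
}

/* Superframe duration (SD), i.e. the active/RX period, in microseconds. */
static uint64_t superframe_duration_us(unsigned so)
{
    assert(so <= 14u);
    return (uint64_t)BASE_SUPERFRAME_DURATION_SYMBOLS * (1ull << so) * SYMBOL_PERIOD_US;
}

/* True if nowUs lies after the active period of the beacon sent at
 * beaconStartUs (hypothetical timestamp) and at least GUARD_US before
 * the next beacon. Assumes beacons keep repeating every biUs. */
static bool payload_update_safe(uint64_t nowUs, uint64_t beaconStartUs,
                                uint64_t biUs, uint64_t sdUs)
{
    assert(sdUs + GUARD_US < biUs);
    if (nowUs < beaconStartUs) {
        return false;
    }
    uint64_t elapsed = (nowUs - beaconStartUs) % biUs; /* offset into the current interval */
    return elapsed >= sdUs && elapsed < biUs - GUARD_US;
}
```

With BO = 10 and SO = 2, `beacon_interval_us(10)` returns 4915200 µs (~4.9 s) and `superframe_duration_us(2)` returns 19200 µs (~19 ms). Both values match the settings above under the 5 µs symbol assumption.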

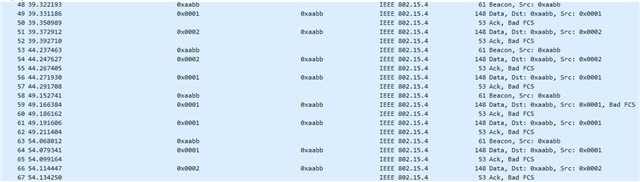

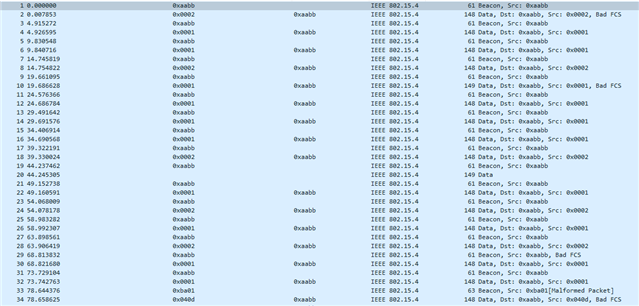

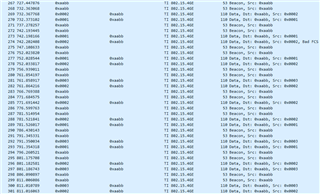

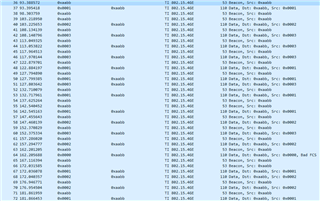

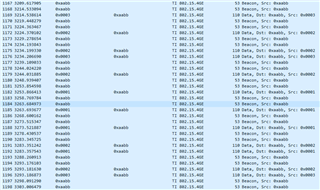

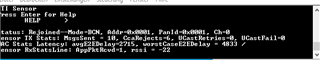

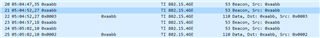

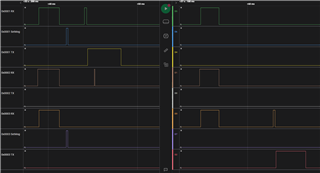

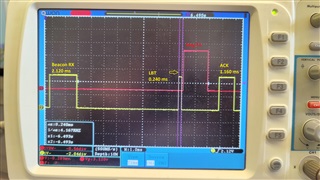

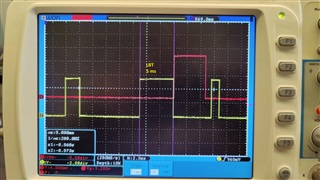

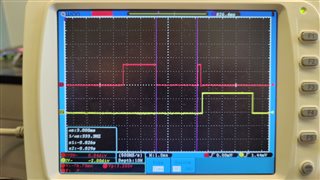

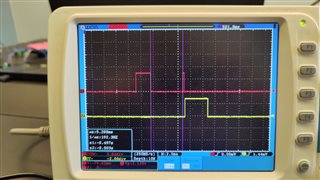

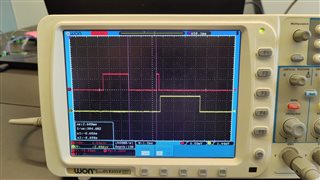

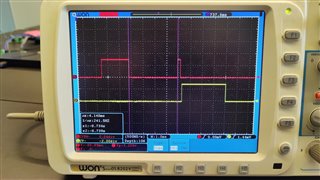

The application uses beaconed mode, and the devices are battery powered. The idea is that the collector coordinates when each sensor sends data packets. The collector fills the beacon payload, and each sensor reads its contents to learn when it is expected to send a message. The message should be delivered within the same beacon's RX (active) period of the collector.

I have two types of messages from the sensors: tracking and alarms. Tracking works fine because only one device sends per beacon. Alarm messages are a different story. Every second beacon announces that any sensor may send an alarm message if it needs to, so zero or several devices may have alarms pending. My aim is to let sensors send data with CSMA backoffs and retries disabled and without waiting for an ACK. A sensor learns whether its message was delivered from the contents of the next beacon. If several sensors have alarms pending, only one should be able to deliver, because the others will detect that the channel is occupied. In that case I expect their messages to be dropped and reported as not delivered.

Most of the time the application behaves as described. However, sometimes two sensors try to send an alarm within the same beacon. One delivers successfully. The other also delivers successfully, but on the next beacon or even two beacons later. This behaviour interferes with tracking messages and is not welcome.
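To make the scheduling scheme concrete, here is a minimal sketch of a beacon payload and the sensor-side decision logic. The layout (`SchedPayload`, its fields, and both helper functions) is entirely hypothetical. It only illustrates the idea described above and is neither the actual payload format nor part of the TI 15.4-Stack API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define SCHED_MAX_ACKED 4u /* hypothetical: alarm senders confirmed per beacon */

/* Hypothetical beacon payload layout (illustration only). */
typedef struct {
    uint16_t trackingOwner;                /* short address allowed to send tracking data */
    bool     alarmWindow;                  /* set on every second beacon: any sensor may send an alarm */
    uint8_t  numAcked;                     /* valid entries in ackedAlarms */
    uint16_t ackedAlarms[SCHED_MAX_ACKED]; /* alarm senders received in the previous active period */
} SchedPayload;

/* Sensor side: may this device transmit during the current active period? */
static bool sched_may_send(const SchedPayload *p, uint16_t myAddr, bool alarmPending)
{
    assert(p != NULL);
    if (alarmPending && p->alarmWindow) {
        return true;                   /* contention slot: no backoff, no retry, no ACK */
    }
    return p->trackingOwner == myAddr; /* dedicated tracking slot */
}

/* Sensor side: did the collector report our alarm as delivered? */
static bool sched_alarm_delivered(const SchedPayload *p, uint16_t myAddr)
{
    assert(p != NULL);
    for (uint8_t i = 0; i < p->numAcked && i < SCHED_MAX_ACKED; i++) {
        if (p->ackedAlarms[i] == myAddr) {
            return true;
        }
    }
    return false; /* not listed: treat the alarm as lost and resend in a later alarm window */
}
```

In this sketch a sensor that loses the contention never retries on its own. It only resends after the next beacon omits it from `ackedAlarms`, which is the behaviour the scheme relies on.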

This raises two questions:

- Is there a way for the collector application to know exactly when a beacon has been sent, or when the beacon's RX period has finished? I need to know when it is safe to set the next beacon payload.

- The sensor uses the following configuration and message txOptions:

```c
/* Number of max data retries */
#define CONFIG_MAX_RETRIES 0

/*! MAC MAX CSMA Backoffs */
#define CONFIG_MAC_MAX_CSMA_BACKOFFS 0

txOptions.ack = false;
txOptions.indirect = true;
txOptions.pendingBit = false;
txOptions.noRetransmits = true;
txOptions.noConfirm = false;
txOptions.useAltBE = false;
txOptions.usePowerAndChannel = false;
txOptions.useGreenPower = false;
```

Can you confirm that the message should be dropped if LBT detects that the channel is not accessible? If so, can you point out what could cause such a message to be delivered several beacons later (there are no other messages in the TX queue)?
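The expected behaviour can be written as a small model. With zero CSMA backoffs, a busy channel at the single channel-access attempt should end the request immediately with a channel-access failure instead of deferring the frame. This is a hypothetical, simplified model of the IEEE 802.15.4 CSMA-CA loop (NB compared against macMaxCSMABackoffs). It treats each attempt as one clear/busy outcome and ignores slotted CSMA-CA contention-window details and any ETSI LBT specifics. The enum and function are not TI 15.4-Stack code. Frame retries are left out because in 802.15.4 they apply to missing ACKs, and `ack` is disabled here.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical result codes for the model (not ApiMac status values). */
typedef enum {
    TX_RESULT_SUCCESS,
    TX_RESULT_CHANNEL_ACCESS_FAILURE
} TxResult;

/* ccaBusy[i] is the outcome of the i-th channel-access attempt.
 * maxBackoffs mirrors CONFIG_MAC_MAX_CSMA_BACKOFFS. */
static TxResult model_csma_send(const bool *ccaBusy, unsigned numCca, unsigned maxBackoffs)
{
    /* NB counts busy assessments; the frame is abandoned once NB exceeds maxBackoffs. */
    for (unsigned nb = 0; nb <= maxBackoffs; nb++) {
        assert(nb < numCca);
        if (!ccaBusy[nb]) {
            return TX_RESULT_SUCCESS; /* channel clear: frame is sent now */
        }
    }
    return TX_RESULT_CHANNEL_ACCESS_FAILURE; /* dropped and reported in the data confirm */
}
```

With `maxBackoffs = 0`, one busy assessment is enough to return `TX_RESULT_CHANNEL_ACCESS_FAILURE`. Under this model, a frame sent one or two beacons later would have to come from somewhere other than the CSMA loop.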