Hi all,

In our custom design, we have interfaced a TMS320C6678 DSP to an FPGA over the EMIF16 interface. We intend to use the EMIF to access FIFO memory on the FPGA for transferring data to and from the FPGA. EMIF16 is running at a 166 MHz clock. The EMIF16 timings programmed on the DSP are listed below:

1) Write setup: 2 cycles - 12 ns

2) Write strobe: 4 cycles - 24 ns

3) Write hold: 1 cycle - 6 ns

4) Read setup: 2 cycles - 12 ns

5) Read strobe: 8 cycles - 48 ns

6) Read hold: 1 cycle - 6 ns

7) Turnaround time: 2 cycles - 12 ns

Extended wait is disabled.
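For reference, the cycle-to-nanosecond figures above can be reproduced with a small helper. This is only a sketch: the type and function names are hypothetical, and it assumes the 166 MHz EMIF16 clock stated above, which gives a period of slightly more than the 6 ns used in the list.

```c
#include <assert.h>

#define EMIF_CLK_MHZ 166.0  /* EMIF16 clock quoted in this post */

/* Programmed cycle counts for one access direction (hypothetical type). */
typedef struct {
    unsigned setup;
    unsigned strobe;
    unsigned hold;
} emif_phase_cycles;

/* Total EMIF clock cycles of one access: setup + strobe + hold. */
unsigned emif_access_cycles(emif_phase_cycles p)
{
    assert(p.setup >= 1 && p.strobe >= 1 && p.hold >= 1);
    return p.setup + p.strobe + p.hold;
}

/* Convert a cycle count to nanoseconds at EMIF_CLK_MHZ. */
double emif_cycles_to_ns(unsigned cycles)
{
    return cycles * 1000.0 / EMIF_CLK_MHZ;
}
```

With the values listed, a write access is 2 + 4 + 1 = 7 cycles (about 42 ns) and a read access is 2 + 8 + 1 = 11 cycles (about 66 ns), not counting turnaround.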

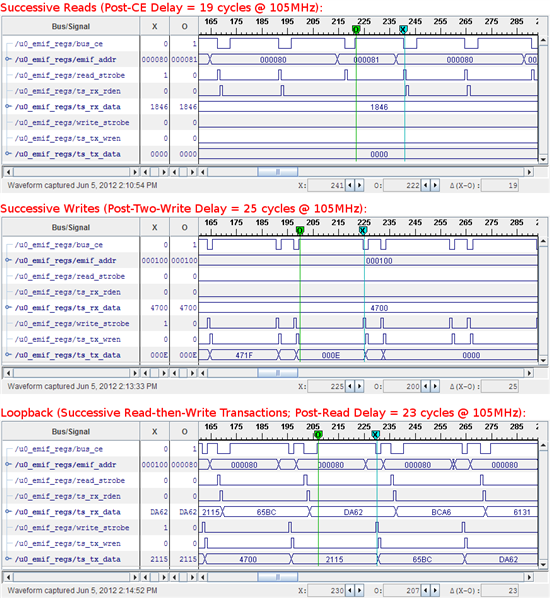

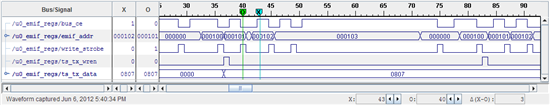

With the above settings, we ran a throughput test to estimate the data rate we can achieve on the EMIF bus. The test is a non-BIOS project that continuously writes to the FPGA FIFO register over EMIF in a loop. The test shows a data rate of 98 Mbps for the transfer. According to the timings we have programmed for the write, we should be getting a theoretical throughput of 16 bits / (write setup + write strobe + write hold) ~ 350 Mbps. We understand that the throughput can be lower than the theoretical maximum due to code overhead, but what we are seeing is more than a 50% loss. We probed the signals on the EMIF bus and observed a 24-cycle delay in the waveforms between two writes! We are unable to find the cause of this large delay. Does anyone have an idea where this delay could be coming from? I am including the code that does the EMIF configuration for your reference.
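As a rough cross-check of these figures, the arithmetic can be sketched as below. The function names are hypothetical, and the sketch assumes one 16-bit word per access at 166 MHz and ignores turnaround and any CPU-side overhead.

```c
#include <assert.h>

#define EMIF_CLK_HZ    166000000.0  /* EMIF16 clock quoted in this post */
#define BUS_WIDTH_BITS 16.0         /* one 16-bit word per access */

/* Throughput in Mbps when each 16-bit access occupies the given
 * number of EMIF clock cycles (programmed cycles plus any idle gap). */
double emif_throughput_mbps(unsigned cycles_per_access)
{
    assert(cycles_per_access > 0);
    return BUS_WIDTH_BITS * EMIF_CLK_HZ / cycles_per_access / 1e6;
}

/* Inverse: EMIF clock cycles per access implied by a measured rate. */
double emif_cycles_for_rate(double mbps)
{
    assert(mbps > 0.0);
    return BUS_WIDTH_BITS * EMIF_CLK_HZ / (mbps * 1e6);
}
```

Under these assumptions, 7 cycles per write gives roughly 379 Mbps, in the same range as the ~350 Mbps estimate. Adding the observed 24-cycle gap (31 cycles per write) gives roughly 86 Mbps, and the measured 98 Mbps corresponds to about 27 cycles per write, so the gap seen on the bus accounts for most of the shortfall.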

/* FOR CHIP SELECT 0 */

hEmif16Cfg->A0CR = (0 \

| (1u << 31) /* selectStrobe */ \

| (0 << 30) /* extWait */ \

| (1 << 26) /* writeSetup 12 ns */ \

| (3 << 20) /* writeStrobe 24 ns */ \

| (0 << 17) /* writeHold 6 ns */ \

| (1 << 13) /* readSetup 12 ns */ \

| (7 << 7) /* readStrobe 48 ns */ \

| (0 << 4) /* readHold 6 ns */ \

| (1 << 2) /* turnAround 12 ns */ \

| (1 << 0)); /* asyncSize 16-bit bus */ \

/* Set the wait polarity */

CSL_FINS(hEmif16Cfg->AWCCR, EMIF16_AWCCR_WP0, CSL_EMIF16_AWCCR_WP0_WAITLOW);

CSL_FINS(hEmif16Cfg->AWCCR, EMIF16_AWCCR_CE0WAIT, CSL_EMIF16_AWCCR_CE0WAIT_WAIT0);

hEmif16Cfg->AWCCR = (0x80 /* max extended wait cycle */ \

| (0 << 16) /* CE0 uses WAIT0 */ \

| (0 << 28)); /* WAIT0 polarity low */ \
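To double-check that the A0CR value matches the intended timings, the packing can be reproduced on a host PC. This is a sketch only: the struct and function names are hypothetical, the field widths are inferred from the shift positions used above, and the "cycles minus one" encoding is inferred from the values and comments in the snippet.

```c
#include <assert.h>
#include <stdint.h>

/* Cycle counts for one async chip select (hypothetical helper type). */
typedef struct {
    unsigned w_setup, w_strobe, w_hold;
    unsigned r_setup, r_strobe, r_hold;
    unsigned turnaround;
} emif_async_timing;

/* Encode a cycle count as (cycles - 1) at the given bit position. */
static uint32_t field(unsigned cycles, unsigned shift, unsigned width)
{
    assert(cycles >= 1 && cycles <= (1u << width));
    return (uint32_t)(cycles - 1u) << shift;
}

/* Build the register value using the same layout as the snippet above. */
uint32_t emif_pack_acr(const emif_async_timing *t)
{
    return (1u << 31)                     /* selectStrobe */
         | (0u << 30)                     /* extWait disabled */
         | field(t->w_setup,    26, 4)
         | field(t->w_strobe,   20, 6)
         | field(t->w_hold,     17, 3)
         | field(t->r_setup,    13, 4)
         | field(t->r_strobe,    7, 6)
         | field(t->r_hold,      4, 3)
         | field(t->turnaround,  2, 2)
         | (1u << 0);                     /* asyncSize: 16-bit bus */
}

/* Read a field back as a cycle count (stored value + 1). */
unsigned emif_unpack_cycles(uint32_t reg, unsigned shift, unsigned width)
{
    return ((reg >> shift) & ((1u << width) - 1u)) + 1u;
}
```

Packing the timings 2/4/1 (write), 2/8/1 (read) and a turnaround of 2 yields 0x84302385, which can be compared against the value read back from A0CR in the debugger.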

-Anil