Other Parts Discussed in Thread: DAC8551

Hello,

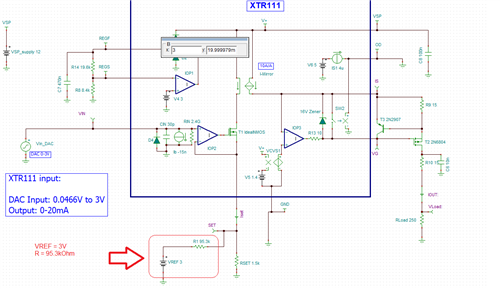

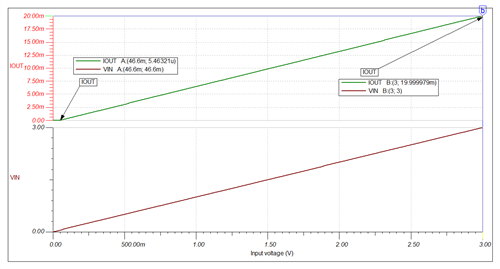

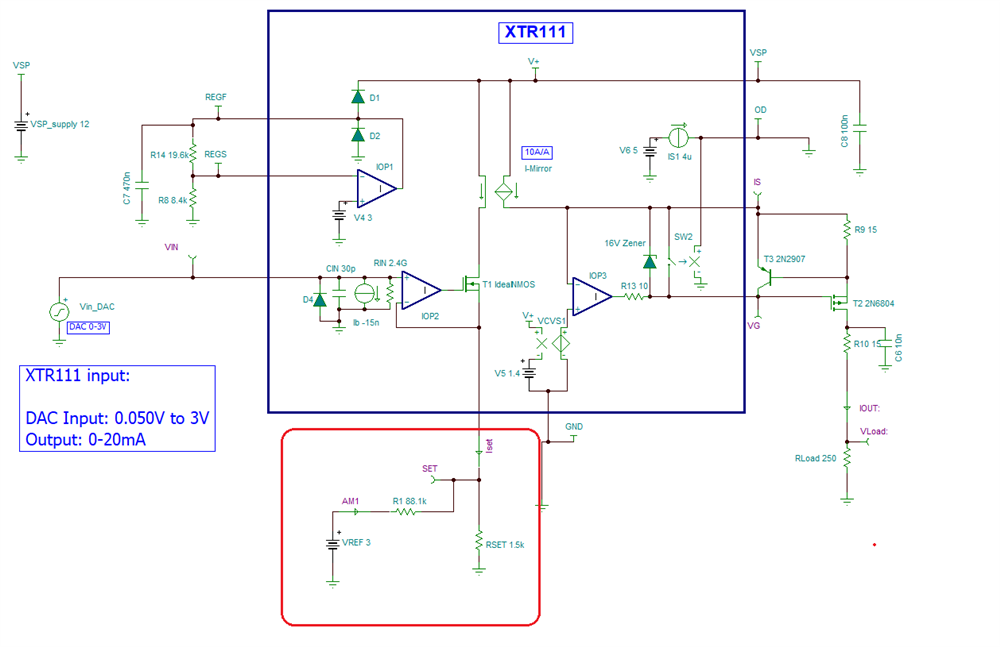

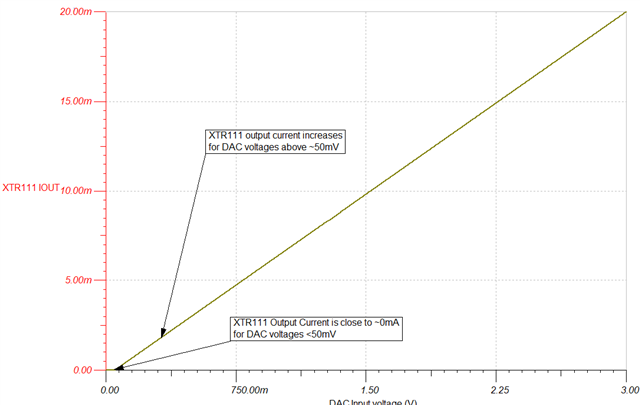

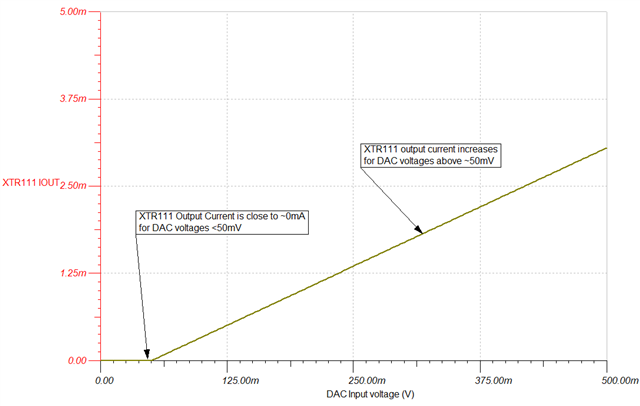

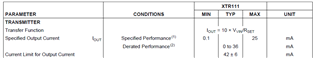

I want to use the XTR111 to convert a 0 V to 3 V input voltage (Vvin) into a 0 mA to 20 mA output current.

We have the following queries:

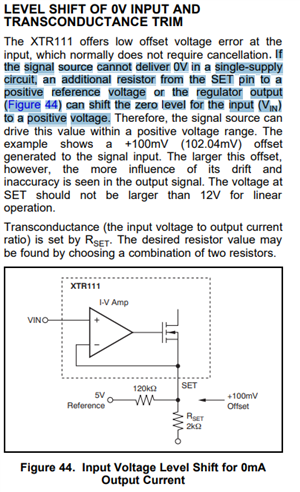

1. The datasheet specifies the output current range as 0.1 mA to 25 mA. Is it possible to get 0 mA at the output with a 0 V input?

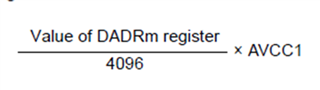

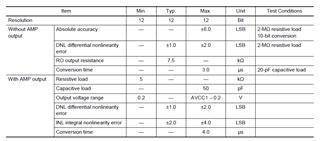

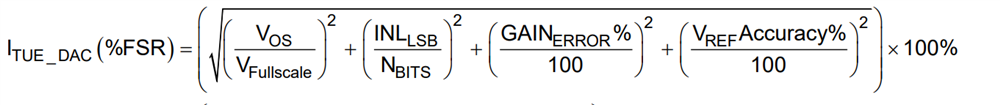

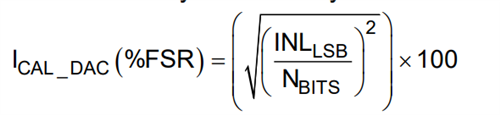

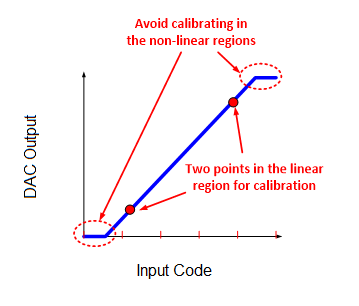

2. In our application we use the microcontroller's built-in DAC, which, because of its accuracy, can output up to 0.0466 V instead of 0 V. This produces a nonzero current at the XTR111 output. Is it possible to minimize this error so that the output starts at 0 mA?
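
For reference, here is a rough estimate of the error in query 2. It is only a sketch: it assumes the XTR111 datasheet transfer function I_OUT = 10 × Vvin / R_SET and an R_SET chosen so that 3 V gives 20 mA. The function and variable names are illustrative and not taken from any TI tool.

```python
# Assumption: XTR111 transfer function I_OUT = 10 * V_VIN / R_SET
# (current-mirror gain of 10, as given in the datasheet).
GAIN = 10.0


def rset_for_full_scale(v_full_scale, i_full_scale):
    """Return R_SET in ohms so that v_full_scale (V) yields i_full_scale (A)."""
    return GAIN * v_full_scale / i_full_scale


def output_current(v_in, r_set):
    """Return the ideal output current in amps for input v_in (V)."""
    return GAIN * v_in / r_set


# 3 V full scale mapped to 20 mA
r_set = rset_for_full_scale(3.0, 0.020)

# Worst-case DAC zero-scale output of 0.0466 V instead of 0 V
i_zero = output_current(0.0466, r_set)

print(f"R_SET = {r_set:.0f} ohm")
print(f"Zero-scale output current = {i_zero * 1e3:.3f} mA")
```

Under these assumptions R_SET comes out at about 1.5 kΩ and the DAC's zero-scale voltage alone gives roughly 0.31 mA at the output, before any offset of the XTR111 itself is considered.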

-- Nikhil