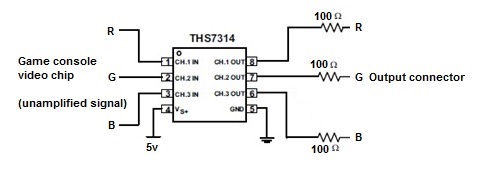

I was hoping someone here could help me. I currently run a website dedicated to retro video games. A few of the pages on my site contain how-to guides for adding a THS7314 to older game consoles to amplify their RGB output. My guides basically describe:

RGB out from the game console's video encoder chip -> THS7314 -> resistors -> display

Your average person following these guides will run the video output through a video switch (along with their other game consoles) and into either a CRT RGB monitor (such as a Sony PVM), video processor (that converts the signal to VGA or HDMI) or the SCART RGB input of a PAL display.

When I first wrote these guides, 75k ohm seemed to be the recommended resistor value. In testing, however, the output of the modified consoles was brighter than that of unmodified consoles (using their stock RGB out). I switched the guides to recommend 100k ohm resistors and everything has been perfect ever since: the brightness is exactly the same as on unmodified consoles and the picture quality is perfect. Also, I haven't found (or been notified of) any issues with the THS7314 + 100k ohm resistor combination in any scenario.
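To illustrate why the resistor value affects brightness, here is a rough sketch of the voltage divider formed by the series resistor and the display's input termination. It rests on assumptions that are not stated above: that the THS7314 applies a fixed gain of 2 V/V (6 dB), that the display terminates each line with 75 ohms, and that the resistor values are entered in plain ohms (75 and 100). The function name and parameters are made up for illustration, and kilo-ohm values fed into the same formula would attenuate the signal far more heavily.

```python
def level_at_display(v_in, r_series, r_load=75.0, gain=2.0):
    """Voltage across the display's termination for a given encoder output.

    Assumed model: amplifier with fixed gain, followed by a series resistor
    that forms a voltage divider with the display's input termination.
    All resistances are in ohms.
    """
    return v_in * gain * r_load / (r_series + r_load)


# Compare the two series resistor values for a normalized 1.0 V input.
for r in (75.0, 100.0):
    ratio = level_at_display(1.0, r)
    print(f"{r:.0f} ohm series: {ratio:.3f} x encoder level")
# prints:
# 75 ohm series: 1.000 x encoder level
# 100 ohm series: 0.857 x encoder level
```

Under these assumptions, a larger series resistor lowers the level the display sees, which would be consistent with the dimmer (stock-like) picture I get from the larger value.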

I was recently contacted by someone who claimed that using 100k ohm resistors instead of 75k ohm is bad. The person seemed extremely knowledgeable; however, I'd rather come here and ask TI and its experts directly: Will using resistors other than 75k ohm have any potential negative side effects for displays?

Sorry for the lengthy post, but I want to make sure I'm providing the proper information to my readers. Thanks in advance for any help you can provide.