Hi team,

My customer has some questions about the timing interval error spec, as follows.

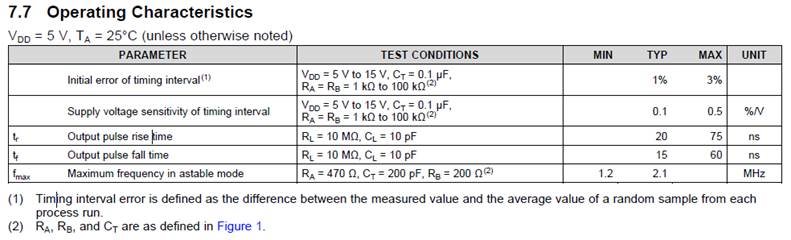

The datasheet states: "Timing interval error is defined as the difference between the measured value and the average value of a random sample from each process run."

- How many samples does this sentence assume (only 1 CLK cycle, or many)?

- If the sentence assumes 1 CLK cycle, what about 1000 CLK cycles?

The customer's understanding is as follows.

For example, when designing with a nominal CLK period of 1 sec:

CLK cycle: 0.99 sec → 1.01 sec → 0.99 sec → ... and so on. The average value is 1 sec, so the error of the clock as a whole is 0%, but the error per CLK cycle is 1%.

CLK cycle: 1.01 sec → 1.01 sec → 1.01 sec → ... and so on. The average value is 1.01 sec, so the error of the clock as a whole is 1%, but the error per CLK cycle is 0%.
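To make the two interpretations concrete, here is a minimal Python sketch of the arithmetic for the two sequences above. The function names and the 4-cycle sample length are my own assumptions for illustration, and neither metric is confirmed to be the datasheet's definition; that is exactly what I am asking about.

```python
from statistics import mean

NOMINAL_PERIOD_S = 1.0  # design target from the example (assumed nominal)


def average_error_pct(periods, nominal=NOMINAL_PERIOD_S):
    """Error of the sample's average period relative to nominal, in percent."""
    return (mean(periods) - nominal) / nominal * 100.0


def per_cycle_error_pct(periods):
    """Deviation of each cycle from the sample's own average, in percent."""
    avg = mean(periods)
    return [(p - avg) / avg * 100.0 for p in periods]


# Hypothetical 4-cycle samples matching the two sequences in the example.
case_a = [0.99, 1.01, 0.99, 1.01]  # alternating around nominal
case_b = [1.01, 1.01, 1.01, 1.01]  # constant offset from nominal

for name, periods in (("A", case_a), ("B", case_b)):
    worst = max(abs(e) for e in per_cycle_error_pct(periods))
    print(f"case {name}: average error = {average_error_pct(periods):.2f}%, "
          f"worst per-cycle error = {worst:.2f}%")
```

In this sketch, case A gives an average error of about 0% with a per-cycle error of about 1%, and case B gives an average error of about 1% with a per-cycle error of 0%, which matches the customer's two readings.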

Which of these interpretations is correct?

I'm looking forward to hearing back from you.

Best regards,

Shota Mago