As per the following PLL chip noise formulas:

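$$\mathrm{PLL}_{\mathrm{flat}} = \mathrm{PLL}_{\mathrm{FOM}} + 20\log_{10}\left(\frac{F_{\mathrm{vco}}}{F_{\mathrm{pd}}}\right) + 10\log_{10}\left(\frac{F_{\mathrm{pd}}}{1\,\mathrm{Hz}}\right)$$

$$\mathrm{PLL}_{\mathrm{flicker}}(\mathrm{offset}) = \mathrm{PLL}_{\mathrm{flicker,Norm}} + 20\log_{10}\left(\frac{F_{\mathrm{vco}}}{1\,\mathrm{GHz}}\right) - 10\log_{10}\left(\frac{\mathrm{offset}}{10\,\mathrm{kHz}}\right)$$

$$\mathrm{PLL}_{\mathrm{Noise}} = 10\log_{10}\left(10^{\mathrm{PLL}_{\mathrm{flat}}/10} + 10^{\mathrm{PLL}_{\mathrm{flicker}}/10}\right)$$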
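To make the point concrete, the formulas above can be evaluated directly. This is a minimal sketch that transcribes them into Python; the example values for PLL_FOM, the normalized flicker term, Fvco and Fpd are hypothetical placeholders, not the values used in the simulation. The only inputs are the FOM, flicker normalization, Fvco, Fpd and the offset; no loop-filter component such as R2 appears anywhere.

```python
import math


def pll_flat(pll_fom, f_vco_hz, f_pd_hz):
    """Flat in-band PLL noise referred to the VCO frequency, in dBc/Hz."""
    return (pll_fom
            + 20 * math.log10(f_vco_hz / f_pd_hz)
            + 10 * math.log10(f_pd_hz / 1.0))  # Fpd normalized to 1 Hz


def pll_flicker(pll_flicker_norm, f_vco_hz, offset_hz):
    """1/f (flicker) PLL noise at a given offset, in dBc/Hz."""
    return (pll_flicker_norm
            + 20 * math.log10(f_vco_hz / 1e9)      # normalized to 1 GHz
            - 10 * math.log10(offset_hz / 10e3))   # normalized to 10 kHz offset


def pll_noise(pll_fom, pll_flicker_norm, f_vco_hz, f_pd_hz, offset_hz):
    """Power sum of the flat and flicker terms, in dBc/Hz.

    No loop-filter component (R2 or any other) is an input here.
    """
    flat = pll_flat(pll_fom, f_vco_hz, f_pd_hz)
    flicker = pll_flicker(pll_flicker_norm, f_vco_hz, offset_hz)
    return 10 * math.log10(10 ** (flat / 10) + 10 ** (flicker / 10))


if __name__ == "__main__":
    # Hypothetical example values, not taken from the simulation in this post.
    FOM = -227.0           # dBc/Hz
    FLICKER_NORM = -120.0  # dBc/Hz
    F_VCO = 2e9            # Hz
    F_PD = 10e6            # Hz
    for offset in (1e3, 10e3, 100e3, 1e6):
        pn = pll_noise(FOM, FLICKER_NORM, F_VCO, F_PD, offset)
        print(f"{offset / 1e3:>7.0f} kHz: {pn:7.1f} dBc/Hz")
```

Changing any loop-filter value cannot change what these functions return, because no loop-filter value is passed to them.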

According to the formulas above, PLL_Noise (the contribution of the PLL chip alone) has no loop-filter component as a variable. Why, then, does the simulated phase noise of the PLL chip alone change when only the loop filter is changed?

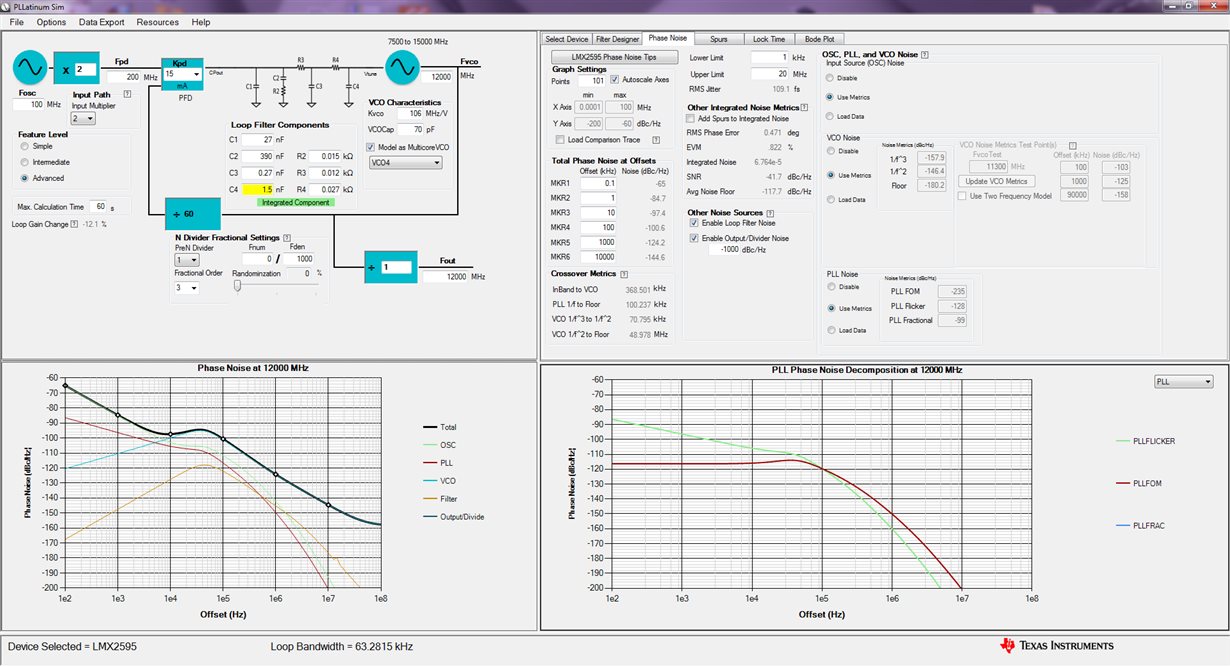

Case 1: R2 = 15 Ω. The PLL_FOM curve shows phase noise of -115 dBc/Hz at 1 kHz offset, -115 dBc/Hz at 10 kHz, -120 dBc/Hz at 100 kHz, and -150 dBc/Hz at 1 MHz. (Plot attached)

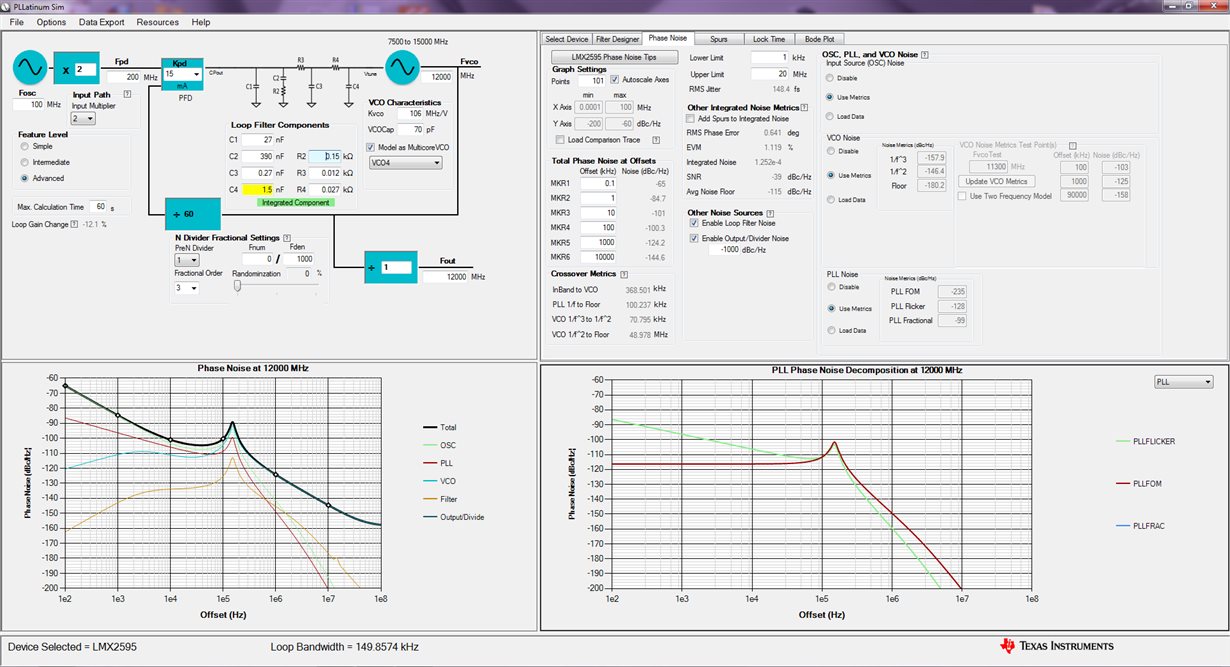

Case 2: R2 = 150 Ω. The PLL_FOM curve shows phase noise of -115 dBc/Hz at 1 kHz offset, -115 dBc/Hz at 10 kHz, -110 dBc/Hz at 100 kHz, and -150 dBc/Hz at 1 MHz, with a kink up to -100 dBc/Hz around 130 kHz. (Plot attached)
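Lining up the readings quoted for the two cases shows where they actually differ: they agree at 1 kHz, 10 kHz and 1 MHz and diverge only at 100 kHz. This sketch simply encodes the numbers stated above; the kink near 130 kHz in Case 2 has no matching Case 1 reading, so it is left out.

```python
# Readings (dBc/Hz) as quoted for the two PLL_FOM plots in this post.
OFFSETS_HZ = [1e3, 10e3, 100e3, 1e6]
CASE1_R2_15_OHM = [-115, -115, -120, -150]
CASE2_R2_150_OHM = [-115, -115, -110, -150]


def delta_db(case_a, case_b):
    """Per-offset change in dB from case_a to case_b (positive = noisier)."""
    return [b - a for a, b in zip(case_a, case_b)]


if __name__ == "__main__":
    for f, d in zip(OFFSETS_HZ, delta_db(CASE1_R2_15_OHM, CASE2_R2_150_OHM)):
        print(f"{f / 1e3:>7.0f} kHz: {d:+d} dB")
```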

Why should the PLL_FOM curve change when none of the variables in its formula are changing?