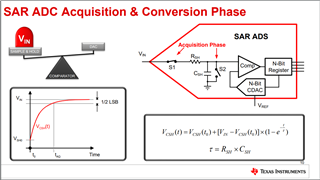

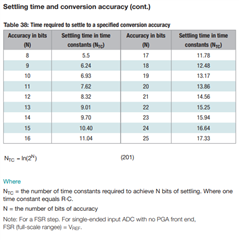

1. What is the maximum source impedance? Section 9.1.1 shows a 500 ohm resistor, a 26 pF capacitor, and a 4 pF input capacitance. If this combination must charge within 5 time constants during Tacq = 350 ns, then τ = 70 ns. With 30 pF total, the total resistance can be at most about 2.3 kΩ, so the source impedance can be at most about 1.8 kΩ. Are my assumptions correct?

2. Is the center pad connected to GND or floating?

3. Can the voltage on Vin exceed VA if the input current is limited? If so, what is the maximum allowed current?
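The arithmetic behind question 1 can be checked with a short script. This is a minimal sketch under the poster's own assumptions: the source resistance is in series with the 500 ohm resistor, both capacitances (26 pF + 4 pF) are lumped into one single-pole RC, and "settled" means 5 time constants within Tacq. It ignores any capacitance on the source side and charge sharing with an external filter capacitor. The function name `max_source_resistance` is hypothetical.

```python
import math

# Values as quoted in question 1 (from Section 9.1.1 as read by the poster)
R_INTERNAL = 500.0   # ohms, resistor shown in the sampling circuit
C_SAMPLE = 26e-12    # farads, sampling capacitor
C_INPUT = 4e-12      # farads, input capacitance
T_ACQ = 350e-9       # seconds, acquisition time
N_TAU = 5            # assumed number of time constants needed to settle


def max_source_resistance(t_acq, n_tau, c_total, r_internal):
    """Return (max total R, max source R) for a single-pole RC that must
    complete n_tau time constants within t_acq (hypothetical helper)."""
    tau = t_acq / n_tau            # largest allowed time constant
    r_total = tau / c_total        # tau = R_total * C_total
    return r_total, r_total - r_internal


r_total, r_source = max_source_resistance(T_ACQ, N_TAU, C_SAMPLE + C_INPUT, R_INTERNAL)
settled = 1 - math.exp(-N_TAU)     # fraction of the final value reached after N_TAU

print(f"total R = {r_total:.0f} ohm, max source R = {r_source:.0f} ohm, "
      f"settled to {settled * 100:.2f}%")
```

This reproduces the roughly 2.3 kΩ total and 1.8 kΩ source figures. Note that 5 time constants leaves about 0.67% settling error, so whether 5τ is sufficient depends on the converter's resolution; settling to within 1/2 LSB of an N-bit result needs about ln(2^(N+1)) time constants, which would lower the allowed source resistance.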