Hi,

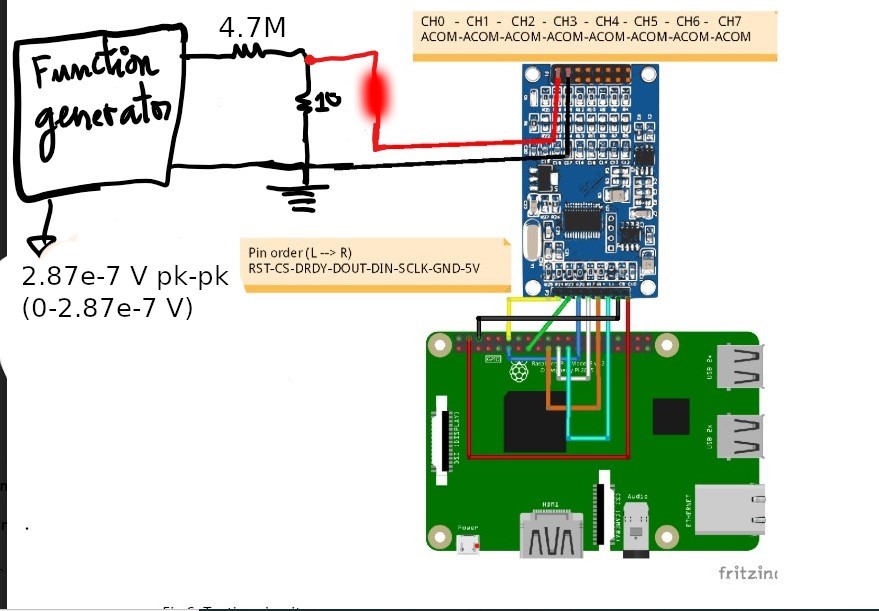

I generated a 2 Hz, 135 mV pk-pk sinusoidal voltage (min value = 0 V, max value = 135 mV) using a function generator (SWF-7000). I'm dividing the signal with a voltage divider made of a 10 Ohm resistor and a 4.7 MOhm resistor. The output signal is taken across the 10 Ohm resistor, so the new signal should be 135 mV * (10 / (10 + 4.7e6)) ≈ 2.8723e-7 V pk-pk (min value = 0 V, max value = 2.8723e-7 V). I'm sampling this 2.8723e-7 V pk-pk signal with an ADS1256 at 50 SPS, using the common mode, VREF = 2.5 V, PGA = 1 and the buffer OFF. The ADS1256 I'm using is mounted on the "High-Precision ADS1256 24-Bit 8 Channel ADC board" https://www.amazon.com/High-precision-ADS1256-Channel-Digital-Converter/dp/B072C2LY17. I have attached the schematic of the board provided by the manufacturer.
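For reference, the divider arithmetic above can be checked numerically, together with the size of one ADS1256 code step at these settings. This is a minimal sketch: it assumes the datasheet relation 1 LSB = 2·VREF / (PGA·(2^23 − 1)) for the ±2·VREF/PGA input range, and the helper names are hypothetical.

```python
# Hypothetical helpers for checking the numbers in the post.

def divider_output(v_in: float, r_top: float, r_bottom: float) -> float:
    """Voltage across r_bottom in a two-resistor divider driven by v_in."""
    return v_in * r_bottom / (r_top + r_bottom)


def ads1256_lsb(vref: float, pga: int) -> float:
    """Size of one output code step, assuming a full-scale range of
    +/-2*VREF/PGA spread over 2**23 - 1 positive codes (assumed from the datasheet)."""
    return 2.0 * vref / (pga * (2**23 - 1))


if __name__ == "__main__":
    v_pp = divider_output(0.135, 4.7e6, 10.0)   # 135 mV pk-pk into 4.7 MOhm / 10 Ohm
    lsb = ads1256_lsb(vref=2.5, pga=1)          # VREF = 2.5 V, PGA = 1
    print(f"divider output: {v_pp:.4e} V pk-pk")
    print(f"1 LSB:          {lsb:.4e} V")
    print(f"signal / LSB:   {v_pp / lsb:.2f}")
```

Under the assumed LSB formula, this prints a divider output of about 2.8723e-07 V pk-pk, an LSB of about 5.9605e-07 V, and a signal-to-LSB ratio of about 0.48.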

I keep getting noisy readings whenever I try to read small signals on the order of microvolts. For some periods of time the signal is read properly, and at other times all I get is noise. I'm not sure why this is happening. I first thought the problem was with the SPI frequency, so I connected the DRDY pin to the oscilloscope; the frequency I read was 49.07 Hz, which is pretty close to the 50 Hz I'm expecting. Also, I'm not sure why the amplitude of the voltage on that pin is 2.64 V pk-pk (is this normal?). I thought the amplitude should be 5 V pk-pk since I'm supplying 5 V to the ADS1256.
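The DRDY comparison above can be expressed as a period and a percentage deviation from the configured data rate. A minimal sketch; `drdy_check` is a hypothetical helper, and 49.07 Hz is the oscilloscope reading shown in Fig 1.

```python
# Compare the measured DRDY frequency with the configured data rate.

def drdy_check(measured_hz: float, nominal_hz: float) -> tuple[float, float, float]:
    """Return (measured period in ms, nominal period in ms, deviation in %)."""
    measured_ms = 1000.0 / measured_hz
    nominal_ms = 1000.0 / nominal_hz
    deviation_pct = (nominal_hz - measured_hz) / nominal_hz * 100.0
    return measured_ms, nominal_ms, deviation_pct


if __name__ == "__main__":
    meas, nom, dev = drdy_check(49.07, 50.0)
    # prints: measured period 20.38 ms, nominal 20.00 ms, 1.86% slow
    print(f"measured period {meas:.2f} ms, nominal {nom:.2f} ms, {dev:.2f}% slow")
```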

The noise I'm talking about can be seen in the second image. For some periods of time the shape of the signal (sinusoidal) matches the expected signal, but then I get noise for a few seconds before it returns to normal. I'm not sure why I'm getting these noisy intervals.
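One way to locate the noisy intervals in a capture like Fig 2 is to split the samples into one-second windows (50 samples at 50 SPS) and flag windows whose standard deviation is far above that of a typical window. This is a hedged sketch: `noisy_windows` and the factor of 3 are hypothetical choices, not something from the ADS1256 documentation, and the samples are assumed to be already converted to volts. The demo uses synthetic data, not the actual capture.

```python
import math
import statistics


def noisy_windows(samples: list[float], window: int, factor: float = 3.0) -> list[int]:
    """Return indices of non-overlapping windows whose population standard
    deviation exceeds `factor` times the median window standard deviation."""
    stds = [
        statistics.pstdev(samples[i:i + window])
        for i in range(0, len(samples) - window + 1, window)
    ]
    baseline = statistics.median(stds)
    return [k for k, s in enumerate(stds) if s > factor * baseline]


if __name__ == "__main__":
    fs, f_sig, amp = 50, 2.0, 1.4e-7   # 50 SPS, 2 Hz, roughly half of 2.87e-7 V pk-pk
    clean = [amp * math.sin(2 * math.pi * f_sig * n / fs) for n in range(5 * fs)]
    # Synthetic burst: add a large alternating component during the third second.
    burst = list(clean)
    for n in range(2 * fs, 3 * fs):
        burst[n] += 10 * amp if n % 2 else -10 * amp
    print(noisy_windows(burst, window=fs))  # prints [2]
```

Using the median as the baseline keeps a few noisy windows from inflating the threshold they are compared against.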

Let me know if you need more information regarding this problem.

Thanks

Fig 1. Oscilloscope reading of the DRDY pin (yellow trace) at a data rate of 50 SPS

Fig 2. Output Voltage on channel 0

Fig 3. Circuit Set up