Part Number: SN75C185

I have a customer currently evaluating the SN75C185 for an RS-232 application.

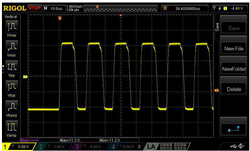

They observed a difference in the length of time a single bit was HIGH or LOW, as shown in the attached image.

The waveform was measured at the output of the RS-232 driver IC.

- Baud rate = 115200 bps

- The bit period is calculated as 8.7 µs (1 / 115200 bps); the HIGH time is ~1.2 µs too long, while the LOW time is ~1.2 µs too short

From the datasheet, tPLH (L->H propagation delay) is 1.2 µs typical, while tPHL (H->L propagation delay) is 2.5 µs typical.

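From this, it makes sense that the "HIGH" time would be longer than the "LOW" time: the falling edge is delayed more than the rising edge, so each HIGH pulse is stretched by roughly tPHL - tPLH (about 1.3 µs with the typical values), which is close to the ~1.2 µs we measured.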
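To make the arithmetic explicit, here is a rough sketch of the expected pulse widths. It assumes the tPLH and tPHL values quoted above refer to the driver output transitions, and that an isolated HIGH bit begins with a rising output edge and ends with a falling one; the function names are my own, for illustration only.

```python
# Values taken from this post; typical datasheet figures as quoted above.
BAUD_RATE = 115_200   # bits per second
T_PLH_US = 1.2        # L->H propagation delay, microseconds
T_PHL_US = 2.5        # H->L propagation delay, microseconds


def bit_period_us(baud):
    """Ideal width of one bit in microseconds."""
    return 1e6 / baud


def pulse_widths_us(baud, t_plh_us, t_phl_us):
    """Estimate output HIGH and LOW widths for a single isolated bit.

    A HIGH bit starts on a rising edge (delayed by tPLH) and ends on a
    falling edge (delayed by tPHL), so it is stretched by tPHL - tPLH.
    A LOW bit is shortened by the same amount.
    """
    period = bit_period_us(baud)
    skew = t_phl_us - t_plh_us
    return period + skew, period - skew


period = bit_period_us(BAUD_RATE)
high, low = pulse_widths_us(BAUD_RATE, T_PLH_US, T_PHL_US)
distortion_pct = 100.0 * (T_PHL_US - T_PLH_US) / period

print(f"bit period : {period:.2f} us")
print(f"HIGH width : {high:.2f} us")
print(f"LOW width  : {low:.2f} us")
print(f"distortion : {distortion_pct:.1f} % of one bit")
```

With the typical values this predicts a skew of about 1.3 µs per edge pair, in line with the measured ~1.2 µs.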

However, from the standpoint of the RS-232-C standard, is this behavior (the observed variation in HIGH/LOW time relative to the bit period at this baud rate) acceptable?