I came across earlier discussions about the general-purpose timers on these boards. Those threads are now locked, so I cannot reply to any of them, and I am starting a new discussion because we appear to be stuck with no way of making this work.

I have read everything possible on the subject, and am still left wondering, "How could they have gotten even the most basic functionality wrong?" Hear me out.

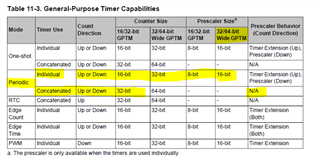

All we need to do is the following: from an 80 MHz system clock, we need a 32-bit register that counts (or ticks, however you want to express it) at a rate of 1 MHz. This means the LSB of the timer should increment once for every 1 usec of elapsed time. A conventional timer prescaler would be set to 80, so that the counter increments once every 80 system clock cycles.

We then need to be able to read the raw value of the timer throughout the lifecycle of the program, even if it wraps around. Unsigned integer math handles the wraparound easily, as long as the counter wraps at most once between two readings. We never have a delay longer than roughly 1 hr 11 min (the full span of a 32-bit counter running at 1 MHz, about 4295 seconds), so this has been doable on any other processor on the market. That is it. Nothing could be simpler than that.

I have nothing against TivaWare, the libraries, or the effort that went into them, and I do not want this conversation devolving into a discussion about programming style. I would prefer to use the TivaWare libraries rather than access the registers directly, but I will gladly use the registers if TivaWare does not natively support this. Just tell us how this can be achieved. Please.
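To make the wraparound claim concrete, here is a minimal sketch in plain C. It assumes nothing about the hardware beyond a free-running 32-bit up-counter at 1 MHz; the function names `elapsed_us`, `counter_period_seconds`, and `elapsed_us_self_check` are made up for illustration and are not TivaWare calls.

```c
#include <assert.h>
#include <stdint.h>

/* Microseconds between two raw readings of a free-running 32-bit
 * up-counter ticking at 1 MHz (assumed). Unsigned subtraction is done
 * modulo 2^32, so the result is still correct if the counter wrapped
 * once between the two readings. */
static uint32_t elapsed_us(uint32_t start, uint32_t now)
{
    return (uint32_t)(now - start);
}

/* Full span of the counter in whole seconds: 2^32 ticks at 1 MHz. */
static uint32_t counter_period_seconds(void)
{
    const uint64_t ticks = (uint64_t)UINT32_MAX + 1u;
    return (uint32_t)(ticks / 1000000u);
}

/* Wrap case: going from 0xFFFFFFF0 to 0x00000010 is 32 ticks. */
static void elapsed_us_self_check(void)
{
    assert(elapsed_us(0xFFFFFFF0u, 0x00000010u) == 32u);
}
```

The period works out to 4294 whole seconds, a little under 72 minutes, which is where the upper bound on a single measurable delay comes from.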

We have no need for interrupts, and certainly do NOT want to configure a SysTick timer to interrupt our processor 1 million times per second just to increment a counter value in software. That approach, which TI has suggested on other threads, is absolute madness. Interrupts are absolutely 100% out of the question. Multiplying and scaling every read by querying the current system clock (or programmatically specifying it instead) is also absolutely out of the question. The timer should be configurable to WHATEVER resolution and time base you choose. Otherwise, small delays of 1 usec or thereabouts would needlessly incur the overhead of those computations. All I would like to do is read the current value of the 1 MHz counter in software, subtract a previously known value from it (one clock cycle), and compare the result against a duration (also one clock cycle), with none of this multiplication and division scaling crap to arrive at an answer. Ok?
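For reference, this is roughly the usage pattern being asked for, as a hedged sketch in plain C. The counter source is passed in as a function pointer because how to obtain such a counter on this part is exactly the open question; `counter_read_fn`, `has_elapsed`, `delay_us`, and the `fake_counter_*` stand-in are all hypothetical names, and the fake counter exists only so the sketch runs on a host.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical hook: returns the raw value of a free-running 1 MHz counter. */
typedef uint32_t (*counter_read_fn)(void);

/* One subtraction and one comparison, no scaling: true once at least
 * duration_us ticks have passed since start (wraparound-safe). */
static bool has_elapsed(uint32_t start, uint32_t now, uint32_t duration_us)
{
    return (uint32_t)(now - start) >= duration_us;
}

/* Polling delay: no interrupts, no multiplication or division. */
static void delay_us(counter_read_fn read_counter, uint32_t duration_us)
{
    assert(read_counter != NULL);
    const uint32_t start = read_counter();
    while (!has_elapsed(start, read_counter(), duration_us)) {
        /* spin until the raw counter has advanced far enough */
    }
}

/* Host-side stand-in for the hardware: advances one tick per read and
 * starts just below the wrap point to exercise the overflow case. */
static uint32_t fake_counter_value = 0xFFFFFFFEu;

static uint32_t fake_counter_read(void)
{
    return fake_counter_value++;
}
```

On real hardware the only piece that would change is the function passed as `read_counter`; the comparison logic stays the same regardless of where the 1 MHz count comes from.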

I would like someone to explain to me, in very simple terms and in no uncertain terms, how this is possible on a TM4C processor of any flavor using the so-called general-purpose timers.