Other Parts Discussed in Thread: C2000WARE

Hello,

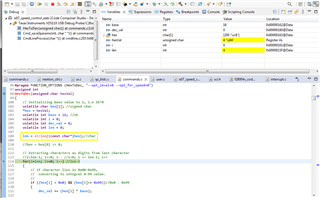

C functions that work correctly on the ARM CPU produce wildly wrong values on the x49c CPU, even after adding the #pragma shown in the code below. Oddly, the call to strln() reports 4 characters when only 2 exist in the header bytes passed in. This is not debug related, since the function still fails to produce correct hex-to-decimal conversions outside of debug. I have also tested strtol() for the same conversions; it does not convert hexadecimal characters to base-16 integers as it should, which seems related to the same strln() issue.
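For comparison, here is a minimal sketch of the behavior I expect from the standard library on any target. It assumes the strln() mentioned above is the standard strlen(), and `parse_hex_field` is a hypothetical name used only for illustration, not a function from my project.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: parse a NUL-terminated hex field with strtol()
 * and report the length strlen() sees for the same buffer. */
long parse_hex_field(const char *field, size_t *len_out)
{
    char *end = NULL;

    /* Base 16 tells strtol() to treat the digits as hexadecimal. */
    long value = strtol(field, &end, 16);

    if (len_out != NULL) {
        /* Counts characters up to, but not including, the terminating NUL. */
        *len_out = strlen(field);
    }
    return value;
}
```

With the two-character input "C8", I would expect a value of 200 and a length of 2. A length of 4 suggests the buffer does not hold one character per `char` element followed by a NUL, but that is only a guess on my part.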

I also modified hex2dec() below in several ways to get correct conversions. The only nearly correct result requires multiplying the base-16 input hex character by decimal 10, and even then 0xC8 (200 decimal) is converted to 238 decimal, which is not the correct answer.
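To make the expected arithmetic explicit, this is a minimal reference sketch of the conversion in portable C. `hex2dec_ref` is a hypothetical name and not the hex2dec() from my project. The 8-bit mask is an assumption, added in case `char` is wider than 8 bits on the target, and the digit math assumes an ASCII character set.

```c
/* Hypothetical reference helper, not the project's hex2dec().
 * Converts a NUL-terminated string of hex digits to an unsigned long.
 * Stops at the first non-hex character; returns 0 for an empty string. */
unsigned long hex2dec_ref(const char *s)
{
    unsigned long value = 0;

    for (; *s != '\0'; ++s) {
        /* Assumption: keep only the low 8 bits of each element. */
        unsigned int c = (unsigned int)*s & 0xFFu;
        unsigned int digit;

        if (c >= '0' && c <= '9') {
            digit = c - '0';
        } else if (c >= 'a' && c <= 'f') {
            digit = c - 'a' + 10u;
        } else if (c >= 'A' && c <= 'F') {
            digit = c - 'A' + 10u;
        } else {
            break; /* not a hex digit */
        }

        /* Each new digit moves the previous result up one hex place. */
        value = value * 16u + digit;
    }
    return value;
}
```

For "C8" this works out as 12 * 16 + 8 = 200, so each step multiplies by 16 and never by 10.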

Oddly, debug stepping with F5, F6, and F7 is extremely slow, whereas the TM4C129x Stellaris debug probe (8 MHz) speeds through the same C functions shown below. Why would the XDS110, a more advanced debug probe than the XDS100v2, behave worse when it should behave better? Even at 8.5 MHz it does not.

Stepping into strln() with the XDS110 probe takes a ridiculous amount of time in the do-while loop under CCS v12.2.

x49c: TI v21.6.1.LTS compiler

TM4C129 ARM Cortex M4: TI v20.2.6.LTS compiler