
Hi,

I am using SDK 8.2.

Problem phenomenon:

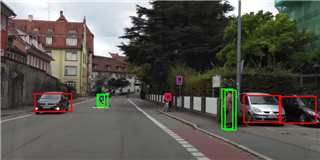

Using the 8bit method to quantify the yolox model will cause the large target detection position becomes smaller

Experiments so far:

I have trained a variety of YOLOX models, and all of them show a similar problem to varying degrees: the detected boxes of large targets become smaller.

1. Using 16-bit quantization improves the result, but the increase in inference time is too large for the project to accept.

2. I investigated using the Troubleshooting Guide for Accuracy/Functional Issues provided by TI, but have not been able to locate the specific problem.

3. I tried the methods suggested in TIDL-RT: Quantization (excluding QAT), and the results still show the large-target boxes becoming smaller.

I hope TI can offer better suggestions or a solution.

8-bit result:

16-bit result:

We use mixed precision to reduce the quantization accuracy loss for object detection layers.

See an example here: https://github.com/TexasInstruments/edgeai-benchmark/blob/master/configs/detection.py#L314

We have seen that moving the first and last convolution layers to 16 bit removes most of the accuracy loss. Such layers can be specified using the parameter 'advanced_options:output_feature_16bit_names_list'.

(Here I am referring to our onnxruntime-tidl, which offloads supported layers to TIDL; this is what edgeai-benchmark uses.) TIDL-RT should have a similar parameter for specifying the layers to be put in 16 bit; you can find the details in the TIDL documentation.
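As a rough sketch of how such a mixed-precision option set might be assembled for onnxruntime-tidl: the helper name and the layer names below are hypothetical placeholders, and the assumption that the option takes a comma-separated string of output names should be checked against the documentation for your SDK version.

```python
def mixed_precision_options(layers_16bit, tensor_bits=8):
    """Build a compile-option dict: everything at `tensor_bits`,
    except the listed layer outputs, which are promoted to 16 bit.

    Assumption: the names list is passed as one comma-separated string.
    """
    return {
        "tensor_bits": tensor_bits,
        "advanced_options:output_feature_16bit_names_list": ",".join(layers_16bit),
    }


# Hypothetical output names: the first convolution plus the last
# convolution of each detection branch. Replace with names from your model.
first_and_last = ["first_conv_out", "branch0_last_out", "branch1_last_out"]

options = mixed_precision_options(first_and_last)
print(options)
```

The resulting dict would be merged into whatever other compile options the model already uses; only the two keys shown here relate to mixed precision.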

Thanks,
Manu

I had previously experimented with mixed precision at some specific detection layers, and I also found that it improves the problem. However, it increases inference time by about 3~4 ms, which is unacceptable for our application.

Try putting only the first convolution layer and the last convolution layers (the last convolution in every branch) in 16 bit. This should not increase the complexity significantly.

Hello Chao Yang,

I am working on the TDA4 EVM.

We want to run YOLOX on our EVM. Could you help us?

We have already completed the YOLOv5 application.

Thanks a lot!

Option 1: (simplest)

You can use https://github.com/TexasInstruments/edgeai-modelmaker

It's very simple to use this repository.

Option 2:

The other option is to train the model yourself using https://github.com/TexasInstruments/edgeai-mmdetection

and compile it using this example: github.com/.../benchmark_custom.py

Hello Manu,

My problem is as follows:

We want to know how to use it on the EVM.

I have converted the ONNX file to the yolox_net.bin and yolox_io.bin files.

Thanks a lot!

By the way, you can also try setting quantizationStyle = 3 (based on yolox_m_ti_lite_45p5_64p2.onnx and yolox_m_ti_lite_metaarch.prototxt from github.com/.../edgeai-yolox).

The following images show the results for the different values of quantizationStyle. I set confidence_threshold = 0.4 in yolox_m_ti_lite_metaarch.prototxt.

quantizationStyle = 2

quantizationStyle = 3

The import config file is as follows:

    modelType = 2
    numParamBits = 8
    numFeatureBits = 8
    quantizationStyle = 3
    #quantizationStyle = 2
    inputNetFile = "../../test/testvecs/models/public/onnx/yolox_m_ti_lite_45p5_64p2.onnx"
    outputNetFile = "../../test/testvecs/config/tidl_models/onnx/yolox_m_ti_lite_45p5_64p2/tidl_net_yolox_m_ti_lite_45p5_64p2.bin"
    outputParamsFile = "../../test/testvecs/config/tidl_models/onnx/yolox_m_ti_lite_45p5_64p2/tidl_io_yolox_m_ti_lite_45p5_64p2_"
    inDataNorm = 1
    inMean = 0 0 0
    inScale = 1.0 1.0 1.0
    inDataFormat = 1
    inWidth = 640
    inHeight = 640
    inNumChannels = 3
    numFrames = 1
    inData = "../../test/testvecs/config/detection_list.txt"
    perfSimConfig = ../../test/testvecs/config/import/device_config.cfg
    inElementType = 0
    #outDataNamesList = "convolution_output,convolution_output1,convolution_output2"
    metaArchType = 6
    metaLayersNamesList = "../../test/testvecs/models/public/onnx/yolox_m_ti_lite_metaarch.prototxt"
    postProcType = 2
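To compare the two quantizationStyle settings without hand-editing the file each time, a small script along these lines could generate both variants. This is only a sketch: the helper names are hypothetical, and it assumes the import config is a flat list of `key = value` lines with `#` marking comments, as in the file above.

```python
def parse_import_config(text):
    """Parse 'key = value' lines, skipping blank lines and '#' comments."""
    params = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        params[key.strip()] = value.strip()
    return params


def with_overrides(params, **overrides):
    """Return a copy of the parameters with some values replaced."""
    merged = dict(params)
    merged.update({key: str(value) for key, value in overrides.items()})
    return merged


def render(params):
    """Serialize the parameters back to 'key = value' lines."""
    return "\n".join(f"{key} = {value}" for key, value in params.items())


# Shortened excerpt of the config above, used here only for illustration.
base = parse_import_config("""
modelType = 2
numParamBits = 8
numFeatureBits = 8
quantizationStyle = 3
#quantizationStyle = 2
""")

style2 = with_overrides(base, quantizationStyle=2)
print(render(style2))
```

Each rendered variant can then be written to its own file and passed to the import tool, so that the two runs differ only in quantizationStyle.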

Good luck!

Hi Manu,

"Try putting only the first convolution layer and the last convolution layers (the last convolution in every branch) in 16 bit. This should not increase the complexity significantly."

I tried this method, but it does not solve the problem I am having.

Currently I do the following:

I use 16 bit for the position convolutions in the three branches of YOLOX.

outputFeature16bitNamesList = "1203,1206,1238,1233,1236,1279,1218,1221,1258"

I found that this method improves the problem, but it increases inference time by about 3 ms.