The compiled yolov5 is deployed on the TDA4, however, it can not output the correct result.

In comparison, this model can output correct results in TIDL simulation inferring on my server.

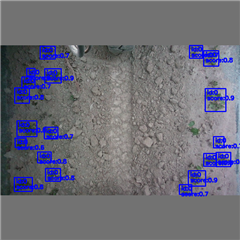

The two images below show the outcome between TIDL simulation and TDA4 inferring, using the same image.