Hi,

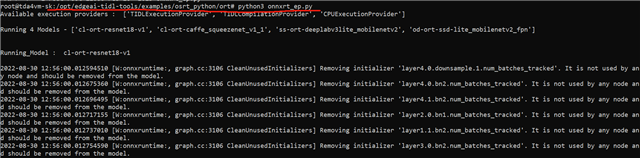

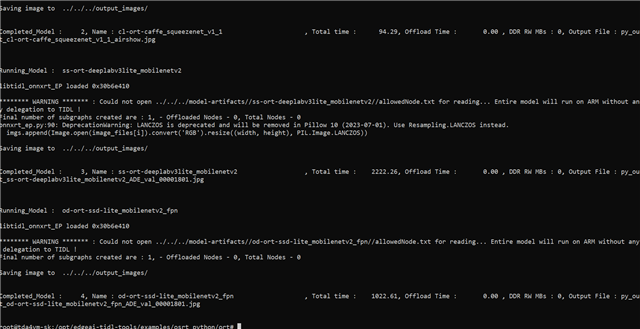

My SDK version is Processor SDK Linux for Edge AI 08.04.00. The model compiles successfully on the PC, and inference also runs normally on the EVM with this script:

[/opt/edgeai-tidl-tools/examples/osrt_python/ort/onnxrt_ep.py]

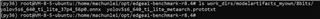

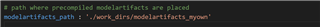

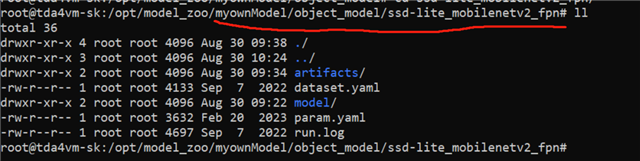

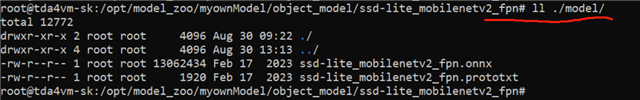

However, I want to run inference with the script [root@tda4vm-sk:/opt/edge_ai_apps/apps_python# ./app_edgeai.py ../configs/object_detection.yaml]. So I created a new folder under model_zoo and copied the od-ort-ssd-lite_mobilenetv2_fpn model into it.

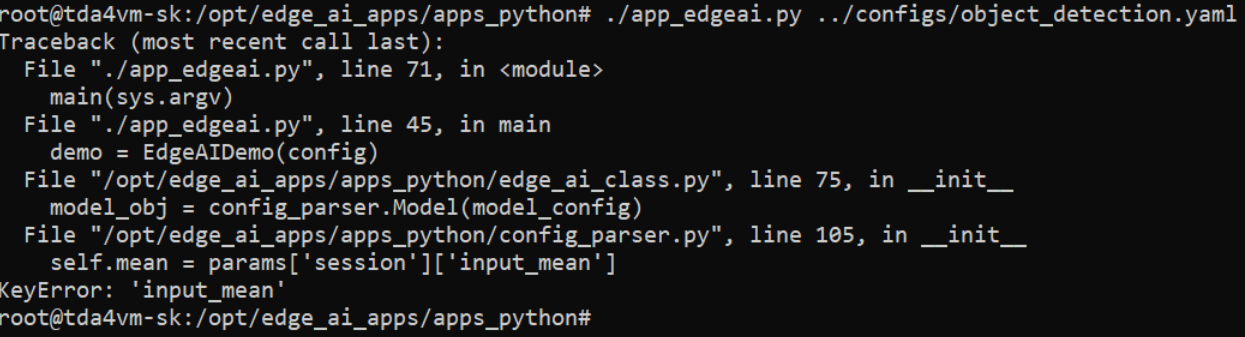

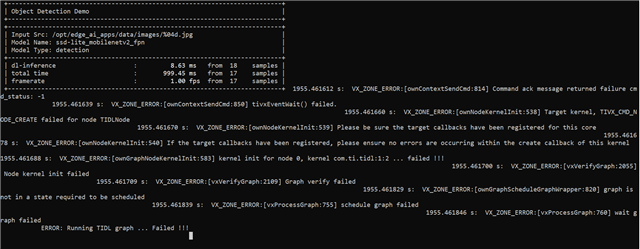

When I ran the script [./app_edgeai.py ../configs/object_detection.yaml], I got an error.

I found that my param.yaml file contains fewer fields than the param.yaml of the models shipped with the SDK.

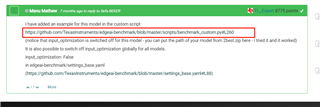

I manually modified my param.yaml file to follow the format of the param.yaml under [/opt/model_zoo/ONR-OD-8030-ssd-lite-mobv2-fpn-mmdet-coco-512x512]. The application now runs, but no detection boxes are shown.
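In case it helps to see exactly which fields differ, the two param.yaml files could be compared with a small script like the one below. This is only a rough sketch, not part of the SDK: it assumes PyYAML is installed, and the function names `missing_keys` and `load_params` are my own. It lists the keys that exist in the reference file but are absent from my file.

```python
import sys


def missing_keys(reference, candidate, prefix=""):
    """Return dotted paths of keys present in `reference` but absent in `candidate`."""
    missing = []
    for key, ref_value in reference.items():
        path = f"{prefix}.{key}" if prefix else str(key)
        if not isinstance(candidate, dict) or key not in candidate:
            # The key (or the whole parent mapping) is absent on my side.
            missing.append(path)
        elif isinstance(ref_value, dict):
            # Descend into nested sections of the reference file.
            missing.extend(missing_keys(ref_value, candidate[key], path))
    return missing


def load_params(path):
    """Load a YAML file into a dict (assumes PyYAML is available)."""
    import yaml  # imported lazily so missing_keys works without PyYAML

    with open(path) as f:
        return yaml.safe_load(f) or {}


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python3 <this script> <reference param.yaml> <my param.yaml>
    for key_path in missing_keys(load_params(sys.argv[1]), load_params(sys.argv[2])):
        print(key_path)
```

Running it with the SDK model's param.yaml as the first argument and my own param.yaml as the second prints one missing key path per line. It only checks which keys are present, not whether the values are right for my model.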

My questions:

1. How is the param.yaml file supposed to be obtained? Does it have to be modified manually?

2. How can I solve the problem shown in the last picture?

Thanks,

Maiunlei