


Hello:

After testing data exchange between the Linux system and the R5F core, we found that a complete read/write cycle using the RPMsg API provided by TI takes a long time. Because the exchange takes too long, the official TI API cannot meet our needs.

Can the A53 core running Linux and a bare-metal R5F core send interrupts to each other, so that each side can trigger the other to read and write data directly at the physical address of an agreed shared memory region? Specifically:

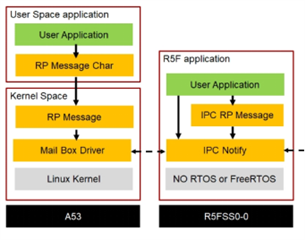

1) How can Linux on the A53 core send a signal to the R5F core, similar to IPC Notify between two R5F cores? Can the Linux mailbox driver on the A53 and IPC Notify on the R5F be used on their own to send interrupt signals to each other?

How to achieve this? Are there any relevant examples?

2) Suppose Linux on the A53 core and the bare-metal R5F core do not use RPMsg to send and receive data.

Instead, a shared MSRAM region between Linux and the R5F is used directly for data transfer: data is copied into the shared MSRAM region with memcpy, and both Linux and the R5F read and write it there.

Is this method feasible? How to achieve this? Are there any relevant examples?
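A minimal sketch of the memcpy-based idea, assuming a hypothetical single-slot layout that both cores agree on (`struct shm_slot`, `slot_publish()` and `slot_try_consume()` are illustrative names, not a TI API). One side copies a payload in and then sets a `full` flag with release ordering; the other side checks the flag with acquire ordering, copies the payload out, and clears the flag. Without an interrupt, the reader has to poll the flag. Two such slots, one per direction, would cover Linux-to-R5F and R5F-to-Linux traffic. Whether the MSRAM region is cached on the A53 or R5F side (and therefore needs a non-cacheable mapping or explicit cache maintenance) is a platform detail this sketch does not handle.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical layout agreed by both cores. 496 bytes is the payload size
 * mentioned in this thread; everything else is an illustrative assumption. */
#define SLOT_PAYLOAD_MAX 496u

struct shm_slot {
    _Atomic uint32_t full;              /* 0: writer owns payload, 1: reader owns it */
    uint32_t len;                       /* number of valid payload bytes */
    uint8_t payload[SLOT_PAYLOAD_MAX];
};

/* Writer side. Returns false if the payload is too large or the previous
 * message has not been consumed yet. */
static bool slot_publish(struct shm_slot *s, const void *data, uint32_t len)
{
    if (len > SLOT_PAYLOAD_MAX)
        return false;
    if (atomic_load_explicit(&s->full, memory_order_acquire) != 0u)
        return false;
    memcpy(s->payload, data, len);
    s->len = len;
    /* Release: payload and len are written before full == 1 can be observed. */
    atomic_store_explicit(&s->full, 1u, memory_order_release);
    return true;
}

/* Reader side, non-blocking so it can be called from a polling loop.
 * `out` must have room for SLOT_PAYLOAD_MAX bytes. */
static bool slot_try_consume(struct shm_slot *s, void *out, uint32_t *len)
{
    if (atomic_load_explicit(&s->full, memory_order_acquire) != 1u)
        return false;
    *len = s->len;
    memcpy(out, s->payload, *len);
    /* Release: finish reading the payload before handing it back to the writer. */
    atomic_store_explicit(&s->full, 0u, memory_order_release);
    return true;
}
```

The flag hand-off gives each buffer exactly one owner at a time, which avoids the torn reads a plain shared buffer would allow; the cost is that the writer must wait (or drop data) until the reader has consumed the previous message.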

Hello,

Double-checking on your tests

1) What do you mean by "it takes a long time to complete a complete read and write cycle"?

2) What kind of latencies are you seeing? What kind of latencies are you expecting?

3) Are you looking for a specific response latency, or do you care more about data throughput? If you only care about throughput, what throughput are you looking for?

4) Are you using RT Linux or regular Linux?

5) Are you willing to share your testing methodology with me to verify? You can send it offline through Tony if you prefer.

Briefly discussing your other questions

I want us to focus on answering the above questions first. However, here are very quick responses to your questions:

RPMsg IPC is the only form of IPC TI supports between Linux and remote cores (though we do also have a shared memory example where RPMsg is used as the notify mechanism here: https://git.ti.com/cgit/rpmsg/rpmsg_char_zerocopy/ ).

You could theoretically implement your own mailbox-only messaging between Linux and remote cores, but TI would not be able to support you there. There is not currently a way for the low-level Linux mailbox driver to communicate directly to Linux userspace.

Yes, shared memory on either DDR or SRAM is definitely possible. Access latencies to SRAM will be lower, though you will also have less space to use on SRAM. The zerocopy example linked above was implemented with DDR, but the same concepts would apply.
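On the Linux side, one common way to reach a physical memory region from userspace is to `mmap()` it through `/dev/mem`. The sketch below assumes that approach; it may be blocked by kernel settings such as `CONFIG_STRICT_DEVMEM`, and the physical address of the shared region is a placeholder you would take from your own memory map. `shm_map_region()` is a hypothetical helper whose main job is rounding the offset down to a page boundary, because `mmap()` requires a page-aligned offset. Opening `/dev/mem` with `O_SYNC` is often used to request an uncached mapping, but the resulting memory attributes are platform-dependent and should be verified.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>      /* open(), O_RDWR, O_SYNC for the caller */
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Result of mapping a region whose start may not be page aligned. */
struct shm_map {
    void  *base;     /* page-aligned address returned by mmap() */
    size_t map_len;  /* length actually mapped */
    void  *ptr;      /* address corresponding to the requested offset */
};

/* Map `len` bytes at byte offset `off` of an open fd (on the target, /dev/mem
 * with `off` being the physical address). Returns 0 on success, -1 on error. */
static int shm_map_region(int fd, off_t off, size_t len, struct shm_map *m)
{
    long page = sysconf(_SC_PAGESIZE);
    if (page <= 0 || off < 0)
        return -1;
    off_t aligned = off - (off % (off_t)page);   /* round down to a page boundary */
    size_t delta = (size_t)(off - aligned);
    m->map_len = len + delta;
    m->base = mmap(NULL, m->map_len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, aligned);
    if (m->base == MAP_FAILED)
        return -1;
    m->ptr = (uint8_t *)m->base + delta;
    return 0;
}

static void shm_unmap_region(struct shm_map *m)
{
    munmap(m->base, m->map_len);
}
```

On the target, usage might look like `fd = open("/dev/mem", O_RDWR | O_SYNC); shm_map_region(fd, SHM_PHYS_ADDR, 496, &m);`, where `SHM_PHYS_ADDR` is a hypothetical constant for the agreed region.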

Regards,

Nick

Hello Nick,

This problem is not entirely my responsibility; some of my colleagues are in charge of testing.

I asked for their input, and here is a brief answer.

The data size of each transfer between the R5F and Linux is 496 bytes. A GPIO on the R5F is used to measure the time, and the processing flow is:

gpio_High -> R5F/RPMessage_send() (non-blocking) -> Linux/read() (blocking) -> Linux/write() (blocking) -> R5F/RPMessage_recv() (blocking) -> gpio_Low

We observed how long the GPIO stays high. The exact time was not recorded, but if I remember correctly it was more than 100 us. I don't have time to rerun the test today.

Our expected time includes the execution time of the functions.

With 496 bytes of data exchanged, the entire exchange should take no more than 40 us:

Linux/writeToR5F() -> R5F/RPMessage_recv() (interrupt-driven reception on the R5F) -> R5F/RPMessage_send() -> Linux/readFromR5F(), total T < 40 us
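To compare against the 40 us target from the Linux side (complementing the GPIO measurement on the R5F), a sketch like the one below times the write-then-read sequence with `CLOCK_MONOTONIC` and keeps the minimum and maximum, since for a hard cycle time the worst case matters more than the average. It assumes the request goes out on one file descriptor and the reply comes back on another; with an rpmsg character device (something like `/dev/rpmsg0`, a hypothetical name that depends on how the endpoint is created) both would be the same fd. `time_one_exchange()` and `measure_exchanges()` are illustrative names.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <sys/types.h>
#include <time.h>
#include <unistd.h>

#define MSG_SIZE 496  /* payload size quoted in this thread */

struct rt_stats {
    int64_t min_ns, max_ns, total_ns;
    int count;
};

/* One exchange: write MSG_SIZE bytes to tx_fd, then block until MSG_SIZE bytes
 * come back on rx_fd. Returns elapsed nanoseconds, or -1 on error. */
static int64_t time_one_exchange(int tx_fd, int rx_fd,
                                 const uint8_t *req, uint8_t *reply)
{
    struct timespec t0, t1;
    clock_gettime(CLOCK_MONOTONIC, &t0);
    if (write(tx_fd, req, MSG_SIZE) != MSG_SIZE)
        return -1;
    size_t got = 0;
    while (got < MSG_SIZE) {  /* stream fds such as pipes may return short reads */
        ssize_t n = read(rx_fd, reply + got, MSG_SIZE - got);
        if (n <= 0)
            return -1;
        got += (size_t)n;
    }
    clock_gettime(CLOCK_MONOTONIC, &t1);
    return (int64_t)(t1.tv_sec - t0.tv_sec) * 1000000000 + (t1.tv_nsec - t0.tv_nsec);
}

/* Repeat the exchange `iterations` times and record min/max/total latency. */
static int measure_exchanges(int tx_fd, int rx_fd, int iterations, struct rt_stats *st)
{
    uint8_t req[MSG_SIZE], reply[MSG_SIZE];
    for (int i = 0; i < MSG_SIZE; i++)
        req[i] = (uint8_t)i;
    st->min_ns = INT64_MAX;
    st->max_ns = 0;
    st->total_ns = 0;
    st->count = 0;
    for (int i = 0; i < iterations; i++) {
        int64_t ns = time_one_exchange(tx_fd, rx_fd, req, reply);
        if (ns < 0)
            return -1;
        if (ns < st->min_ns) st->min_ns = ns;
        if (ns > st->max_ns) st->max_ns = ns;
        st->total_ns += ns;
        st->count++;
    }
    return 0;
}
```

Pointing both ends at a pipe gives a loopback baseline for the measurement overhead itself; any R5F processing and IPC time adds on top of that.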

We pay more attention to response latency, and the smaller the latency, the better.

We want to optimize the data transfer for payloads of about 400 bytes. Throughput and response latency pull against each other to some extent, and we need to pay attention to both at the same time.

The minimum payload is 300 bytes, and data must be exchanged between Linux and the R5F at 8 kHz; in other words, each exchange must complete within 125 us.
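The figures above fit together as follows (a quick consistency check using only the numbers quoted in this thread; "per direction" assumes one payload each way per cycle):

```latex
\begin{aligned}
T_{\text{cycle}} &= \frac{1}{f} = \frac{1}{8000\ \text{s}^{-1}} = 125\ \mu\text{s} \\
\text{throughput}_{300} &= 300\ \text{B} \times 8000\ \text{s}^{-1} = 2.4\ \text{MB/s per direction} \\
\text{throughput}_{496} &= 496\ \text{B} \times 8000\ \text{s}^{-1} = 3.968\ \text{MB/s per direction} \\
T_{\text{cycle}} - T_{\text{exchange}} &= 125\ \mu\text{s} - 40\ \mu\text{s} = 85\ \mu\text{s}
\end{aligned}
```

The last line is the time left in each cycle for everything else Linux has to do, if the exchange meets its 40 us target. The bandwidth figures are modest, which suggests the hard part of this requirement is the bounded per-cycle latency rather than the data rate.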

Regular Linux, with the Xenomai real-time patch applied.

The program runs in the real time domain.

5) I need to ask my colleagues whether they can provide this. Regarding question 1), that test was done some time ago, and I don't know whether the example still exists; we need to find it.

>You could theoretically implement your own mailbox-only messaging between Linux and remote cores, but TI would not be able to support you there.

Why can't TI support using the Linux mailbox driver this way?

Regards,

Huang

Hello Huang,

I am going to reply based on the information Tony shared with me offline:

Usecase

Linux is running a task that must complete within 125 us. The things that need to happen within that 125 us are:

1) Linux writes data to the R5F

2) the R5F processes that data

3) The R5F writes data back to Linux

4) (unclear) maybe Linux also has to do some tasks like sending data out a networking port?

Design feedback

Ok, let us take a step back. Before we try to do any optimization of RPMsg timings or IPC timings, we should talk about your design itself.

RPMsg is NOT appropriate if you have a hard cycle time of 125 us.

Even real-time (RT) Linux itself might not be a good fit for a design where the cycle time can NEVER take longer than 125 us. That is because RT Linux is NOT actually a real-time operating system. For more details, see https://e2e.ti.com/support/processors-group/processors/f/processors-forum/1085663/faq-sitara-multicore-system-design-how-to-ensure-computations-occur-within-a-set-cycle-time

JUST the worst case Linux interrupt response latency (that’s just the time for Linux to respond to an interrupt signal and switch contexts – it does not include actually running code after you switch contexts) can easily be in the range of 50-100 us depending on what software you have running on the RT Linux. You will need to run your own tests to see how your software system performs. For more info, see https://software-dl.ti.com/processor-sdk-linux/esd/AM64X/09_01_00_08/exports/docs/devices/AM64X/linux/RT_Linux_Performance_Guide.html#stress-ng-and-cyclic-test and https://e2e.ti.com/support/processors-group/processors/f/processors-forum/1183526/faq-linux-how-do-i-test-the-real-time-performance-of-an-am3x-am4x-am6x-soc

Polling based on a Linux timer is an option that would remove all the data copies, etc., involved in sending an RPMsg. HOWEVER, a Linux timer interrupt will still be subject to the interrupt response latency we talked about above. So for your extremely tight cycle times, even polling based on a Linux timer might take more time than you are hoping for.
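A sketch of what timer-based polling might look like, assuming standard POSIX calls (`clock_nanosleep()` with `TIMER_ABSTIME` on `CLOCK_MONOTONIC`). Each cycle sleeps until an absolute deadline 125 us after the previous one, records how late the wake-up was, and then calls a per-cycle hook, which is where a non-blocking check of the shared-memory buffer would go. The recorded lateness reflects the same interrupt response latency discussed above, so this doubles as a rough self-test; for proper characterization, the `cyclictest` tool covered in the linked guide is the better-established option. `run_cycles()` and `example_poll_hook()` are illustrative names, and whether these calls run in Xenomai's real-time domain depends on how the program is built, which this sketch does not address.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stdint.h>
#include <time.h>

#define NSEC_PER_SEC 1000000000L

struct cycle_stats {
    int64_t max_late_ns;  /* worst wake-up lateness past the deadline */
    int overruns;         /* cycles whose hook finished after the next deadline */
    int cycles;
};

typedef void (*cycle_hook)(void *ctx);

/* Example hook that only counts cycles; a real one would poll shared memory. */
static void example_poll_hook(void *ctx)
{
    (*(int *)ctx)++;
}

static void timespec_add_ns(struct timespec *t, long ns)
{
    t->tv_nsec += ns;
    while (t->tv_nsec >= NSEC_PER_SEC) {
        t->tv_nsec -= NSEC_PER_SEC;
        t->tv_sec++;
    }
}

static int64_t timespec_diff_ns(const struct timespec *a, const struct timespec *b)
{
    return (int64_t)(a->tv_sec - b->tv_sec) * NSEC_PER_SEC + (a->tv_nsec - b->tv_nsec);
}

/* Run n cycles of period_ns. Sleeping to an absolute deadline keeps lateness
 * in one cycle from shifting all later deadlines. */
static void run_cycles(long period_ns, int n, cycle_hook hook, void *ctx,
                       struct cycle_stats *st)
{
    struct timespec next, now;
    st->max_late_ns = 0;
    st->overruns = 0;
    st->cycles = 0;
    clock_gettime(CLOCK_MONOTONIC, &next);
    for (int i = 0; i < n; i++) {
        timespec_add_ns(&next, period_ns);
        int rc;
        do {
            rc = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL);
        } while (rc == EINTR);  /* clock_nanosleep returns the error number */
        clock_gettime(CLOCK_MONOTONIC, &now);
        int64_t late = timespec_diff_ns(&now, &next);
        if (late > st->max_late_ns)
            st->max_late_ns = late;
        hook(ctx);
        clock_gettime(CLOCK_MONOTONIC, &now);
        if (timespec_diff_ns(&now, &next) > period_ns)
            st->overruns++;
        st->cycles++;
    }
}
```

Calling `run_cycles(125000L, n, hook, ctx, &st)` matches the 8 kHz cycle. On a stock kernel `max_late_ns` may vary considerably between runs; it is the worst case over a long run under realistic load, not the typical value, that has to fit inside the 125 us budget.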

There are a couple of other options for trying to get around the interrupt response latency, but they all have their tradeoffs. For more information, please refer to the LINUX CORES section of the “Cycle Time” FAQ I linked above.

Regards,

Nick

Hello Nick,

Thank you very much for your analysis!

Our project team has read your response. Your performance analysis of RPMsg on Linux has also deepened my understanding of RPMsg.

Our project team has also discussed your reply, and we are testing new data exchange methods.

Regards,

Huang