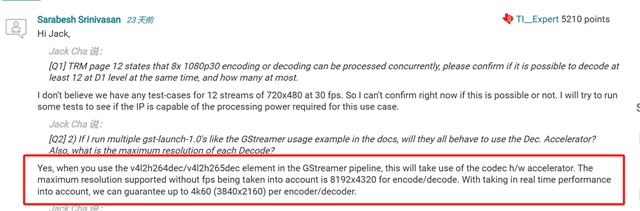

Other Parts Discussed in Thread: TDA4VH, AM69A

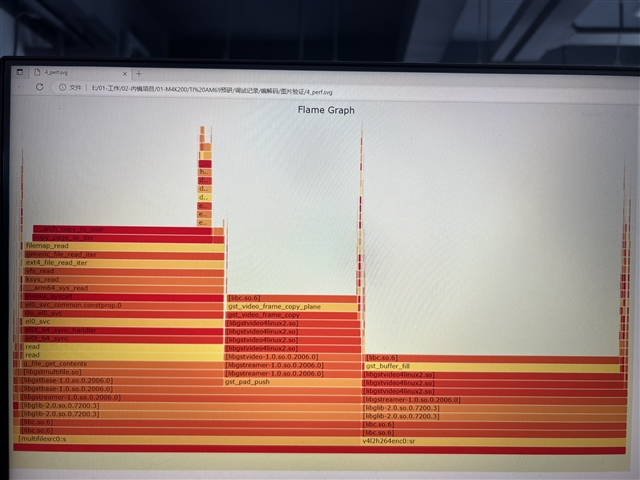

Hello, I am testing real-time hardware encoding and decoding on the AM69 board. Our requirement is to encode two 4K@60 streams simultaneously in real time, without dropping frames.

During testing, we found that the encoder cannot keep up when two 4K@60 streams are encoded simultaneously. Please help us look into this issue. The test plan is as follows:

- First, decode the MP4 into a raw 3840x2160@60 NV12 file. (You provided this MP4 last time.)

```shell
gst-launch-1.0 filesrc location=./bbb4k60_hevc.mp4 ! qtdemux name=demuxer \
    demuxer. ! h265parse ! queue ! v4l2h265dec \
    ! rawvideoparse width=3840 height=2160 format=nv12 framerate=60/1 \
    ! filesink location=/tmp/bbb4k60_hevc.nv12
```
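One quick sanity check on the decoded dump is to compare its size against the NV12 frame size. NV12 stores a full-resolution Y plane followed by an interleaved, half-resolution UV plane, so each frame takes width × height × 3/2 bytes (12,441,600 bytes at 3840x2160). A minimal POSIX shell sketch; the function names are hypothetical helpers, not part of GStreamer or the TI SDK:

```shell
#!/bin/sh
# Hypothetical helpers for checking a raw NV12 dump (not part of GStreamer).

# Bytes per NV12 frame: Y plane (w*h) + interleaved UV plane (w*h/2).
# Usage: nv12_frame_bytes WIDTH HEIGHT
nv12_frame_bytes() {
    echo $(( $1 * $2 * 3 / 2 ))
}

# Prints "<whole frames> <leftover bytes>" for a raw NV12 file.
# Usage: nv12_frame_count FILE WIDTH HEIGHT
nv12_frame_count() {
    size=$(wc -c < "$1")
    frame=$(nv12_frame_bytes "$2" "$3")
    echo "$(( size / frame )) $(( size % frame ))"
}
```

Assuming the 10-second clip decodes completely, `nv12_frame_count /tmp/bbb4k60_hevc.nv12 3840 2160` should report 600 frames with 0 leftover bytes (about 7.46 GB in total).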

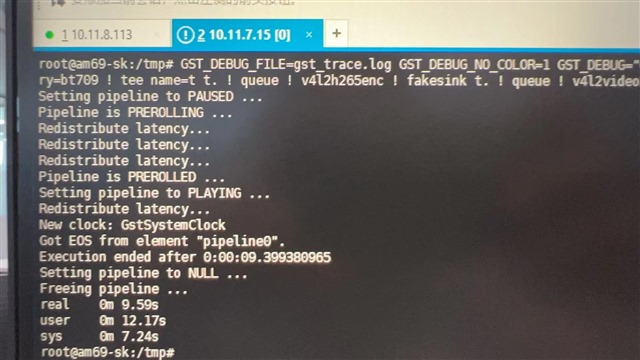

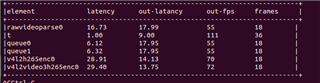

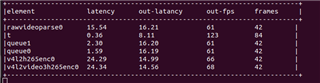

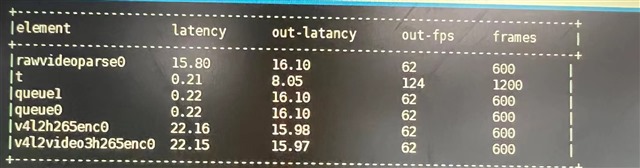

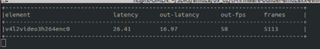

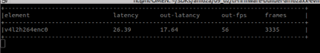

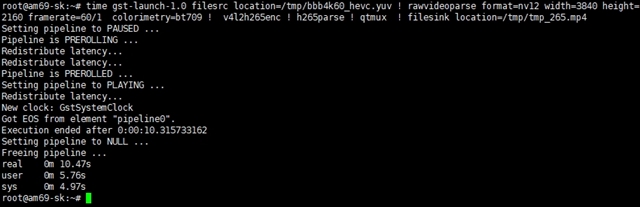

- Next, encode the 3840x2160@60 NV12 file back into an MP4. The encode takes a little over 10 seconds for a 10-second source video, so a single 4K@60 stream only barely reaches real-time encoding.

```shell
time gst-launch-1.0 filesrc location=/tmp/bbb4k60_hevc.nv12 \
    ! rawvideoparse format=nv12 width=3840 height=2160 framerate=60/1 colorimetry=bt709 \
    ! v4l2h265enc ! h265parse ! qtmux ! filesink location=/tmp/tmp_265.mp4
```
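For reference, the raw data rate each encode pipeline has to pull from `filesrc` to keep pace with real time follows directly from the caps given to `rawvideoparse`. A small sketch of that arithmetic in plain shell integer math (the function name is illustrative only):

```shell
#!/bin/sh
# Raw NV12 bytes per second needed to feed one stream in real time.
# Usage: nv12_rate WIDTH HEIGHT FPS
nv12_rate() {
    echo $(( $1 * $2 * 3 / 2 * $3 ))
}

one=$(nv12_rate 3840 2160 60)
echo "one 4K@60 stream:  $one bytes/s"
echo "two 4K@60 streams: $(( 2 * one )) bytes/s"
```

This works out to 746,496,000 bytes/s (about 746 MB/s) of raw input per stream, or roughly 1.49 GB/s when two encodes run side by side.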

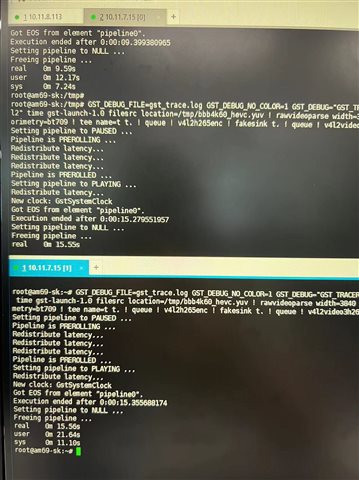

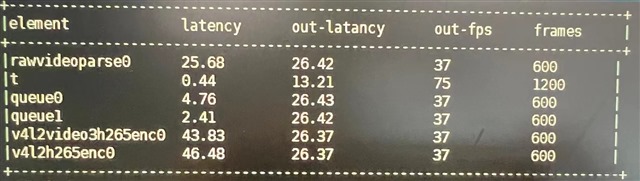

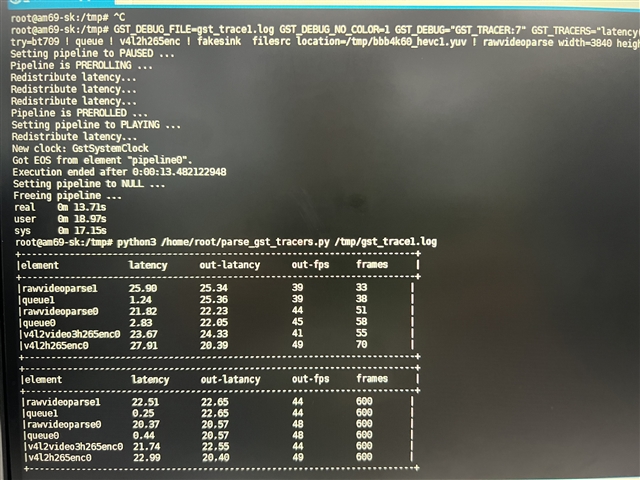

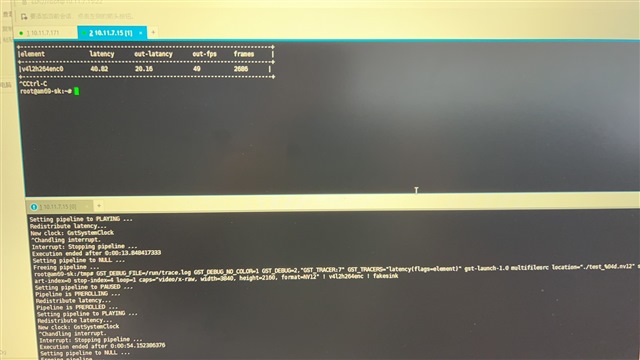

If I start two encoding threads simultaneously, it takes 15 seconds for both to finish, which falls short of real-time encoding for two 4K@60 streams.
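In throughput terms, the 10-second clip at 60 fps is 600 frames per stream, so two streams finishing in 15 seconds is about 1200 / 15 = 80 frames/s in aggregate, against the 2 × 60 = 120 frames/s that dual 4K@60 real-time encoding needs. A minimal sketch of that check, with the measured numbers passed in by hand (the helper name is hypothetical):

```shell
#!/bin/sh
# Compare aggregate encode rate with the real-time target.
# Usage: check_realtime STREAMS FRAMES_PER_STREAM ELAPSED_SECONDS TARGET_FPS
# Prints "<achieved fps> <required fps> <OK|SHORT>" (integer frames/s).
check_realtime() {
    achieved=$(( $1 * $2 / $3 ))
    required=$(( $1 * $4 ))
    if [ "$achieved" -ge "$required" ]; then
        verdict=OK
    else
        verdict=SHORT
    fi
    echo "$achieved $required $verdict"
}

check_realtime 2 600 15 60    # prints: 80 120 SHORT
```

Integer division rounds down, which is acceptable here because the question is only whether the achieved rate reaches the target.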

Thanks!