Other Parts Discussed in Thread: AM69A

Dear Sir,

We have a custom ONNX model that works as expected with tidl_j721e_08_02_00_11 (PSDK 8.2).

However, when the same model is imported with PSDK 9.2 (J721S2), its output in PC emulation contains garbage values.

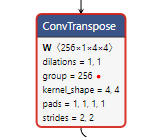

We have also observed that the number of layers detected for the same model differs: 95 with PSDK 8.2 versus 107 with PSDK 9.2.

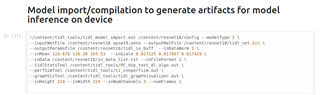

What could be causing this, and how can we debug and resolve the issue?

Thanks and Regards,

Vyom Mishra