Other Parts Discussed in Thread: TDA4VM

Hello, I followed edgeai-tidl-tools/docs/custom_model_evaluation.md and edgeai-tidl-tools/examples/osrt_python/README.md (both at master in the TexasInstruments/edgeai-tidl-tools GitHub repository).

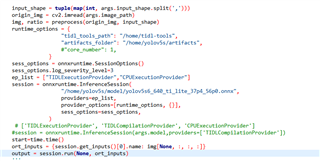

I am compiling on an x86 PC.

I tried to add a new entry to edgeai-tidl-tools/examples/osrt_python/model_configs.py:

models_configs = {

############ onnx models ##########

'yolov8n-ori' : {

'model_path' : os.path.join(models_base_path, 'yolov8n.onnx'),

'mean': [0, 0, 0],

'scale' : [0,0,0],

'num_images' : numImages,

'num_classes': 80,

'model_type': 'detection',

#'od_type' : 'YoloV',

'session_name' : 'onnxrt' ,

'framework' : ''

},

}

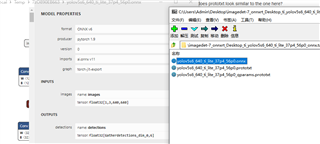

The models list in edgeai-tidl-tools/examples/osrt_python/ort/onnxrt_ep.py:

models = [

#"cl-ort-resnet18-v1",

# "od-ort-ssd-lite_mobilenetv2_fpn"

'yolov8n-ori'

]

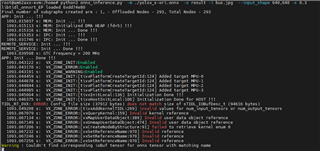

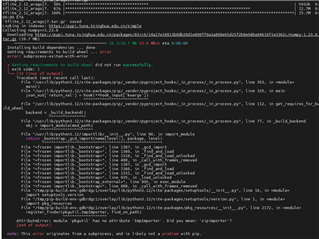

Here's the error:

====================================================================================================

Command : python3 tflrt_delegate.py in Dir : examples/osrt_python/tfl Started

Running 0 Models - []

Command : python3 onnxrt_ep.py in Dir : examples/osrt_python/ort Started

Available execution providers : ['TIDLExecutionProvider', 'TIDLCompilationProvider', 'CPUExecutionProvider']

Running 1 Models - ['yolov8n-ori']

Process Process-1:

Traceback (most recent call last):

File "/home/zxb/.pyenv/versions/3.10.15/lib/python3.10/multiprocessing/process.py", line 314, in _bootstrap

self.run()

File "/home/zxb/.pyenv/versions/3.10.15/lib/python3.10/multiprocessing/process.py", line 108, in run

self._target(*self._args, **self._kwargs)

File "/home/zxb/Desktop/ti/edgeai-tidl-tools/examples/osrt_python/ort/onnxrt_ep.py", line 221, in run_model

download_model(models_configs, model)

File "/home/zxb/Desktop/ti/edgeai-tidl-tools/examples/osrt_python/common_utils.py", line 240, in download_model

model_path = models_configs[model_name]["session"]["model_path"]

KeyError: 'session'

Running_Model : yolov8n-ori

============================================================================================================================
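Reading the traceback, the failing line in common_utils.py looks up `models_configs[model_name]["session"]["model_path"]`, so each entry apparently needs a nested `session` dictionary, while my entry keeps `model_path` at the top level. Below is a minimal sketch of that mismatch and a pre-flight check. The nested layout shows only the two keys implied by the traceback and my entry; the value of `models_base_path`, the helper names, and any further keys the scripts may require are assumptions, not the actual edgeai-tidl-tools schema.

```python
import os

# Hypothetical base path, for illustration only.
models_base_path = "../../../models/public"

# Flat entry, shaped like the one I added (trimmed to the relevant keys).
flat_configs = {
    "yolov8n-ori": {
        "model_path": os.path.join(models_base_path, "yolov8n.onnx"),
        "session_name": "onnxrt",
    }
}

# Nested entry: the same values moved under a "session" sub-dictionary.
# Assumption: only "session" -> "model_path" is confirmed by the traceback.
nested_configs = {
    "yolov8n-ori": {
        "session": {
            "session_name": "onnxrt",
            "model_path": os.path.join(models_base_path, "yolov8n.onnx"),
        }
    }
}


def lookup_model_path(models_configs, model_name):
    """Same lookup as the line shown in the traceback."""
    return models_configs[model_name]["session"]["model_path"]


def missing_session_keys(models_configs, required=("model_path",)):
    """Return {model_name: [missing keys]} for entries the lookup would reject."""
    problems = {}
    for name, cfg in models_configs.items():
        session = cfg.get("session")
        if not isinstance(session, dict):
            problems[name] = ["session"]
            continue
        missing = [key for key in required if key not in session]
        if missing:
            problems[name] = missing
    return problems


if __name__ == "__main__":
    print("flat:", missing_session_keys(flat_configs))
    print("nested:", missing_session_keys(nested_configs))
```

Running a check like this before onnxrt_ep.py would report the flat entry as missing `session` instead of failing inside the worker process.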

Could TI please provide some examples for YOLOv8? Thanks.

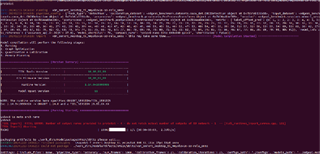

However, it does not generate prototxt files. When I hit a ModuleNotFoundError (no module named 'edgeai_torchmodelopt'), I copied edgeai-tensorlab-main\edgeai-modeloptimization\torchmodelopt to mmyolo\projects\easydeploy\tools\export_onnx.py, and then encountered an ImportError: the name '_parse_stack_trace' could not be imported (C:\Users\Admin\.conda\envs\mmyolo\lib\site-packages\torch\fx\graph.py).