Hi Team,

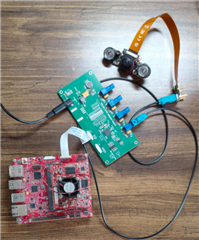

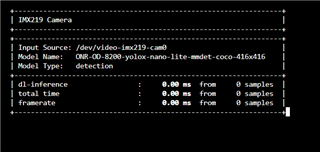

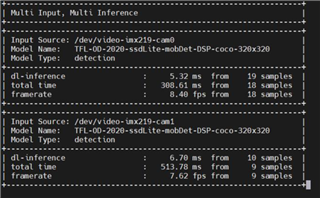

I'm currently working on integrating the IMX219-160_IR-CUT_Camera module with the AM68A board via the CSI2 interface.

I'm able to stream video successfully at 1920x1080 resolution using the following pipeline:

root@am68a-sk:/opt/edgeai-gst-apps# gst-launch-1.0 v4l2src device=/dev/video-imx219-cam0 io-mode=2 ! queue leaky=2 ! video/x-bayer,width=1920,height=1080,format=rggb ! tiovxisp sensor-name=SENSOR_SONY_IMX219_RPI dcc-isp-file=/opt/imaging/imx219/linear/dcc_viss.bin format-msb=7 sink_0::dcc-2a-file=/opt/imaging/imx219/linear/dcc_2a.bin sink_0::device=/dev/v4l-imx219-subdev0 ! video/x-raw,format=NV12 ! timeoverlay ! tiovxmultiscaler name=split0 src_0::roi-startx=0 src_0::roi-starty=0 src_0::roi-width=1280 src_0::roi-height=720 src_2::roi-startx=0 src_2::roi-starty=0 src_2::roi-width=1280 src_2::roi-height=720 target=0 ! queue ! video/x-raw,width=320,height=320 ! tiovxdlpreproc model=/opt/model_zoo/TFL-OD-2020-ssdLite-mobDet-DSP-coco-320x320 out-pool-size=4 ! application/x-tensor-tiovx ! tidlinferer target=1 model=/opt/model_zoo/TFL-OD-2020-ssdLite-mobDet-DSP-coco-320x320 ! post_0.tensor split0. ! queue ! video/x-raw,width=1920,height=1080 ! post_0.sink tidlpostproc name=post_0 model=/opt/model_zoo/TFL-OD-2020-ssdLite-mobDet-DSP-coco-320x320 alpha=0.400000 viz-threshold=0.600000 top-N=5 display-model=true ! queue ! mosaic.sink_0 tiovxmosaic name=mosaic sink_0::startx="<0>" sink_0::starty="<0>" ! kmssink driver-name=tidss sync=false

APP: Init ... !!!

3476.128820 s: MEM: Init ... !!!

3476.128885 s: MEM: Initialized DMA HEAP (fd=8) !!!

3476.129008 s: MEM: Init ... Done !!!

3476.129020 s: IPC: Init ... !!!

3476.190767 s: IPC: Init ... Done !!!

REMOTE_SERVICE: Init ... !!!

REMOTE_SERVICE: Init ... Done !!!

3476.201408 s: GTC Frequency = 200 MHz

APP: Init ... Done !!!

3476.201666 s: VX_ZONE_INFO: Globally Enabled VX_ZONE_ERROR

3476.201938 s: VX_ZONE_INFO: Globally Enabled VX_ZONE_WARNING

3476.202038 s: VX_ZONE_INFO: Globally Enabled VX_ZONE_INFO

3476.202846 s: VX_ZONE_INFO: [tivxPlatformCreateTargetId:134] Added target MPU-0

3476.203756 s: VX_ZONE_INFO: [tivxPlatformCreateTargetId:134] Added target MPU-1

3476.205391 s: VX_ZONE_INFO: [tivxPlatformCreateTargetId:134] Added target MPU-2

3476.205670 s: VX_ZONE_INFO: [tivxPlatformCreateTargetId:134] Added target MPU-3

3476.205820 s: VX_ZONE_INFO: [tivxInitLocal:126] Initialization Done !!!

3476.205877 s: VX_ZONE_INFO: Globally Disabled VX_ZONE_INFO

Number of subgraphs:1 , 129 nodes delegated out of 129 nodes

Setting pipeline to PAUSED ...

Pipeline is live and does not need PREROLL ...

Pipeline is PREROLLED ...

Setting pipeline to PLAYING ...

New clock: GstSystemClock

Redistribute latency...

0:00:04.8 / 99:99:99.

However, when I try to stream at the camera's maximum supported resolution (3264x2464) using the same pipeline with updated width and height, I encounter an error:

root@am68a-sk:/opt/edgeai-gst-apps# gst-launch-1.0 v4l2src device=/dev/video-imx219-cam0 io-mode=2 ! queue leaky=2 ! video/x-bayer,width=3264,height=2464,format=rggb ! tiovxisp sensor-name=SENSOR_SONY_IMX219_RPI dcc-isp-file=/opt/imaging/imx219/linear/dcc_viss.bin format-msb=7 sink_0::dcc-2a-file=/opt/imaging/imx219/linear/dcc_2a.bin sink_0::device=/dev/v4l-imx219-subdev0 ! video/x-raw,format=NV12 ! timeoverlay ! tiovxmultiscaler name=split0 src_0::roi-startx=0 src_0::roi-starty=0 src_0::roi-width=1280 src_0::roi-height=720 src_2::roi-startx=0 src_2::roi-starty=0 src_2::roi-width=1280 src_2::roi-height=720 target=0 ! queue ! video/x-raw,width=320,height=320 ! tiovxdlpreproc model=/opt/model_zoo/TFL-OD-2020-ssdLite-mobDet-DSP-coco-320x320 out-pool-size=4 ! application/x-tensor-tiovx ! tidlinferer target=1 model=/opt/model_zoo/TFL-OD-2020-ssdLite-mobDet-DSP-coco-320x320 ! post_0.tensor split0. ! queue ! video/x-raw,width=1920,height=1080 ! post_0.sink tidlpostproc name=post_0 model=/opt/model_zoo/TFL-OD-2020-ssdLite-mobDet-DSP-coco-320x320 alpha=0.400000 viz-threshold=0.600000 top-N=5 display-model=true ! queue ! mosaic.sink_0 tiovxmosaic name=mosaic sink_0::startx="<0>" sink_0::starty="<0>" ! kmssink driver-name=tidss sync=false

APP: Init ... !!!

3718.199423 s: MEM: Init ... !!!

3718.199467 s: MEM: Initialized DMA HEAP (fd=8) !!!

3718.199586 s: MEM: Init ... Done !!!

3718.199599 s: IPC: Init ... !!!

3718.251133 s: IPC: Init ... Done !!!

REMOTE_SERVICE: Init ... !!!

REMOTE_SERVICE: Init ... Done !!!

3718.258316 s: GTC Frequency = 200 MHz

APP: Init ... Done !!!

3718.258583 s: VX_ZONE_INFO: Globally Enabled VX_ZONE_ERROR

3718.258657 s: VX_ZONE_INFO: Globally Enabled VX_ZONE_WARNING

3718.258714 s: VX_ZONE_INFO: Globally Enabled VX_ZONE_INFO

3718.259491 s: VX_ZONE_INFO: [tivxPlatformCreateTargetId:134] Added target MPU-0

3718.261561 s: VX_ZONE_INFO: [tivxPlatformCreateTargetId:134] Added target MPU-1

3718.261894 s: VX_ZONE_INFO: [tivxPlatformCreateTargetId:134] Added target MPU-2

3718.262060 s: VX_ZONE_INFO: [tivxPlatformCreateTargetId:134] Added target MPU-3

3718.262078 s: VX_ZONE_INFO: [tivxInitLocal:126] Initialization Done !!!

3718.262086 s: VX_ZONE_INFO: Globally Disabled VX_ZONE_INFO

Number of subgraphs:1 , 129 nodes delegated out of 129 nodes

Setting pipeline to PAUSED ...

Pipeline is live and does not need PREROLL ...

Pipeline is PREROLLED ...

Setting pipeline to PLAYING ...

New clock: GstSystemClock

ERROR: from element /GstPipeline:pipeline0/GstV4l2Src:v4l2src0: Failed to allocate required memory.

Additional debug info:

/usr/src/debug/gstreamer1.0-plugins-good/1.22.12/sys/v4l2/gstv4l2src.c(950): gst_v4l2src_decide_allocation (): /GstPipeline:pipeline0/GstV4l2Src:v4l2src0:

Buffer pool activation failed

Execution ended after 0:00:00.025853731

Setting pipeline to NULL ...

ERROR: from element /GstPipeline:pipeline0/GstV4l2Src:v4l2src0: Internal data stream error.

Additional debug info:

/usr/src/debug/gstreamer1.0/1.22.12/libs/gst/base/gstbasesrc.c(3134): gst_base_src_loop (): /GstPipeline:pipeline0/GstV4l2Src:v4l2src0:

streaming stopped, reason not-negotiated (-4)

Freeing pipeline ...

APP: Deinit ... !!!

REMOTE_SERVICE: Deinit ... !!!

REMOTE_SERVICE: Deinit ... Done !!!

3719.060723 s: IPC: Deinit ... !!!

3719.063066 s: IPC: DeInit ... Done !!!

3719.063103 s: MEM: Deinit ... !!!

3719.063482 s: DDR_SHARED_MEM: Alloc's: 37 alloc's of 94292515 bytes

3719.063581 s: DDR_SHARED_MEM: Free's : 37 free's of 94292515 bytes

3719.063610 s: DDR_SHARED_MEM: Open's : 0 allocs of 0 bytes

3719.063634 s: MEM: Deinit ... Done !!!

APP: Deinit ... Done !!!

- Camera module: IMX219-160_IR-CUT_Camera
- Interface: CSI2
- Board: AM68A
- Working resolution: 1920x1080
- Desired resolution: 3264x2464
- SDK: 10.1

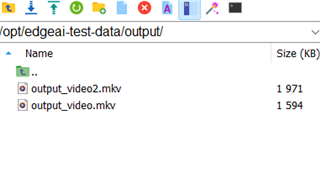

I've confirmed that the sensor is capable of this resolution. Are there any bandwidth restrictions or driver limitations, or are additional settings required, to achieve full-resolution streaming? Any guidance or suggestions would be greatly appreciated!
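For reference, here is a rough sketch of how much larger each raw capture buffer becomes at full resolution, since the failure is reported as "Failed to allocate required memory". It assumes 1 byte per pixel (8-bit Bayer, which is my reading of the plain `rggb` caps and `format-msb=7`); the helper name `frame_bytes` is only illustrative, and the real buffers may be larger because of stride or alignment padding. It does not by itself show that memory size is the cause.

```shell
#!/bin/sh
# Bytes needed for one raw Bayer frame: width * height * bytes per pixel.
# frame_bytes is a hypothetical helper for illustration only.
frame_bytes() {
    echo $(( $1 * $2 * $3 ))
}

# Assumption: 1 byte per pixel (8-bit Bayer); stride/alignment padding is ignored.
small=$(frame_bytes 1920 1080 1)
full=$(frame_bytes 3264 2464 1)

echo "1920x1080: $small bytes per frame"
echo "3264x2464: $full bytes per frame"

# Ratio scaled by 100, because POSIX sh arithmetic is integer-only.
echo "full/small ratio (x100): $(( full * 100 / small ))"
```

Multiplying the per-frame figure by the number of buffers v4l2src requests gives a lower bound on the contiguous memory the capture driver has to provide.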

Noushad