Other Parts Discussed in Thread: TDA4VH


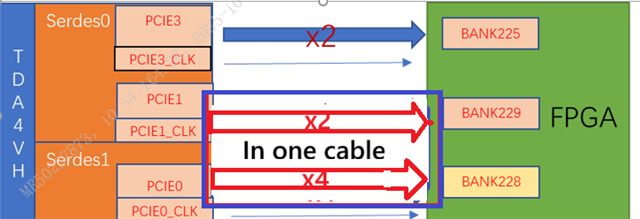

Hardware: TDA4VH with a PCIe x4 link to an FPGA; the FPGA reads from the TDA4VH's DDR. The best bandwidth we have measured is 1.8 GB/s.

1. Is there any problem on the TDA4VH side?

2. What is the maximum speed of the TDA4VH PCIe x4 interface?

Hi Chen,

The numbers that we measure using PCIe SSD card are published here: https://software-dl.ti.com/jacinto7/esd/processor-sdk-linux-j784s4/10_01_00_05/exports/docs/devices/J7_Family/linux/Release_Specific_Performance_Guide.html#pcie-nvme-ssd

In theory, each PCIe lane should be able to run at about 1 GB/s, but there will be some overhead from packet headers and DMA initialization. We have found that DMA was the bottleneck in the EP/RC examples we package with the SDK, so the same may be happening in the FPGA case as well.
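The "about 1 GB/s per lane" figure comes from the Gen 3 line rate of 8 GT/s and its 128b/130b encoding. A minimal sketch of that arithmetic, covering raw link bandwidth only (the header and DMA overheads mentioned above come on top of it):

```python
# Raw PCIe link bandwidth after line encoding, before any protocol overhead.
# Gen 1/2 use 8b/10b encoding; Gen 3 uses 128b/130b.
GEN_PARAMS = {
    1: (2.5e9, 8 / 10),
    2: (5.0e9, 8 / 10),
    3: (8.0e9, 128 / 130),
}


def raw_bandwidth_bytes_per_s(gen: int, lanes: int) -> float:
    """Bytes per second one direction of the link can carry after encoding."""
    transfer_rate, encoding_efficiency = GEN_PARAMS[gen]
    bits_per_s = transfer_rate * encoding_efficiency * lanes
    return bits_per_s / 8


if __name__ == "__main__":
    for lanes in (1, 2, 4):
        gbps = raw_bandwidth_bytes_per_s(3, lanes) / 1e9
        print(f"Gen 3 x{lanes}: {gbps:.3f} GB/s per direction")
```

Run as a script, it prints roughly 0.985, 1.969 and 3.938 GB/s for x1, x2 and x4.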

One experiment to check whether DMA is the bottleneck is to start multiple streams of data to PCIe, each using a different DMA channel. If total throughput doubles, DMA is most likely the bottleneck.
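The experiment amounts to timing one stream, then N concurrent streams, and comparing aggregate throughput. A minimal, device-agnostic harness, assuming a hypothetical `transfer(channel)` callable that moves one block of data on the given DMA channel and returns the bytes moved (it is not part of any TI or Linux API; wrap whatever actually drives a channel on your system):

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical: transfer(channel) moves one block on that DMA channel and
# returns the number of bytes moved.
TransferFn = Callable[[int], int]


def aggregate_throughput(transfer: TransferFn, streams: int) -> float:
    """Run one transfer per channel concurrently; return total bytes/s."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=streams) as pool:
        moved = sum(pool.map(transfer, range(streams)))
    elapsed = time.perf_counter() - start
    return moved / elapsed


def dma_likely_bottleneck(single: float, multi: float, streams: int,
                          threshold: float = 0.8) -> bool:
    """True if N streams reach at least `threshold` of N x single-stream rate.

    Near-linear scaling suggests each channel, not the link, was the limit.
    """
    return multi >= threshold * streams * single


if __name__ == "__main__":
    # Stand-in for a real DMA channel: each call "moves" 1 MiB in 10 ms.
    def fake_transfer(channel: int) -> int:
        time.sleep(0.01)
        return 1 << 20

    one = aggregate_throughput(fake_transfer, 1)
    two = aggregate_throughput(fake_transfer, 2)
    print(f"1 stream: {one / 1e6:.0f} MB/s, 2 streams: {two / 1e6:.0f} MB/s")
    print("DMA-limited?", dma_likely_bottleneck(one, two, 2))
```

On Linux, blocking reads on a device file release the GIL, so plain threads are enough to keep several channels busy at once.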

Regards,

Takuma

We don't use the SoC DMA to send data over PCIe; the FPGA acts as the DMA engine and moves data from DDR over PCIe.

The PCIe SSD card numbers are for x2 width on J72xx; we want to know the performance with x4 width on J784S4.

Hi Chen,

We don't publish numbers for x4 width in the SDK, but we have seen the following internally:

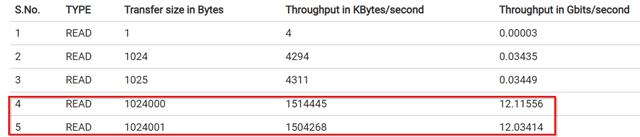

With the SDK 10.0 Linux kernel, the following are the results for RC-to-EP data transfers with the pcitest utility using DMA:

| S.No. | TYPE | Transfer size (bytes) | Throughput (KB/s) | Throughput (Gbit/s) |
| --- | --- | --- | --- | --- |
| 1 | READ | 1 | 4 | 0.00003 |
| 2 | READ | 1024 | 4294 | 0.03435 |
| 3 | READ | 1025 | 4311 | 0.03449 |
| 4 | READ | 1024000 | 1514445 | 12.11556 |
| 5 | READ | 1024001 | 1504268 | 12.03414 |
| 6 | WRITE | 1 | 4 | 0.00003 |
| 7 | WRITE | 1024 | 4360 | 0.03488 |
| 8 | WRITE | 1025 | 4447 | 0.03558 |
| 9 | WRITE | 1024000 | 1326244 | 10.60995 |
| 10 | WRITE | 1024001 | 1322281 | 10.57825 |
| 11 | COPY | 1 | 4 | 0.000032 |
| 12 | COPY | 1024 | 4361 | 0.034888 |
| 13 | COPY | 1025 | 4360 | 0.034888 |
| 14 | COPY | 1024000 | 1100749 | 8.805992 |
| 15 | COPY | 1024001 | 1101597 | 8.812776 |
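The Gbit/s column follows from the KB/s column when K is taken as 1000 (1514445 KB/s x 8 = 12.11556 Gbit/s). A short sketch that reproduces the conversion and expresses the large-transfer rows as a fraction of the raw Gen 3 x4 rate (about 3.94 GB/s after 128b/130b encoding, before protocol overhead):

```python
# Large-transfer rows from the table above: (type, size in bytes, KB/s)
ROWS = [
    ("READ", 1024000, 1514445),
    ("WRITE", 1024000, 1326244),
    ("COPY", 1024000, 1100749),
]

# Raw Gen 3 x4 bandwidth: 8 GT/s * 4 lanes * 128/130 encoding / 8 bits per byte
GEN3_X4_BYTES_PER_S = 8e9 * 4 * 128 / 130 / 8


def kbytes_to_gbits(kbytes_per_s: float) -> float:
    """Convert decimal KB/s (1 KB = 1000 bytes) to Gbit/s."""
    return kbytes_per_s * 1000 * 8 / 1e9


def link_utilization(kbytes_per_s: float) -> float:
    """Fraction of the raw Gen 3 x4 rate achieved."""
    return kbytes_per_s * 1000 / GEN3_X4_BYTES_PER_S


if __name__ == "__main__":
    for kind, size, kbps in ROWS:
        print(f"{kind:5s} {size:>8d} B: {kbytes_to_gbits(kbps):.5f} Gbit/s, "
              f"{link_utilization(kbps):.1%} of raw x4")
```

For the 1024000-byte rows this gives 12.11556, 10.60995 and 8.80599 Gbit/s, i.e. about 38.5%, 33.7% and 27.9% of the raw x4 rate.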

This test was performed with an AM69-SK acting as RC and with a J784S4-EVM acting as EP.

Negotiated Link-Width: x4 and Link-Speed: 8 GT/s (Gen 3).

All tests (Read/Write/Copy) were between RC and EP with DMA enabled (-d option in pcitest command).

The following commands were run on the RC:

Read => `pcitest -d -r -s <size-in-bytes>`

Write => `pcitest -d -w -s <size-in-bytes>`

Copy => `pcitest -d -c -s <size-in-bytes>`
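To repeat the measurement over several transfer sizes, the three commands can be scripted. A sketch that only builds and runs the same `pcitest` invocations listed above (`-d` plus `-r`/`-w`/`-c` and `-s`); it assumes `pcitest` is on the RC's PATH and deliberately does not parse its output, since what is printed depends on the pcitest build in use:

```python
import shutil
import subprocess

# Test type -> pcitest flag, as in the commands above (run on the RC).
TEST_FLAGS = {"read": "-r", "write": "-w", "copy": "-c"}


def pcitest_cmd(test: str, size_bytes: int) -> list[str]:
    """Build a DMA-enabled pcitest command line for one test and size."""
    if size_bytes <= 0:
        raise ValueError("size must be positive")
    return ["pcitest", "-d", TEST_FLAGS[test], "-s", str(size_bytes)]


def run_sweep(sizes: list[int]) -> None:
    """Run every test type at every size, echoing pcitest's own output."""
    if shutil.which("pcitest") is None:
        raise FileNotFoundError("pcitest not found on PATH")
    for test in TEST_FLAGS:
        for size in sizes:
            cmd = pcitest_cmd(test, size)
            result = subprocess.run(cmd, capture_output=True, text=True)
            print(" ".join(cmd), "-> exit", result.returncode)
            print(result.stdout.strip())


if __name__ == "__main__":
    # Same sizes as the table rows above.
    run_sweep([1, 1024, 1025, 1024000, 1024001])
```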

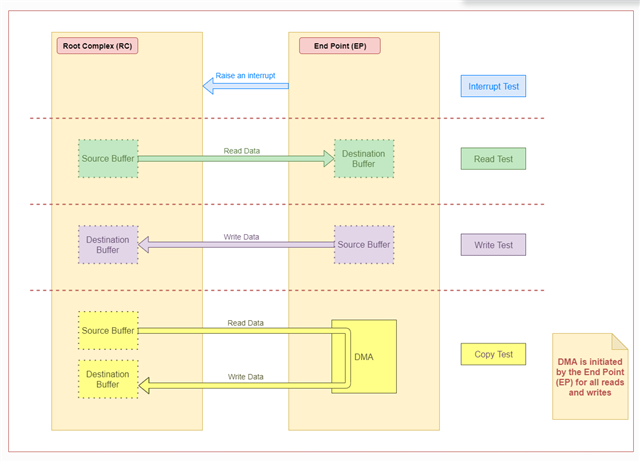

Read => Source Buffer in RC and Destination Buffer in EP.

Write => Source Buffer in EP and Destination Buffer in RC.

Copy => Source and Destination Buffers in RC. EP initiates a read from the RC's Source Buffer followed by a write to the RC's Destination Buffer.

Regards,

Takuma

As a side note, is the TDA4VH/J784S4 link negotiating Gen 3 speed and x4 width? This information can be obtained from `lspci -vvv` and/or dmesg.
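In `lspci -vvv` output the negotiated values appear on each device's `LnkSta:` line (for example `LnkSta: Speed 8GT/s (ok), Width x4 (downgraded)`), while `LnkCap:` shows what the port supports. A small sketch that extracts speed and width from that text so the check can be scripted; it relies only on the line format visible in the logs in this thread:

```python
import re
import subprocess

# Matches e.g. "LnkSta: Speed 8GT/s (ok), Width x4 (downgraded)".
# "LnkSta2:" lines do not match because the colon must follow "LnkSta".
LNKSTA_RE = re.compile(r"LnkSta:\s+Speed\s+([\d.]+)GT/s[^,]*,\s+Width\s+x(\d+)")


def negotiated_links(lspci_text: str) -> list[tuple[float, int]]:
    """Return (speed in GT/s, lane count) for every LnkSta line found."""
    return [(float(speed), int(width))
            for speed, width in LNKSTA_RE.findall(lspci_text)]


if __name__ == "__main__":
    out = subprocess.run(["lspci", "-vvv"], capture_output=True, text=True).stdout
    for speed, width in negotiated_links(out):
        print(f"{speed} GT/s x{width}")
```

`lspci` usually needs root privileges to print the capability blocks that contain `LnkSta`.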

Regards,

Takuma

Hi Takuma,

Why is the maximum throughput with PCIe x4 at a link speed of 8 GT/s (Gen 3) only 1.2 GB/s? In theory it should be more than 3.5 GB/s.

Logs below:

/cfs-file/__key/communityserver-discussions-components-files/791/5481.dmesg.txt

/cfs-file/__key/communityserver-discussions-components-files/791/5481.lspci.txt

thanks.
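A back-of-the-envelope view of what the theoretical figure leaves out: each TLP carries at most Max Payload Size (MPS) bytes of data plus a fixed overhead. This sketch assumes about 20 bytes of overhead per completion TLP at Gen 3 (4-byte framing token, 12-byte completion header, 4-byte LCRC), which is the relevant case when the FPGA reads from host DDR, and it ignores DLLPs and the read requests travelling in the other direction, so real limits are somewhat lower. The payload sizes tried are taken from the lspci dump later in this thread (DevCtl `MaxPayload 128 bytes`, root port DevCap `MaxPayload 256 bytes`, `RCB 64 bytes`):

```python
# Raw Gen 3 x4 bandwidth after 128b/130b encoding, in bytes/s.
RAW_GEN3_X4 = 8e9 * 4 * (128 / 130) / 8

# Assumed per-TLP overhead at Gen 3, in bytes: 4 (framing token)
# + 12 (3-DW completion header) + 4 (LCRC). Memory writes with 64-bit
# addresses carry a 16-byte header instead. DLLPs are ignored.
CPL_OVERHEAD = 4 + 12 + 4


def payload_efficiency(payload_bytes: int, overhead: int = CPL_OVERHEAD) -> float:
    """Fraction of link bytes that are payload when every TLP is full-size."""
    return payload_bytes / (payload_bytes + overhead)


def max_data_rate(payload_bytes: int, raw: float = RAW_GEN3_X4) -> float:
    """Upper bound on data throughput (bytes/s) for a given TLP payload size."""
    return raw * payload_efficiency(payload_bytes)


if __name__ == "__main__":
    # 64: worst case if completions are split at the 64-byte RCB (whether
    # this root port does so is not stated in the thread);
    # 128: MaxPayload set in DevCtl; 256: root port DevCap maximum.
    for payload in (64, 128, 256):
        print(f"payload {payload:4d} B: {max_data_rate(payload) / 1e9:.2f} GB/s")
```

With these assumptions it prints about 3.00, 3.41 and 3.65 GB/s.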

Hi Chen,

The PCIe interface itself can reach 8 GT/s per lane. However, the way data is fed to the PCIe controller often causes a bottleneck. For example, queueing multiple I/O requests and doing asynchronous reads/writes can increase read/write speed. fio is a good example of a benchmarking tool that implements these techniques, and you may use it as a reference.
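Why queueing helps can be put in one line (Little's law): sustained throughput is roughly bytes in flight divided by round-trip latency. The same reasoning applies to an FPGA issuing its own read requests, where bytes in flight are the number of outstanding read requests times the Max Read Request Size. A sketch with a purely hypothetical latency value, not a measurement from this thread:

```python
import math


def throughput_from_concurrency(outstanding: int, request_bytes: int,
                                latency_s: float) -> float:
    """Little's law: bytes/s sustained with `outstanding` requests in flight."""
    return outstanding * request_bytes / latency_s


def outstanding_needed(target_bytes_per_s: float, request_bytes: int,
                       latency_s: float) -> int:
    """Smallest number of in-flight requests that can reach the target rate."""
    return math.ceil(target_bytes_per_s * latency_s / request_bytes)


if __name__ == "__main__":
    LATENCY = 1e-6  # hypothetical 1 us request-to-completion round trip
    MRRS = 512      # MaxReadReq 512 bytes, as reported in the lspci dump
    for depth in (1, 4, 8, 16):
        rate = throughput_from_concurrency(depth, MRRS, LATENCY)
        print(f"{depth:2d} outstanding reads: {rate / 1e9:.2f} GB/s")
    print("reads needed for 3 GB/s:", outstanding_needed(3e9, MRRS, LATENCY))
```

With the assumed 1 µs round trip this prints 0.51, 2.05, 4.10 and 8.19 GB/s, and 6 outstanding reads for 3 GB/s; rates above roughly 3.9 GB/s are capped by the Gen 3 x4 link itself.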

Regards,

Takuma

hi Takuma

1. Do you have test results with the J784S4-EVM acting as RC?

2. In your test results, is there any difference between the J784S4-EVM acting as RC and acting as EP?

3. Can we increase PCIe x4 performance on TDA4VH to at least 2.2 GB/s?

Hi Chen,

AM69-SK is the industrial equivalent part number of J784S4, and in terms of hardware functionality/performance they are the same. So performance numbers that I have posted previously can be interpreted as the test result of J784S4 acting as both RC and EP.

You can increase performance using the methods I have described in my previous post, assuming the bottleneck is due to how your software is feeding in data to PCIe controller.

Regards,

Takuma

Hi ,

Our test environment has the FPGA reading data directly from the J784S4 EVM's DDR. No software runs on the J784S4 EVM board; we only configure PCIe.

In other words: FPGA <==> TDA4VH PCIe controller <==> TDA4VH DDR, with no software involved. We want to know where the bottleneck is, and what the upper performance limit of the hardware design is.

Hi Chen,

I see from the lspci logs that the pci_endpoint_test kernel module is in use. The software running on the J784S4 EVM therefore comes from pci_endpoint_test.c, which is not optimized for performance/benchmarking but rather for demonstrating functionality.

The theoretical upper limit of the hardware is 8 GT/s per lane. An example of what is achieved in practice is the benchmarking numbers I shared, together with the example benchmarking software used.

Regards,

Takuma


hi,

We configure the J784S4 EVM as RC. We don't use software to move data; the FPGA reads data directly over PCIe. Please note this, thank you.

The example you shared doesn't reach the theoretical speed. Could you run some PCIe x4 tests to get as close as possible to the theoretical maximum? Thank you very much.

Hi Chen,

I think there is a miscommunication or misunderstanding about the software on J784S4. Software is required for any transfer to occur from J784S4, whether it is EP or RC. If no software is running, nothing will set up BAR addresses, negotiate link training, set up transfers, etc., so no transactions will occur.

I can see in your logs the following line: "Kernel modules: pci_endpoint_test". This means the pci_endpoint_test kernel module is in use. You might be trying to say that no user software you have created is running on the J784S4 EVM (which is true), but what I am saying is that you are using the demo software provided by the upstream Linux kernel for functional testing (which is what the shared logs indicate).

We have not published J784S4 numbers, but J784S4 uses the same PHY and PCIe controller as J7200, whose numbers are published here: https://software-dl.ti.com/jacinto7/esd/processor-sdk-linux-j784s4/10_01_00_05/exports/docs/devices/J7_Family/linux/Release_Specific_Performance_Guide.html#pcie-nvme-ssd. There, 1.5 GB/s is achieved with PCIe x2, which is close to the theoretical value. The benchmarking software used is fio.

Regards,

Takuma

Hi Chen,

This past E2E forum conversation is a good read: https://e2e.ti.com/support/processors-group/processors/f/processors-forum/1478254/tda4vh-q1-slow-writing-speed-in-nvme-ssd/5675836?tisearch=e2e-sitesearch&keymatch=fio#5675836

The developer started at 350 MB/s and drastically increased write speeds by using different options. I think similar issues are happening for reads/writes with the FPGA.

Regards,

Takuma

hi,

Our design does not use software to send data over PCIe. The FPGA reads data directly from the J784S4 EVM's DDR.

Hi Chen,

Based on previous logs, my understanding is that J784S4 is configured as RC and FPGA is configured as EP. A read from FPGA EP will be a write from J784S4 RC perspective.

For example, consider the "Read Test" in the diagram below, which reads data from the RC:

In the example above, a Read Test from the EP's perspective requires a call to `pci_endpoint_test_write` from the RC's perspective.

Regards,

Takuma

hi ,

Actually, we did not use pci_endpoint_test.ko; the FPGA moves data from DDR directly.

Hi Takuma,

Can the BU test the customer's use case? For example, connect two EVMs with a cable and read from the endpoint side. It would be better to have real test data to analyze.

Hi Tony,

> Can BU test according to customer's use case? for example connect 2 pcs EVM with cable, read from endpoint side? it is better to get real test data to analysis on.

The numbers that we have are the ones posted on April 17 in this thread.

> actuality , we didnot use pci_endpoint_test.ko , FPGA can move data from DDR driectlly.

Based on the lspci logs shared on April 18 in this thread, the pci_endpoint_test kernel driver is in use. Is there a different software setup that you are testing? Below are the logs I am referencing:

/cfs-file/__key/communityserver-discussions-components-files/791/5481.lspci.txt

Regards,

Takuma

Hi Takuma,

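> The numbers that we have are what was posted in April 17 on this thread.
>
> | S.No. | TYPE | Transfer size (bytes) | Throughput (KB/s) | Throughput (Gbit/s) |
> | --- | --- | --- | --- | --- |
> | 4 | READ | 1024000 | 1514445 | 12.11556 |
> | 5 | READ | 1024001 | 1504268 | 12.03414 |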

The maximum throughput in the table is about 12 Gbit/s on x4 lanes. Is this already the best result, or can it be improved? The customer thinks it should reach at least 2 GB/s, which is 50% of the theoretical throughput. What maximum throughput do you think can be achieved in the customer's use case, and how?

> Based off of the lspci logs shared on April 18 on this thread, pci_endpoint_test kernel driver is in-use. Is there a different software setup that you are testing? Below logs are what I am referencing:

I don't understand the context. @chen chen, can you comment?

Hi Takuma,

We didn't load pci_endpoint_test.ko, and our scheme doesn't use any function of pci_endpoint_test.ko; logs below. Do you think it is necessary for my use case? Would it help improve throughput?

[ 61.479100] [egalax_i2c]: reset touchscreen !!

lspci -vv

0000:00:00.0 PCI bridge: Texas Instruments Device b00d (prog-if 00 [Normal decode])

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 498

Bus: primary=00, secondary=01, subordinate=01, sec-latency=0

I/O behind bridge: [disabled]

Memory behind bridge: 10100000-101fffff [size=1M]

Prefetchable memory behind bridge: [disabled]

Secondary status: 66MHz- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- <SERR- <PERR-

BridgeCtl: Parity- SERR+ NoISA- VGA- VGA16- MAbort- >Reset- FastB2B-

PriDiscTmr- SecDiscTmr- DiscTmrStat- DiscTmrSERREn-

Capabilities: [80] Power Management version 3

Flags: PMEClk- DSI- D1+ D2- AuxCurrent=0mA PME(D0+,D1+,D2-,D3hot+,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [90] MSI: Enable+ Count=1/1 Maskable+ 64bit+

Address: 0000000001000000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [b0] MSI-X: Enable- Count=1 Masked-

Vector table: BAR=0 offset=00000000

PBA: BAR=0 offset=00000008

Capabilities: [c0] Express (v2) Root Port (Slot+), MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0

ExtTag- RBE+

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <8us

ClockPM- Surprise- LLActRep- BwNot+ ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s (ok), Width x4 (downgraded)

TrErr- Train- SlotClk- DLActive- BWMgmt- ABWMgmt+

SltCap: AttnBtn- PwrCtrl- MRL- AttnInd- PwrInd- HotPlug- Surprise-

Slot #0, PowerLimit 0.000W; Interlock- NoCompl-

SltCtl: Enable: AttnBtn- PwrFlt- MRL- PresDet- CmdCplt- HPIrq- LinkChg-

Control: AttnInd Off, PwrInd Off, Power+ Interlock-

SltSta: Status: AttnBtn- PowerFlt- MRL+ CmdCplt- PresDet- Interlock-

Changed: MRL- PresDet- LinkState-

RootCap: CRSVisible-

RootCtl: ErrCorrectable- ErrNon-Fatal- ErrFatal- PMEIntEna+ CRSVisible-

RootSta: PME ReqID 0000, PMEStatus- PMEPending-

DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp+ 10BitTagReq- OBFF Via message, ExtFmt+ EETLPPrefix+, MaxEETLPPrefixes 1

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- LN System CLS Not Supported, TPHComp- ExtTPHComp- ARIFwd+

AtomicOpsCap: Routing- 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR+ OBFF Disabled, ARIFwd-

AtomicOpsCtl: ReqEn- EgressBlck-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

RootCmd: CERptEn+ NFERptEn+ FERptEn+

RootSta: CERcvd- MultCERcvd- UERcvd- MultUERcvd-

FirstFatal- NonFatalMsg- FatalMsg- IntMsg 0

ErrorSrc: ERR_COR: 0000 ERR_FATAL/NONFATAL: 0000

Capabilities: [150 v1] Device Serial Number 00-00-00-00-00-00-00-00

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [4c0 v1] Virtual Channel

Caps: LPEVC=0 RefClk=100ns PATEntryBits=1

Arb: Fixed- WRR32- WRR64- WRR128-

Ctrl: ArbSelect=Fixed

Status: InProgress-

VC0: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable+ ID=0 ArbSelect=Fixed TC/VC=ff

Status: NegoPending- InProgress-

VC1: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=1 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC2: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=2 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC3: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=3 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

Capabilities: [5c0 v1] Address Translation Service (ATS)

ATSCap: Invalidate Queue Depth: 01

ATSCtl: Enable-, Smallest Translation Unit: 00

Capabilities: [640 v1] Page Request Interface (PRI)

PRICtl: Enable- Reset-

PRISta: RF- UPRGI- Stopped+

Page Request Capacity: 00000001, Page Request Allocation: 00000000

Capabilities: [900 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=255us PortTPowerOnTime=26us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=0ns

L1SubCtl2: T_PwrOn=10us

Kernel driver in use: pcieport

lspci: Unable to load libkmod resources: error -2

0000:01:00.0 Memory controller: Xilinx Corporation Device 8031

Subsystem: Xilinx Corporation Device 0007

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin ? routed to IRQ 606

Region 0: Memory at 10100000 (32-bit, non-prefetchable) [size=4K]

Capabilities: [80] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [90] MSI: Enable+ Count=2/2 Maskable- 64bit+

Address: 0000000001000400 Data: 0000

Capabilities: [c0] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 1024 bytes, PhantFunc 0, Latency L0s <64ns, L1 <1us

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset- SlotPowerLimit 0.000W

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM not supported

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s (ok), Width x4 (ok)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR-

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt- EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap- ECRCGenEn- ECRCChkCap- ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [3c0 v1] Virtual Channel

Caps: LPEVC=0 RefClk=100ns PATEntryBits=1

Arb: Fixed- WRR32- WRR64- WRR128-

Ctrl: ArbSelect=Fixed

Status: InProgress-

VC0: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable+ ID=0 ArbSelect=Fixed TC/VC=ff

Status: NegoPending- InProgress-

Kernel driver in use: xdma

0001:00:00.0 PCI bridge: Texas Instruments Device b013 (prog-if 00 [Normal decode])

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 502

Bus: primary=00, secondary=01, subordinate=01, sec-latency=0

I/O behind bridge: [disabled]

Memory behind bridge: 18100000-181fffff [size=1M]

Prefetchable memory behind bridge: [disabled]

Secondary status: 66MHz- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- <SERR- <PERR-

BridgeCtl: Parity- SERR+ NoISA- VGA- VGA16- MAbort- >Reset- FastB2B-

PriDiscTmr- SecDiscTmr- DiscTmrStat- DiscTmrSERREn-

Capabilities: [80] Power Management version 3

Flags: PMEClk- DSI- D1+ D2- AuxCurrent=0mA PME(D0+,D1+,D2-,D3hot+,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [90] MSI: Enable+ Count=1/1 Maskable+ 64bit+

Address: 0000000001040000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [b0] MSI-X: Enable- Count=1 Masked-

Vector table: BAR=0 offset=00000000

PBA: BAR=0 offset=00000008

Capabilities: [c0] Express (v2) Root Port (Slot+), MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0

ExtTag- RBE+

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x2, ASPM L1, Exit Latency L1 <8us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s (ok), Width x2 (ok)

TrErr- Train- SlotClk- DLActive- BWMgmt- ABWMgmt-

SltCap: AttnBtn- PwrCtrl- MRL- AttnInd- PwrInd- HotPlug- Surprise-

Slot #0, PowerLimit 0.000W; Interlock- NoCompl-

SltCtl: Enable: AttnBtn- PwrFlt- MRL- PresDet- CmdCplt- HPIrq- LinkChg-

Control: AttnInd Off, PwrInd Off, Power+ Interlock-

SltSta: Status: AttnBtn- PowerFlt- MRL+ CmdCplt- PresDet- Interlock-

Changed: MRL- PresDet- LinkState-

RootCap: CRSVisible-

RootCtl: ErrCorrectable- ErrNon-Fatal- ErrFatal- PMEIntEna+ CRSVisible-

RootSta: PME ReqID 0000, PMEStatus- PMEPending-

DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp+ 10BitTagReq- OBFF Via message, ExtFmt+ EETLPPrefix+, MaxEETLPPrefixes 1

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- LN System CLS Not Supported, TPHComp- ExtTPHComp- ARIFwd-

AtomicOpsCap: Routing- 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR+ OBFF Disabled, ARIFwd-

AtomicOpsCtl: ReqEn- EgressBlck-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

RootCmd: CERptEn+ NFERptEn+ FERptEn+

RootSta: CERcvd- MultCERcvd- UERcvd- MultUERcvd-

FirstFatal- NonFatalMsg- FatalMsg- IntMsg 0

ErrorSrc: ERR_COR: 0000 ERR_FATAL/NONFATAL: 0000

Capabilities: [140 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 1

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [150 v1] Device Serial Number 00-00-00-00-00-00-00-00

Capabilities: [160 v1] Power Budgeting <?>

Capabilities: [1b8 v1] Latency Tolerance Reporting

Max snoop latency: 0ns

Max no snoop latency: 0ns

Capabilities: [1c0 v1] Dynamic Power Allocation <?>

Capabilities: [200 v1] Single Root I/O Virtualization (SR-IOV)

IOVCap: Migration-, Interrupt Message Number: 000

IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy-

IOVSta: Migration-

Initial VFs: 4, Total VFs: 4, Number of VFs: 0, Function Dependency Link: 00

VF offset: 6, stride: 1, Device ID: 0100

Supported Page Size: 00000553, System Page Size: 00000001

Region 0: Memory at 0000000018400000 (64-bit, non-prefetchable)

VF Migration: offset: 00000000, BIR: 0

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [400 v1] Vendor Specific Information: ID=0001 Rev=1 Len=010 <?>

Capabilities: [440 v1] Process Address Space ID (PASID)

PASIDCap: Exec+ Priv+, Max PASID Width: 14

PASIDCtl: Enable+ Exec+ Priv+

Capabilities: [4c0 v1] Virtual Channel

Caps: LPEVC=0 RefClk=100ns PATEntryBits=1

Arb: Fixed- WRR32- WRR64- WRR128-

Ctrl: ArbSelect=Fixed

Status: InProgress-

VC0: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable+ ID=0 ArbSelect=Fixed TC/VC=ff

Status: NegoPending- InProgress-

VC1: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=1 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC2: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=2 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC3: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=3 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

Capabilities: [5c0 v1] Address Translation Service (ATS)

ATSCap: Invalidate Queue Depth: 01

ATSCtl: Enable-, Smallest Translation Unit: 00

Capabilities: [640 v1] Page Request Interface (PRI)

PRICtl: Enable- Reset-

PRISta: RF- UPRGI- Stopped+

Page Request Capacity: 00000001, Page Request Allocation: 00000000

Capabilities: [900 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=255us PortTPowerOnTime=26us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=0ns

L1SubCtl2: T_PwrOn=10us

Capabilities: [a20 v1] Precision Time Measurement

PTMCap: Requester:+ Responder:- Root:-

PTMClockGranularity: Unimplemented

PTMControl: Enabled:- RootSelected:-

PTMEffectiveGranularity: Unknown

Kernel driver in use: pcieport

0001:01:00.0 Memory controller: Xilinx Corporation Device 5031

Subsystem: Xilinx Corporation Device 0007

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin ? routed to IRQ 608

Region 0: Memory at 18100000 (32-bit, non-prefetchable) [size=4K]

Capabilities: [80] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [90] MSI: Enable+ Count=2/2 Maskable- 64bit+

Address: 0000000001040400 Data: 0000

Capabilities: [c0] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 1024 bytes, PhantFunc 0, Latency L0s <64ns, L1 <1us

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset- SlotPowerLimit 0.000W

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x2, ASPM not supported

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s (ok), Width x2 (ok)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR-

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt- EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap- ECRCGenEn- ECRCChkCap- ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [3c0 v1] Virtual Channel

Caps: LPEVC=0 RefClk=100ns PATEntryBits=1

Arb: Fixed- WRR32- WRR64- WRR128-

Ctrl: ArbSelect=Fixed

Status: InProgress-

VC0: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable+ ID=0 ArbSelect=Fixed TC/VC=ff

Status: NegoPending- InProgress-

Kernel driver in use: xdma

0002:00:00.0 PCI bridge: Texas Instruments Device b00d (prog-if 00 [Normal decode])

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 506

Bus: primary=00, secondary=01, subordinate=01, sec-latency=0

I/O behind bridge: [disabled]

Memory behind bridge: 00100000-001fffff [size=1M]

Prefetchable memory behind bridge: [disabled]

Secondary status: 66MHz- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- <SERR- <PERR-

BridgeCtl: Parity- SERR+ NoISA- VGA- VGA16- MAbort- >Reset- FastB2B-

PriDiscTmr- SecDiscTmr- DiscTmrStat- DiscTmrSERREn-

Capabilities: [80] Power Management version 3

Flags: PMEClk- DSI- D1+ D2- AuxCurrent=0mA PME(D0+,D1+,D2-,D3hot+,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [90] MSI: Enable+ Count=1/1 Maskable+ 64bit+

Address: 00000000010c0000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [b0] MSI-X: Enable- Count=1 Masked-

Vector table: BAR=0 offset=00000000

PBA: BAR=0 offset=00000008

Capabilities: [c0] Express (v2) Root Port (Slot+), MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0

ExtTag- RBE+

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x2, ASPM L1, Exit Latency L1 <8us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s (ok), Width x2 (ok)

TrErr- Train- SlotClk- DLActive- BWMgmt- ABWMgmt-

SltCap: AttnBtn- PwrCtrl- MRL- AttnInd- PwrInd- HotPlug- Surprise-

Slot #0, PowerLimit 0.000W; Interlock- NoCompl-

SltCtl: Enable: AttnBtn- PwrFlt- MRL- PresDet- CmdCplt- HPIrq- LinkChg-

Control: AttnInd Off, PwrInd Off, Power+ Interlock-

SltSta: Status: AttnBtn- PowerFlt- MRL+ CmdCplt- PresDet- Interlock-

Changed: MRL- PresDet- LinkState-

RootCap: CRSVisible-

RootCtl: ErrCorrectable- ErrNon-Fatal- ErrFatal- PMEIntEna+ CRSVisible-

RootSta: PME ReqID 0000, PMEStatus- PMEPending-

DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp+ 10BitTagReq- OBFF Via message, ExtFmt+ EETLPPrefix+, MaxEETLPPrefixes 1

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- LN System CLS Not Supported, TPHComp- ExtTPHComp- ARIFwd-

AtomicOpsCap: Routing- 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR+ OBFF Disabled, ARIFwd-

AtomicOpsCtl: ReqEn- EgressBlck-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

RootCmd: CERptEn+ NFERptEn+ FERptEn+

RootSta: CERcvd- MultCERcvd- UERcvd- MultUERcvd-

FirstFatal- NonFatalMsg- FatalMsg- IntMsg 0

ErrorSrc: ERR_COR: 0000 ERR_FATAL/NONFATAL: 0000

Capabilities: [140 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 1

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [150 v1] Device Serial Number 00-00-00-00-00-00-00-00

Capabilities: [160 v1] Power Budgeting <?>

Capabilities: [1b8 v1] Latency Tolerance Reporting

Max snoop latency: 0ns

Max no snoop latency: 0ns

Capabilities: [1c0 v1] Dynamic Power Allocation <?>

Capabilities: [200 v1] Single Root I/O Virtualization (SR-IOV)

IOVCap: Migration-, Interrupt Message Number: 000

IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy-

IOVSta: Migration-

Initial VFs: 4, Total VFs: 4, Number of VFs: 0, Function Dependency Link: 00

VF offset: 6, stride: 1, Device ID: 0100

Supported Page Size: 00000553, System Page Size: 00000001

Region 0: Memory at 0000000000400000 (64-bit, non-prefetchable)

VF Migration: offset: 00000000, BIR: 0

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [400 v1] Vendor Specific Information: ID=0001 Rev=1 Len=010 <?>

Capabilities: [440 v1] Process Address Space ID (PASID)

PASIDCap: Exec+ Priv+, Max PASID Width: 14

PASIDCtl: Enable+ Exec+ Priv+

Capabilities: [4c0 v1] Virtual Channel

Caps: LPEVC=0 RefClk=100ns PATEntryBits=1

Arb: Fixed- WRR32- WRR64- WRR128-

Ctrl: ArbSelect=Fixed

Status: InProgress-

VC0: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable+ ID=0 ArbSelect=Fixed TC/VC=ff

Status: NegoPending- InProgress-

VC1: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=1 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC2: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=2 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC3: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=3 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

Capabilities: [5c0 v1] Address Translation Service (ATS)

ATSCap: Invalidate Queue Depth: 01

ATSCtl: Enable-, Smallest Translation Unit: 00

Capabilities: [640 v1] Page Request Interface (PRI)

PRICtl: Enable- Reset-

PRISta: RF- UPRGI- Stopped+

Page Request Capacity: 00000001, Page Request Allocation: 00000000

Capabilities: [900 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=255us PortTPowerOnTime=26us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=0ns

L1SubCtl2: T_PwrOn=10us

Capabilities: [a20 v1] Precision Time Measurement

PTMCap: Requester:+ Responder:- Root:-

PTMClockGranularity: Unimplemented

PTMControl: Enabled:- RootSelected:-

PTMEffectiveGranularity: Unknown

Kernel driver in use: pcieport

0002:01:00.0 Memory controller: Xilinx Corporation Device 7031

Subsystem: Xilinx Corporation Device 0007

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin ? routed to IRQ 610

Region 0: Memory at 4410100000 (32-bit, non-prefetchable) [size=4K]

Capabilities: [80] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [90] MSI: Enable+ Count=4/4 Maskable- 64bit+

Address: 00000000010c0400 Data: 0000

Capabilities: [c0] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 1024 bytes, PhantFunc 0, Latency L0s <64ns, L1 <1us

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset- SlotPowerLimit 0.000W

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x2, ASPM not supported

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s (ok), Width x2 (ok)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR-

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt- EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap- ECRCGenEn- ECRCChkCap- ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [3c0 v1] Virtual Channel

Caps: LPEVC=0 RefClk=100ns PATEntryBits=1

Arb: Fixed- WRR32- WRR64- WRR128-

Ctrl: ArbSelect=Fixed

Status: InProgress-

VC0: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable+ ID=0 ArbSelect=Fixed TC/VC=ff

Status: NegoPending- InProgress-

Kernel driver in use: xdma

root@j784s4-evm:/# cd lib/

root@j784s4-evm:/lib# find -name pci_endpoint*

./modules/6.1.46-g5892b80d6b/kernel/drivers/misc/pci_endpoint_test.ko

root@j784s4-evm:/lib# uname -a

Linux j784s4-evm 6.1.46-nw-00.00.00.16-rt13 #7 SMP PREEMPT Fri Sep 12 11:03:45 CST 2025 aarch64 aarch64 aarch64 GNU/Linux

root@j784s4-evm:/lib#

| 4 | READ | 1024000 | 1514445 | 12.11556 |

| 5 | READ | 1024001 | 1504268 | 12.03414 |

Your test result is even lower than ours: our measured throughput is 1.34 GB/s to 1.81 GB/s, and we expect more than 2 GB/s. Can this be achieved, and how? Could you help us achieve it on your side with our use case?
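To compare the pcitest table with the 1.34 GB/s to 1.81 GB/s range, the KBytes/second column has to be converted. The table's own Gbits/second column is consistent with 1 KB = 1000 bytes (1514445 × 8 / 10^6 = 12.11556), so the 1024000-byte READ corresponds to about 1.51 GB/s. A minimal conversion sketch:

```python
def kbytes_per_s_to_gb_and_gbit(kbytes_per_s: int) -> tuple[float, float]:
    """Convert the pcitest table's KBytes/second into (GB/s, Gbit/s).

    Uses 1 KB = 1000 bytes, which is what the table's Gbits/second
    column implies; GB here means 10**9 bytes.
    """
    gbytes = kbytes_per_s * 1000 / 1e9
    return gbytes, gbytes * 8


# Row 4 of the pcitest table: READ, 1024000 bytes, 1514445 KBytes/second.
gbs, gbits = kbytes_per_s_to_gb_and_gbit(1514445)
print(f"{gbs:.3f} GB/s, {gbits:.5f} Gbit/s")
```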

Hi Chen,

Thanks for the new logs. Now I see where the discrepancy in our conversation was. It looks like the xdma kernel driver is in use on the SoC side.
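The lspci output above is long; the fields most relevant to throughput are the DevCap and DevCtl MaxPayload values, MaxReadReq, and LnkSta. The sketch below pulls them out of one device's block. The regexes assume the line formats printed in these logs and may need adjusting for other pciutils versions. Note that a device's DevCap value is only what it supports: the usable MPS on a link is limited by the smallest DevCap along the path, and the TI root port above the xdma device reports DevCap MaxPayload 256 bytes.

```python
import re


def parse_pcie_caps(lspci_text: str) -> dict:
    """Extract MPS/MRRS and the negotiated link from one `lspci -vv` device block.

    Regexes assume the line formats seen in this thread's logs.
    """
    info = {}
    # DevCap: the maximum payload the function is capable of.
    m = re.search(r"DevCap:\s+MaxPayload (\d+) bytes", lspci_text)
    if m:
        info["mps_supported"] = int(m.group(1))
    # DevCtl continuation line: the values actually programmed and in use.
    m = re.search(r"MaxPayload (\d+) bytes, MaxReadReq (\d+) bytes", lspci_text)
    if m:
        info["mps_in_use"] = int(m.group(1))
        info["mrrs"] = int(m.group(2))
    # LnkSta: negotiated link speed and width.
    m = re.search(r"LnkSta:\s+Speed ([\d.]+)GT/s[^,]*, Width x(\d+)", lspci_text)
    if m:
        info["speed_gt_s"] = float(m.group(1))
        info["width"] = int(m.group(2))
    return info


# Excerpt of the Xilinx 7031 endpoint block from the log above.
fpga_excerpt = """
DevCap: MaxPayload 1024 bytes, PhantFunc 0, Latency L0s <64ns, L1 <1us
MaxPayload 128 bytes, MaxReadReq 512 bytes
LnkSta: Speed 8GT/s (ok), Width x2 (ok)
"""
caps = parse_pcie_caps(fpga_excerpt)
print(caps)
if caps.get("mps_in_use", 0) < caps.get("mps_supported", 0):
    print("MPS in use is below the device capability; check the rest of the path.")
```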

We didn’t load pci_endpoint_test.ko , and our scheme don‘t use function of pci_endpoint_test.ko, log as below. Do you think is it necessary for my use case? will it help to improve throughput?

No, pci_endpoint_test.ko should not improve throughput.

Our measured throughput is 1.34 GB/s to 1.81 GB/s, and we expect more than 2 GB/s.

Regards,

Takuma

Hi.

Can you confirm that it is this driver: https://git.ti.com/cgit/ti-linux-kernel/ti-linux-kernel/tree/drivers/dma/xilinx/xdma.c?h=ti-linux-6.12.y

This is our own driver for the FPGA function. It does not use the TDA4VH's DMA to send data.

On which Xilinx device are you seeing these numbers: 8031, 5031, 7031, or all three?

0x8031, on PCIE0 at x4, 8 GT/s.

Hi Chen,

Understood. Two questions:

The PCIe interface itself should theoretically support about 4 GB/s. However, depending on how data is prepared and fed to the PCIe interface, there may be bottlenecks that lower the throughput. Since 4 GB/s is a theoretical value that will not be reached in practice, below are some practical examples and numbers that I have observed.
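The ~4 GB/s figure, and why practical numbers sit below it, can be estimated from the link parameters. A minimal sketch, assuming PCIe Gen3 at 8 GT/s per lane with 128b/130b encoding (the LnkSta lines in these logs show 8GT/s) and an assumed per-TLP overhead of 24 bytes; MPS 128 and 256 are the DevCtl and DevCap values of the root ports in the logs. It ignores DLLP traffic, flow-control stalls and DMA behaviour, so real throughput will be lower still.

```python
def raw_gen3_gbs(lanes: int) -> float:
    """Raw PCIe Gen3 bandwidth per direction in GB/s (10**9 bytes/s).

    8 GT/s per lane with 128b/130b encoding: 128 of every 130 bits carry data.
    """
    return lanes * 8e9 * (128 / 130) / 8 / 1e9


def tlp_efficiency(payload_bytes: int, overhead_bytes: int = 24) -> float:
    """Share of link bytes that are payload for back-to-back TLPs.

    overhead_bytes is an ASSUMED per-TLP cost (Gen3 framing token with the
    sequence number, a 4-DW header, LCRC). ACK/UpdateFC DLLPs are ignored.
    """
    return payload_bytes / (payload_bytes + overhead_bytes)


raw = raw_gen3_gbs(4)
print(f"Gen3 x4 raw: {raw:.2f} GB/s per direction")
for mps in (128, 256):
    eff = tlp_efficiency(mps)
    print(f"  MPS {mps}: {eff:.1%} payload efficiency -> at most {eff * raw:.2f} GB/s")
```

Larger payloads amortize the fixed header cost, which is one reason raising MPS can help.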

For example, pci_endpoint_test is most likely bottlenecked by DMA transfer speed. When we started multiple transfers by creating multiple PCIe functions on the same EP, throughput scaled linearly with the number of functions, because each function used its own DMA channel.

As another example, using the fio benchmarking tool with an SSD at x4 width, we saw around 2.5 GB/s read throughput. Logs below:
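The scaling experiment can be summarized with a simple model: if each DMA channel is individually limited, aggregate throughput grows with the number of channels until the link itself saturates. A minimal sketch; the per-channel and usable-link figures below are hypothetical inputs, not measurements from this thread.

```python
def aggregate_throughput(n_channels: int, per_channel_gbs: float, link_gbs: float) -> float:
    """Aggregate GB/s for n independent DMA channels sharing one PCIe link.

    Each channel adds its own throughput until the link becomes the limit.
    """
    return min(n_channels * per_channel_gbs, link_gbs)


# Hypothetical: 1.5 GB/s per channel, ~3.3 GB/s usable on the link.
for n in range(1, 4):
    print(n, aggregate_throughput(n, 1.5, 3.3))
```

If adding a second stream roughly doubles the total, the single DMA channel (not the link) was the limit.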

/run/media/nvme0n1/test-pcie-1: (g=0): rw=read, bs=(R) 4096KiB-4096KiB, (W) 4096KiB-4096KiB, (T) 4096KiB-4096KiB, ioengine=libaio, iodepth=4

fio-3.17-dirty

Starting 1 process

/run/media/nvme0n1/test-pcie-1: (groupid=0, jobs=1): err= 0: pid=1383: Mon Mar 13 16:09:44 2023

read: IOPS=605, BW=2421MiB/s (2538MB/s)(142GiB/60006msec)

slat (usec): min=40, max=1994, avg=58.59, stdev=28.59

clat (usec): min=6122, max=10257, avg=6548.78, stdev=120.19

lat (usec): min=6181, max=12247, avg=6607.70, stdev=124.32

clat percentiles (usec):

| 1.00th=[ 6259], 5.00th=[ 6390], 10.00th=[ 6390], 20.00th=[ 6456],

| 30.00th=[ 6456], 40.00th=[ 6521], 50.00th=[ 6521], 60.00th=[ 6587],

| 70.00th=[ 6587], 80.00th=[ 6652], 90.00th=[ 6718], 95.00th=[ 6718],

| 99.00th=[ 6783], 99.50th=[ 6849], 99.90th=[ 6915], 99.95th=[ 6980],

| 99.99th=[ 9634]

bw ( MiB/s): min= 2344, max= 2432, per=99.99%, avg=2420.41, stdev=10.49, samples=120

iops : min= 586, max= 608, avg=605.08, stdev= 2.62, samples=120

lat (msec) : 10=99.99%, 20=0.01%

cpu : usr=0.22%, sys=3.61%, ctx=36317, majf=0, minf=542

IO depths : 1=0.1%, 2=0.1%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=36312,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=4

Run status group 0 (all jobs):

READ: bw=2421MiB/s (2538MB/s), 2421MiB/s-2421MiB/s (2538MB/s-2538MB/s), io=142GiB (152GB), run=60006-60006msec

Disk stats (read/write):

nvme0n1: ios=144984/0, merge=0/0, ticks=873731/0, in_queue=873731, util=99.87%

In the above case, the fio command options were: --ioengine=libaio --iodepth=4 --numjobs=1 --direct=1 --runtime=60 --time_based --bs=4M

With these options, fio issues asynchronous reads/writes and keeps up to 4 I/O requests in flight at the same time.
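Why the queue depth matters can be checked against the fio log itself with Little's law: with a fixed amount of data in flight, bandwidth is roughly (bytes in flight) / (average latency per request). A minimal sketch using the bs=4M, iodepth=4 settings and the "lat ... avg" values from the fio logs in this thread:

```python
def littles_law_mib_s(iodepth: int, block_mib: float, avg_lat_us: float) -> float:
    """Estimate bandwidth as (data kept in flight) / (average request latency)."""
    in_flight_mib = iodepth * block_mib
    return in_flight_mib / (avg_lat_us * 1e-6)


read_est = littles_law_mib_s(4, 4.0, 6607.70)    # fio reported BW=2421MiB/s
write_est = littles_law_mib_s(4, 4.0, 11045.93)  # fio reported BW=1447MiB/s
print(f"read  ~ {read_est:.0f} MiB/s")
print(f"write ~ {write_est:.0f} MiB/s")
```

The same relationship would apply to an FPGA DMA engine: if it keeps only a small amount of data outstanding, throughput is capped by round-trip latency regardless of lane count.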

Regards,

Takuma

Hi,

What is the throughput seen on the other two FPGA devices with x2 width?

PCIe x2 throughput with the FPGA is also 1.33 GB/s, which is almost equal to the PCIe x4 throughput between the TDA4VH and the FPGA.

Could you share the code that runs during transfer to get an idea of how the throughput is obtained, and transfer is occurring?

1. Our test code allocates DMA memory;

2. it tells the FPGA the physical address of that memory, and then the FPGA moves the data itself;

3. the FPGA records the time taken to move the data, and then we calculate the throughput.
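The calculation in step 3 can be sketched as below. How the FPGA timestamps the transfer is not described in the thread, so this assumes a hypothetical free-running counter started at the first read request and stopped at the last completion; the 250 MHz clock and the cycle count are illustrative values only. Reporting both GB/s and GiB/s avoids a ~7% ambiguity when comparing against a 2 GB/s target.

```python
def throughput_from_cycles(num_bytes: int, cycles: int, clk_hz: float) -> tuple[float, float]:
    """Return (GB/s, GiB/s) for num_bytes moved in `cycles` ticks of a clk_hz counter.

    Assumes a hypothetical FPGA counter covering only the data movement,
    not buffer allocation or driver setup.
    """
    seconds = cycles / clk_hz
    rate = num_bytes / seconds
    return rate / 1e9, rate / 2**30


# Hypothetical: a 64 MiB buffer moved in 9_000_000 cycles of a 250 MHz counter.
gb, gib = throughput_from_cycles(64 * 2**20, 9_000_000, 250e6)
print(f"{gb:.3f} GB/s = {gib:.3f} GiB/s")
```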

In the above case, the fio command options were: --ioengine=libaio --iodepth=4 --numjobs=1 --direct=1 --runtime=60 --time_based

This is a read operation. Can you show write IOPS data (PCIe TX)? Throughput from the TDA4VH's PCIe to the outside is critical for our application. The SSD may be the bottleneck for write operations; in any case, we need TI to prove that PCIe can send out data at more than 2 GB/s with 4 lanes.

Could you share the code that runs during transfer to get an idea of how the throughput is obtained, and transfer is occurring?

We think we should not make things confusing. Our support need is simple; in case it was not clear, I will repeat it.

#1. Background: the TDA4VH connects to the FPGA over PCIe x4. The TDA4VH acts as RC, the FPGA acts as EP, and the FPGA reads data from the TDA4VH. Current test results show throughput at about 40% of the theoretical throughput.

#2. Does TI have throughput data for this use case? If the data below is TI's throughput data, it is even lower than our test result. Can it be improved?

#3. If not, can TI set up a similar test environment to measure and improve the throughput?

Hi Chen,

PCIe x2 throughput with the FPGA is also 1.33 GB/s, which is almost equal to the PCIe x4 throughput between the TDA4VH and the FPGA.

This indicates a bottleneck somewhere other than the PCIe interface. The PCIe interface itself should be able to support double the x2 speed in x4 mode.

Does TI have throughput data for this use case? If the data below is TI's throughput data, it is even lower than our test result. Can it be improved?

No. I just checked SSD card writes and am seeing around 1.5 GB/s write throughput. The same device supports around 2.5 GB/s read.
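The argument can be made concrete with the numbers reported in this thread: 1.33 GB/s at x2 and 1.34 GB/s to 1.81 GB/s at x4. A minimal sketch, using the raw Gen3 bandwidth (8 GT/s per lane, 128b/130b encoding, protocol overhead not subtracted): if the link were the limit, going from x2 to x4 should roughly double throughput, so a ratio well below 2 points at something other than the link width.

```python
def raw_gen3_gbs(lanes: int) -> float:
    """Raw PCIe Gen3 bandwidth per direction in GB/s (8 GT/s per lane, 128b/130b)."""
    return lanes * 8e9 * (128 / 130) / 8 / 1e9


x2_gbs = 1.33                # reported for the x2 FPGA links
x4_range_gbs = (1.34, 1.81)  # reported for the x4 FPGA link

print(f"x2: {x2_gbs / raw_gen3_gbs(2):.0%} of raw link bandwidth")
for x4 in x4_range_gbs:
    print(f"x4 at {x4} GB/s: {x4 / raw_gen3_gbs(4):.0%} of raw, "
          f"{x4 / x2_gbs:.2f}x the x2 result (link-bound scaling would be ~2x)")
```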

root@j784s4-evm:~# fio --name=/run/media/nvme0n1/test-pcie-1 --ioengine=libaio --iodepth=4 --numjobs=1 --direct=1 --runtime=60 --time_based --rw=write --bs=4M

/run/media/nvme0n1/test-pcie-1: (g=0): rw=write, bs=(R) 4096KiB-4096KiB, (W) 4096KiB-4096KiB, (T) 4096KiB-4096KiB, ioengine=libaio, iodepth=4

fio-3.36-117-gb2403

Starting 1 process

Jobs: 1 (f=1): [W(1)][100.0%][w=1440MiB/s][w=360 IOPS][eta 00m:00s]

/run/media/nvme0n1/test-pcie-1: (groupid=0, jobs=1): err= 0: pid=1288: Thu May 29 22:23:37 2025

write: IOPS=361, BW=1447MiB/s (1518MB/s)(84.8GiB/60008msec); 0 zone resets

slat (usec): min=625, max=11471, avg=1085.96, stdev=197.98

clat (usec): min=3333, max=22024, avg=9959.97, stdev=2249.70

lat (usec): min=4400, max=23087, avg=11045.93, stdev=2241.27

clat percentiles (usec):

| 1.00th=[ 8455], 5.00th=[ 8586], 10.00th=[ 8717], 20.00th=[ 8717],

| 30.00th=[ 8848], 40.00th=[ 8848], 50.00th=[ 8848], 60.00th=[ 8979],

| 70.00th=[ 9241], 80.00th=[ 9503], 90.00th=[14353], 95.00th=[15270],

| 99.00th=[15664], 99.50th=[15795], 99.90th=[16188], 99.95th=[16712],

| 99.99th=[21890]

bw ( MiB/s): min= 1208, max= 1464, per=100.00%, avg=1447.47, stdev=23.87, samples=120

iops : min= 302, max= 366, avg=361.87, stdev= 5.97, samples=120

lat (msec) : 4=0.01%, 10=81.19%, 20=18.79%, 50=0.02%

cpu : usr=32.17%, sys=6.00%, ctx=22411, majf=0, minf=21

IO depths : 1=0.1%, 2=0.1%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,21712,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=4

Run status group 0 (all jobs):

WRITE: bw=1447MiB/s (1518MB/s), 1447MiB/s-1447MiB/s (1518MB/s-1518MB/s), io=84.8GiB (91.1GB), run=60008-60008msec

Disk stats (read/write):

nvme0n1: ios=0/346708, sectors=0/177503496, merge=0/5429, ticks=0/3018122, in_queue=3018174, util=99.95%

root@j784s4-evm:~# lspci -vv

0000:00:00.0 PCI bridge: Texas Instruments Device b012 (prog-if 00 [Normal decode])

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 538

Bus: primary=00, secondary=01, subordinate=01, sec-latency=0

I/O behind bridge: [disabled] [32-bit]

Memory behind bridge: 10100000-101fffff [size=1M] [32-bit]

Prefetchable memory behind bridge: [disabled] [64-bit]

Secondary status: 66MHz- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- <SERR- <PERR-

BridgeCtl: Parity- SERR+ NoISA- VGA- VGA16- MAbort- >Reset- FastB2B-

PriDiscTmr- SecDiscTmr- DiscTmrStat- DiscTmrSERREn-

Capabilities: [80] Power Management version 3

Flags: PMEClk- DSI- D1+ D2- AuxCurrent=0mA PME(D0+,D1+,D2-,D3hot+,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [90] MSI: Enable+ Count=1/1 Maskable+ 64bit+

Address: 0000000001000000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [b0] MSI-X: Enable- Count=1 Masked-

Vector table: BAR=0 offset=00000000

PBA: BAR=0 offset=00000008

Capabilities: [c0] Express (v2) Root Port (Slot+), IntMsgNum 0

DevCap: MaxPayload 256 bytes, PhantFunc 0

ExtTag- RBE+

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <8us

ClockPM- Surprise- LLActRep- BwNot+ ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, LnkDisable- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x4

TrErr- Train- SlotClk- DLActive- BWMgmt- ABWMgmt-

SltCap: AttnBtn- PwrCtrl- MRL- AttnInd- PwrInd- HotPlug- Surprise-

Slot #0, PowerLimit 0W; Interlock- NoCompl-

SltCtl: Enable: AttnBtn- PwrFlt- MRL- PresDet- CmdCplt- HPIrq- LinkChg-

Control: AttnInd Off, PwrInd Off, Power+ Interlock-

SltSta: Status: AttnBtn- PowerFlt- MRL+ CmdCplt- PresDet- Interlock-

Changed: MRL- PresDet- LinkState-

RootCap: CRSVisible-

RootCtl: ErrCorrectable- ErrNon-Fatal- ErrFatal- PMEIntEna+ CRSVisible-

RootSta: PME ReqID 0000, PMEStatus- PMEPending-

DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp+ 10BitTagReq- OBFF Via message, ExtFmt+ EETLPPrefix+, MaxEETLPPrefixes 1

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- LN System CLS Not Supported, TPHComp- ExtTPHComp- ARIFwd+

AtomicOpsCap: Routing- 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- ARIFwd-

AtomicOpsCtl: ReqEn- EgressBlck-

IDOReq- IDOCompl- LTR+ EmergencyPowerReductionReq-

10BitTagReq- OBFF Disabled, EETLPPrefixBlk-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

RootCmd: CERptEn+ NFERptEn+ FERptEn+

RootSta: CERcvd- MultCERcvd- UERcvd- MultUERcvd-

FirstFatal- NonFatalMsg- FatalMsg- IntMsgNum 0

ErrorSrc: ERR_COR: 0000 ERR_FATAL/NONFATAL: 0000

Capabilities: [150 v1] Device Serial Number 00-00-00-00-00-00-00-00

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [4c0 v1] Virtual Channel

Caps: LPEVC=0 RefClk=100ns PATEntryBits=1

Arb: Fixed- WRR32- WRR64- WRR128-

Ctrl: ArbSelect=Fixed

Status: InProgress-

VC0: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable+ ID=0 ArbSelect=Fixed TC/VC=ff

Status: NegoPending- InProgress-

VC1: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=1 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC2: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=2 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC3: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=3 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

Capabilities: [5c0 v1] Address Translation Service (ATS)

ATSCap: Invalidate Queue Depth: 01

ATSCtl: Enable-, Smallest Translation Unit: 00

Capabilities: [640 v1] Page Request Interface (PRI)

PRICtl: Enable- Reset-

PRISta: RF- UPRGI- Stopped+ PASID+

Page Request Capacity: 00000001, Page Request Allocation: 00000000

Capabilities: [900 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=255us PortTPowerOnTime=26us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=255us LTR1.2_Threshold=481280ns

L1SubCtl2: T_PwrOn=220us

Kernel driver in use: pcieport

0000:01:00.0 Non-Volatile memory controller: Phison Electronics Corporation PS5013-E13 PCIe3 NVMe Controller (DRAM-less) (rev 01) (prog-if 02 [NVM Express])

Subsystem: Phison Electronics Corporation PS5013-E13 PCIe3 NVMe Controller (DRAM-less)

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 0

Region 0: Memory at 10100000 (64-bit, non-prefetchable) [size=16K]

Capabilities: [80] Express (v2) Endpoint, IntMsgNum 0

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 unlimited

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0W

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #1, Speed 8GT/s, Width x4, ASPM L1, Exit Latency L1 unlimited

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, LnkDisable- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x4

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range ABCD, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt+ EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis-

AtomicOpsCtl: ReqEn-

IDOReq- IDOCompl- LTR+ EmergencyPowerReductionReq-

10BitTagReq- OBFF Disabled, EETLPPrefixBlk-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [d0] MSI-X: Enable+ Count=9 Masked-

Vector table: BAR=0 offset=00002000

PBA: BAR=0 offset=00003000

Capabilities: [e0] MSI: Enable- Count=1/8 Maskable+ 64bit+

Address: 0000000000000000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [f8] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [100 v1] Latency Tolerance Reporting

Max snoop latency: 0ns

Max no snoop latency: 0ns

Capabilities: [110 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=10us PortTPowerOnTime=220us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=481280ns

L1SubCtl2: T_PwrOn=220us

Capabilities: [200 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES- TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap- ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Kernel driver in use: nvme

Kernel modules: nvme

0001:00:00.0 PCI bridge: Texas Instruments Device b012 (prog-if 00 [Normal decode])

Control: I/O- Mem- BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 540

Bus: primary=00, secondary=01, subordinate=01, sec-latency=0

I/O behind bridge: [disabled] [32-bit]

Memory behind bridge: [disabled] [32-bit]

Prefetchable memory behind bridge: [disabled] [64-bit]

Secondary status: 66MHz- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- <SERR- <PERR-

BridgeCtl: Parity- SERR+ NoISA- VGA- VGA16- MAbort- >Reset- FastB2B-

PriDiscTmr- SecDiscTmr- DiscTmrStat- DiscTmrSERREn-

Capabilities: [80] Power Management version 3

Flags: PMEClk- DSI- D1+ D2- AuxCurrent=0mA PME(D0+,D1+,D2-,D3hot+,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [90] MSI: Enable+ Count=1/1 Maskable+ 64bit+

Address: 0000000001040000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [b0] MSI-X: Enable- Count=1 Masked-

Vector table: BAR=0 offset=00000000

PBA: BAR=0 offset=00000008

Capabilities: [c0] Express (v2) Root Port (Slot+), IntMsgNum 0

DevCap: MaxPayload 256 bytes, PhantFunc 0

ExtTag- RBE+

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x2, ASPM L1, Exit Latency L1 <8us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, LnkDisable- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 2.5GT/s, Width x4 (overdriven)

TrErr- Train- SlotClk- DLActive- BWMgmt- ABWMgmt-

SltCap: AttnBtn- PwrCtrl- MRL- AttnInd- PwrInd- HotPlug- Surprise-

Slot #0, PowerLimit 0W; Interlock- NoCompl-

SltCtl: Enable: AttnBtn- PwrFlt- MRL- PresDet- CmdCplt- HPIrq- LinkChg-

Control: AttnInd Off, PwrInd Off, Power+ Interlock-

SltSta: Status: AttnBtn- PowerFlt- MRL+ CmdCplt- PresDet- Interlock-

Changed: MRL- PresDet- LinkState-

RootCap: CRSVisible-

RootCtl: ErrCorrectable- ErrNon-Fatal- ErrFatal- PMEIntEna+ CRSVisible-

RootSta: PME ReqID 0000, PMEStatus- PMEPending-

DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp+ 10BitTagReq- OBFF Via message, ExtFmt+ EETLPPrefix+, MaxEETLPPrefixes 1

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- LN System CLS Not Supported, TPHComp- ExtTPHComp- ARIFwd-

AtomicOpsCap: Routing- 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- ARIFwd-

AtomicOpsCtl: ReqEn- EgressBlck-

IDOReq- IDOCompl- LTR+ EmergencyPowerReductionReq-

10BitTagReq- OBFF Disabled, EETLPPrefixBlk-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete- EqualizationPhase1-

EqualizationPhase2- EqualizationPhase3- LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

RootCmd: CERptEn+ NFERptEn+ FERptEn+

RootSta: CERcvd- MultCERcvd- UERcvd- MultUERcvd-

FirstFatal- NonFatalMsg- FatalMsg- IntMsgNum 0

ErrorSrc: ERR_COR: 0000 ERR_FATAL/NONFATAL: 0000

Capabilities: [140 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 1

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [150 v1] Device Serial Number 00-00-00-00-00-00-00-00

Capabilities: [160 v1] Power Budgeting <?>

Capabilities: [1b8 v1] Latency Tolerance Reporting

Max snoop latency: 0ns

Max no snoop latency: 0ns

Capabilities: [1c0 v1] Dynamic Power Allocation <?>

Capabilities: [200 v1] Single Root I/O Virtualization (SR-IOV)

IOVCap: Migration- 10BitTagReq- IntMsgNum 0

IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy- 10BitTagReq-

IOVSta: Migration-

Initial VFs: 4, Total VFs: 4, Number of VFs: 0, Function Dependency Link: 00

VF offset: 6, stride: 1, Device ID: 0100

Supported Page Size: 00000553, System Page Size: 00000001

Region 0: Memory at 0000000018400000 (64-bit, non-prefetchable)

VF Migration: offset: 00000000, BIR: 0

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [400 v1] Vendor Specific Information: ID=0001 Rev=1 Len=010 <?>

Capabilities: [440 v1] Process Address Space ID (PASID)

PASIDCap: Exec+ Priv+, Max PASID Width: 14

PASIDCtl: Enable+ Exec+ Priv+

Capabilities: [4c0 v1] Virtual Channel

Caps: LPEVC=0 RefClk=100ns PATEntryBits=1

Arb: Fixed- WRR32- WRR64- WRR128-

Ctrl: ArbSelect=Fixed

Status: InProgress-

VC0: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable+ ID=0 ArbSelect=Fixed TC/VC=ff

Status: NegoPending- InProgress-

VC1: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=1 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC2: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=2 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

VC3: Caps: PATOffset=00 MaxTimeSlots=1 RejSnoopTrans-

Arb: Fixed- WRR32- WRR64- WRR128- TWRR128- WRR256-

Ctrl: Enable- ID=3 ArbSelect=Fixed TC/VC=00

Status: NegoPending- InProgress-

Capabilities: [5c0 v1] Address Translation Service (ATS)

ATSCap: Invalidate Queue Depth: 01

ATSCtl: Enable-, Smallest Translation Unit: 00

Capabilities: [640 v1] Page Request Interface (PRI)

PRICtl: Enable- Reset-

PRISta: RF- UPRGI- Stopped+ PASID+

Page Request Capacity: 00000001, Page Request Allocation: 00000000

Capabilities: [900 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=255us PortTPowerOnTime=26us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=0ns

L1SubCtl2: T_PwrOn=10us

Capabilities: [a20 v1] Precision Time Measurement

PTMCap: Requester+ Responder- Root-

PTMClockGranularity: Unimplemented

PTMControl: Enabled- RootSelected-

PTMEffectiveGranularity: Unknown

Kernel driver in use: pcieport

root@j784s4-evm:~#

Let me check with the broader team whether they have seen higher transfer speeds.

Regards,

Takuma

Hi,

I understand the discussion moved to e-mail after this.

However, I encourage you to post an update or summary here.

It will help the wider audience follow the discussion.

Regards,

Ashwani

Hi Ashwani,

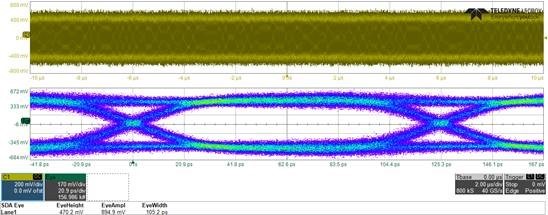

I will update the thread once I have better information. The current suspicion is a bottleneck caused by the number of "credits" available for transmitting. A similar issue was seen with the CPSW switch, which also shares the SerDes PHY with PCIe.
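To illustrate why a credit shortage can cap throughput below the link rate, here is a minimal bandwidth-delay sketch. It assumes posted writes, the standard PCIe flow-control units (one header credit per TLP, one data credit per 16 bytes of payload), and a fixed round-trip time for credits to be returned. The credit counts and the 2 µs round trip are hypothetical values chosen for illustration; they are not TDA4VH or CPSW measurements.

```python
import math

# Assumption: one PCIe data flow-control credit covers 4 DW (16 bytes).
DATA_CREDIT_BYTES = 16


def credit_limited_bw(header_credits, data_credits, payload_bytes,
                      credit_rtt_s, link_bytes_per_s):
    """Upper bound on posted-write throughput when the transmitter must
    wait for credits to come back (bandwidth-delay-product model)."""
    # Each posted write TLP uses 1 header credit plus ceil(payload / 16) data credits.
    data_per_tlp = math.ceil(payload_bytes / DATA_CREDIT_BYTES)
    # Whichever credit type runs out first limits the TLPs in flight.
    tlps_in_flight = min(header_credits, data_credits // data_per_tlp)
    bytes_in_flight = tlps_in_flight * payload_bytes
    # Credits can never push throughput above the raw link rate.
    return min(link_bytes_per_s, bytes_in_flight / credit_rtt_s)


# Hypothetical values, for illustration only.
LINK = 3.94e9  # approx. Gen3 x4 after 128b/130b encoding, bytes/s
print(credit_limited_bw(32, 256, 128, 2e-6, LINK) / 1e9)    # credit-bound case
print(credit_limited_bw(128, 2048, 128, 2e-6, LINK) / 1e9)  # link-bound case
```

With only 4 KiB of payload credits in flight and a 2 µs credit round trip, the model caps writes at about 2 GB/s even though the link could carry nearly twice that; with more credits, the link rate becomes the limit again.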

Regards,

Takuma

Hi Ashwani,

Apologies for the delayed response. I have a couple of updates. In short, I have confirmed logs showing 2.3GB/s TX from the SoC RC to an SSD EP.

This is achieved with U-Boot args that optimize the MPS (Maximum Payload Size) and MRRS (Maximum Read Request Size) of the PCIe connection. For comparison, below are logs taken without those U-Boot args, which show around 2.3GB/s RX and 1.8GB/s TX:

root@am69-sk:~# lspci -vvv -s 0000:01:00.0

0000:01:00.0 Non-Volatile memory controller: Samsung Electronics Co Ltd NVMe SSD Controller SM981/PM981/PM983 (prog-if 02 [NVM Express])

Subsystem: Samsung Electronics Co Ltd SSD 970 EVO/PRO

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 0

Region 0: Memory at 10100000 (64-bit, non-prefetchable) [size=16K]

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [50] MSI: Enable- Count=1/1 Maskable- 64bit+

Address: 0000000000000000 Data: 0000

Capabilities: [70] Express (v2) Endpoint, IntMsgNum 0

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 unlimited

ExtTag- AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0W

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L1, Exit Latency L1 <64us

ClockPM+ Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, LnkDisable- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x4

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range ABCD, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt- EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis-

AtomicOpsCtl: ReqEn-

IDOReq- IDOCompl- LTR+ EmergencyPowerReductionReq-

10BitTagReq- OBFF Disabled, EETLPPrefixBlk-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [b0] MSI-X: Enable+ Count=33 Masked-

Vector table: BAR=0 offset=00003000

PBA: BAR=0 offset=00002000

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap+ MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [148 v1] Device Serial Number 00-00-00-00-00-00-00-00

Capabilities: [158 v1] Power Budgeting <?>

Capabilities: [168 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [188 v1] Latency Tolerance Reporting

Max snoop latency: 0ns

Max no snoop latency: 0ns

Capabilities: [190 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=10us PortTPowerOnTime=10us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=287744ns

L1SubCtl2: T_PwrOn=26us

Kernel driver in use: nvme

Kernel modules: nvme
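In the lspci output above, the SSD advertises `MaxPayload 256 bytes` in DevCap but is configured for `MaxPayload 128 bytes, MaxReadReq 512 bytes` in DevCtl. The sketch below pulls those fields out of `lspci -vvv` text and estimates how much of a Gen3 x4 link can carry payload at each MPS. The roughly 24-byte per-TLP overhead (framing, sequence number, a 4DW header and LCRC) is an approximation, and DLLPs, ECRC and read-completion effects are ignored, so treat the results as rough ceilings rather than expected throughput.

```python
import re

# Assumption: ~24 bytes of overhead per TLP at 8 GT/s (framing + sequence number,
# 4DW header, LCRC). DLLPs and ECRC are ignored.
TLP_OVERHEAD_BYTES = 24


def parse_mps_mrrs(lspci_text):
    """Extract the supported MPS (DevCap) and the configured MPS/MRRS (DevCtl)
    from `lspci -vvv` output for a single device."""
    cap = re.search(r"DevCap:\s+MaxPayload (\d+) bytes", lspci_text)
    ctl = re.search(r"MaxPayload (\d+) bytes, MaxReadReq (\d+) bytes", lspci_text)
    if cap is None or ctl is None:
        raise ValueError("MaxPayload/MaxReadReq fields not found")
    return {"mps_cap": int(cap.group(1)),
            "mps": int(ctl.group(1)),
            "mrrs": int(ctl.group(2))}


def gen3_link_bytes_per_s(lanes):
    """Raw Gen3 (8 GT/s) rate per direction after 128b/130b encoding."""
    return 8e9 * lanes * (128 / 130) / 8


def payload_efficiency(mps):
    """Fraction of link bytes carrying payload when every TLP is MPS-sized."""
    return mps / (mps + TLP_OVERHEAD_BYTES)


# DevCap/DevCtl lines copied from the lspci output above.
sample = """DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 unlimited
MaxPayload 128 bytes, MaxReadReq 512 bytes"""

info = parse_mps_mrrs(sample)
raw = gen3_link_bytes_per_s(4)
for mps in (info["mps"], info["mps_cap"]):
    print(f"MPS {mps}: ~{raw * payload_efficiency(mps) / 1e9:.2f} GB/s ceiling")
```

Under these assumptions, moving from MPS 128 to 256 raises the payload ceiling from about 3.32 GB/s to about 3.60 GB/s per direction, which is one reason MPS tuning matters on an x4 link.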

root@am69-sk:~# fio --name=/run/media/ssd-nvme0n1p1/test-pcie-1 --size=10GB --ioengine=libaio --iodepth=4 --numjobs=1 --direct=1 --runtime=60 --time_based --bs=4M --rw=read

/run/media/ssd-nvme0n1p1/test-pcie-1: (g=0): rw=read, bs=(R) 4096KiB-4096KiB, (W) 4096KiB-4096KiB, (T) 4096KiB-4096KiB, ioengine=libaio, iodepth=4

fio-3.36-117-gb2403

Starting 1 process

Jobs: 1 (f=1): [R(1)][100.0%][r=2258MiB/s][r=564 IOPS][eta 00m:00s]

/run/media/ssd-nvme0n1p1/test-pcie-1: (groupid=0, jobs=1): err= 0: pid=1528: Wed Jan 8 19:28:37 2025

read: IOPS=564, BW=2259MiB/s (2368MB/s)(132GiB/60007msec)

slat (usec): min=29, max=2169, avg=57.10, stdev=30.14

clat (usec): min=2212, max=12089, avg=7024.42, stdev=75.77

lat (usec): min=3814, max=12121, avg=7081.51, stdev=65.50

clat percentiles (usec):

| 1.00th=[ 6980], 5.00th=[ 6980], 10.00th=[ 6980], 20.00th=[ 6980],

| 30.00th=[ 6980], 40.00th=[ 7046], 50.00th=[ 7046], 60.00th=[ 7046],

| 70.00th=[ 7046], 80.00th=[ 7046], 90.00th=[ 7046], 95.00th=[ 7046],

| 99.00th=[ 7046], 99.50th=[ 7046], 99.90th=[ 7111], 99.95th=[ 7111],

| 99.99th=[11994]

bw ( MiB/s): min= 2168, max= 2264, per=100.00%, avg=2258.87, stdev= 9.61, samples=120

iops : min= 542, max= 566, avg=564.72, stdev= 2.40, samples=120

lat (msec) : 4=0.01%, 10=99.98%, 20=0.01%

cpu : usr=0.25%, sys=3.30%, ctx=33913, majf=0, minf=538

IO depths : 1=0.1%, 2=0.1%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=33883,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=4

Run status group 0 (all jobs):

READ: bw=2259MiB/s (2368MB/s), 2259MiB/s-2259MiB/s (2368MB/s-2368MB/s), io=132GiB (142GB), run=60007-60007msec

Disk stats (read/write):

nvme0n1: ios=135282/4, sectors=277056008/40, merge=0/1, ticks=882153/39, in_queue=882203, util=96.43%
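As a quick consistency check on the read run, Little's law relates fio's settings to its result: with `--iodepth=4` and `--bs=4M`, about 16 MiB is in flight, and dividing that by the average total latency (`lat` avg = 7081.51 usec) should reproduce the reported bandwidth. This is a minimal sketch of that arithmetic only. Whether a deeper queue would raise throughput cannot be inferred from it and would need to be measured.

```python
MIB = 1024 * 1024


def littles_law_mib_s(iodepth, block_bytes, avg_latency_s):
    """Throughput implied by Little's law: bytes in flight / average latency."""
    return iodepth * block_bytes / avg_latency_s / MIB


# Values from the fio read run above: iodepth=4, bs=4M, lat avg=7081.51 usec.
print(f"{littles_law_mib_s(4, 4 * MIB, 7081.51e-6):.0f} MiB/s")
```

This prints `2259 MiB/s`, matching fio's `BW=2259MiB/s`. The same check applied to the write run's `lat` average gives a value within about 0.1% of its reported bandwidth.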

root@am69-sk:~# fio --name=/run/media/ssd-nvme0n1p1/test-pcie-1 --size=10GB --ioengine=libaio --iodepth=4 --numjobs=1 --direct=1 --runtime=60 --time_based --bs=4M --rw=write

/run/media/ssd-nvme0n1p1/test-pcie-1: (g=0): rw=write, bs=(R) 4096KiB-4096KiB, (W) 4096KiB-4096KiB, (T) 4096KiB-4096KiB, ioengine=libaio, iodepth=4

fio-3.36-117-gb2403

Starting 1 process

Jobs: 1 (f=1): [W(1)][100.0%][w=1754MiB/s][w=438 IOPS][eta 00m:00s]

/run/media/ssd-nvme0n1p1/test-pcie-1: (groupid=0, jobs=1): err= 0: pid=1663: Wed Jan 8 19:29:39 2025

write: IOPS=438, BW=1754MiB/s (1839MB/s)(103GiB/60007msec); 0 zone resets

slat (usec): min=551, max=6895, avg=978.68, stdev=173.26

clat (usec): min=4589, max=19071, avg=8134.22, stdev=234.26

lat (usec): min=6818, max=20353, avg=9112.90, stdev=175.66

clat percentiles (usec):

| 1.00th=[ 7832], 5.00th=[ 7898], 10.00th=[ 7963], 20.00th=[ 8029],

| 30.00th=[ 8029], 40.00th=[ 8094], 50.00th=[ 8094], 60.00th=[ 8094],

| 70.00th=[ 8160], 80.00th=[ 8160], 90.00th=[ 8455], 95.00th=[ 8586],

| 99.00th=[ 8586], 99.50th=[ 8586], 99.90th=[10552], 99.95th=[11469],

| 99.99th=[16712]

bw ( MiB/s): min= 1704, max= 1768, per=100.00%, avg=1756.50, stdev= 6.72, samples=119

iops : min= 426, max= 442, avg=439.13, stdev= 1.68, samples=119

lat (msec) : 10=99.86%, 20=0.14%

cpu : usr=38.38%, sys=4.72%, ctx=28221, majf=0, minf=19

IO depths : 1=0.1%, 2=0.1%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,26314,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=4

Run status group 0 (all jobs):

WRITE: bw=1754MiB/s (1839MB/s), 1754MiB/s-1754MiB/s (1839MB/s-1839MB/s), io=103GiB (110GB), run=60007-60007msec

Disk stats (read/write):

nvme0n1: ios=0/105067, sectors=0/215132936, merge=0/11, ticks=0/786321, in_queue=786379, util=83.93%
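fio reports bandwidth in both binary MiB/s and decimal MB/s. The "2.3GB/s RX" and "1.8GB/s TX" figures quoted in this thread correspond to the decimal values. The sketch below converts the two results and expresses them as a share of the raw Gen3 x4 rate after 128b/130b encoding. That raw rate ignores TLP and DLLP overhead, so the achievable ceiling is lower than the denominator used here.

```python
MIB = 1024 * 1024


def mib_s_to_mb_s(mib_s):
    """Convert fio's binary MiB/s into decimal MB/s."""
    return mib_s * MIB / 1e6


def gen3_raw_mb_s(lanes):
    """Raw Gen3 (8 GT/s) rate per direction after 128b/130b encoding, in MB/s."""
    return 8e9 * lanes * (128 / 130) / 8 / 1e6


# BW figures from the fio read and write runs above.
for label, mib_s in (("read", 2259), ("write", 1754)):
    mb_s = mib_s_to_mb_s(mib_s)
    share = 100 * mb_s / gen3_raw_mb_s(4)
    print(f"{label}: {mb_s:.1f} MB/s, {share:.1f}% of the Gen3 x4 raw rate")
```

The read run reaches about 60% of the raw rate and the write run about 47%, which leaves a noticeable gap for the MPS/MRRS tuning discussed above to close.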

root@am69-sk:~# uname -a