Other Parts Discussed in Thread: 66AK2E05

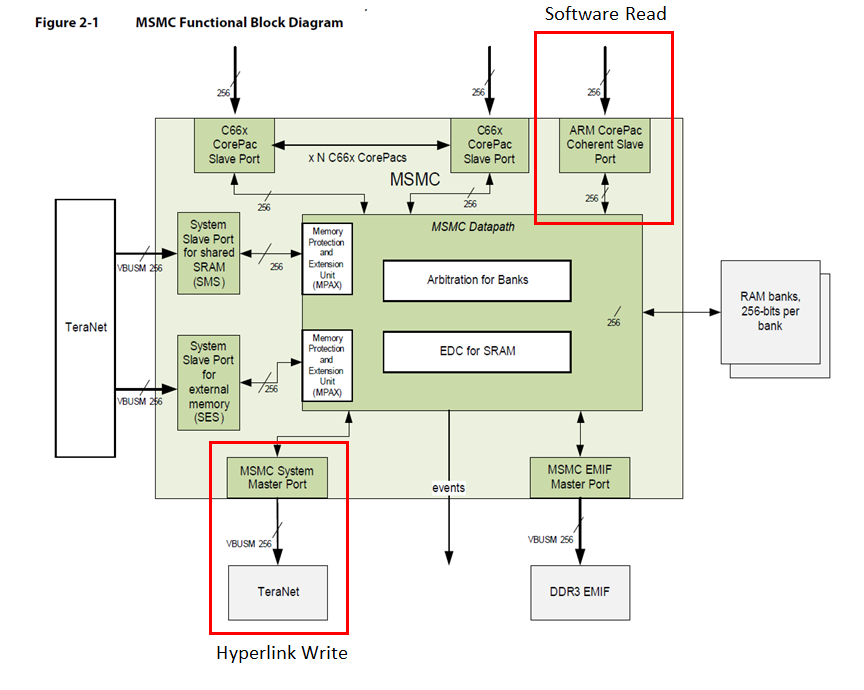

I was able to get the Hyperlink memory-mapped example working between two 66AK2E05 devices on the A15 side. My concern now is concurrency, and I have found almost nothing about it on the forum.

How can I safely lock access to the data buffer when reading and writing through Hyperlink?

My use case is the following: one 66AK2E05 remotely writes a small set of integers (fewer than 10) to the other 66AK2E05 through Hyperlink. On the other side, the 66AK2E05 only reads the incoming data.

As a basis, I am using the CPU block transfer from the memory-mapped example of the MCSDK.

As far as I know, I can use either of the following two solutions:

- Hyperlink interrupt packet

- hardware semaphore

To be exhaustive, I would like to know whether there are other possibilities and which would be the most efficient. For example:

- Since it is a CPU block transfer, can I treat the data buffer as an atomic structure? Can I assume that there will never be a concurrent read and write?

- Is it possible to poll a Hyperlink register to know whether a transfer is pending (is there any flow control register)?
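
To make the atomicity question concrete, here is a minimal sketch of one software-only alternative: a sequence counter placed next to the data, so the reader can detect a torn update and retry. It is plain C simulated in local memory, not MCSDK code. The names `shared_buf_t`, `buf_write` and `buf_try_read` are hypothetical, and the scheme relies on an assumption I have not been able to confirm: that the writer's stores arrive in the remote memory in program order. The C11 fences only order accesses on the local CPU and say nothing about ordering across the link.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

#define MAX_WORDS 10u

/* Hypothetical layout of the shared buffer. On the real system it would
 * live in the memory window mapped through Hyperlink. */
typedef struct {
    volatile uint32_t seq;             /* odd while an update is in progress */
    volatile uint32_t data[MAX_WORDS]; /* payload: fewer than 10 integers */
} shared_buf_t;

/* Writer side. ASSUMPTION: stores reach the remote memory in program order. */
static void buf_write(shared_buf_t *b, const uint32_t *src, uint32_t count)
{
    uint32_t i;

    assert(count <= MAX_WORDS);

    b->seq = b->seq + 1u;                      /* counter becomes odd */
    atomic_thread_fence(memory_order_seq_cst); /* local ordering only */
    for (i = 0u; i < count; i++) {
        b->data[i] = src[i];
    }
    atomic_thread_fence(memory_order_seq_cst);
    b->seq = b->seq + 1u;                      /* counter becomes even again */
}

/* Reader side. Returns 1 if dst holds a consistent snapshot, or 0 if a
 * write was in progress or overlapped the copy (the caller should retry). */
static int buf_try_read(const shared_buf_t *b, uint32_t *dst, uint32_t count)
{
    uint32_t i;
    uint32_t before = b->seq;

    assert(count <= MAX_WORDS);

    if (before & 1u) {
        return 0;                              /* update in progress */
    }
    atomic_thread_fence(memory_order_seq_cst);
    for (i = 0u; i < count; i++) {
        dst[i] = b->data[i];
    }
    atomic_thread_fence(memory_order_seq_cst);
    return b->seq == before;                   /* unchanged means consistent */
}
```

The reader would call `buf_try_read` in a loop until it returns 1. If ordering across the link cannot be guaranteed, this scheme is not safe on its own, and the interrupt packet or hardware semaphore would still be needed.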