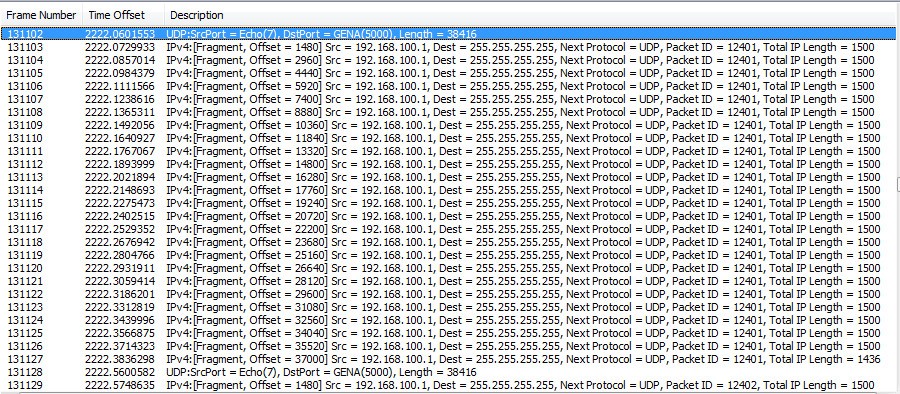

I'm sending the same 32 KB message over both TCP and UDP for comparison and looking at the timing in Microsoft Network Monitor. The TCP segments are all sent in about 15 ms total, while the UDP fragments take about 15 ms EACH, roughly a factor of 20 slower. Is there a setting I can adjust to send UDP fragments at a higher rate? Thanks!
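
For reference, here is a rough sketch of the arithmetic behind that factor. It assumes a 1500-byte Ethernet MTU and a 20-byte IPv4 header with no options, neither of which is confirmed by the capture, and `udp_fragment_count` is just an illustrative helper name.

```python
import math

def udp_fragment_count(payload_bytes, mtu=1500, ip_header=20, udp_header=8):
    """Estimate how many IPv4 fragments one UDP datagram is split into.

    Assumed values (not taken from the capture): 1500-byte MTU,
    20-byte IPv4 header without options, 8-byte UDP header.
    """
    # Fragment payloads must be a multiple of 8 bytes (except the last one).
    per_fragment = (mtu - ip_header) // 8 * 8
    # The UDP header travels inside the first fragment's payload.
    total = payload_bytes + udp_header
    return math.ceil(total / per_fragment)

fragments = udp_fragment_count(32 * 1024)
udp_ms = fragments * 15      # about 15 ms per UDP fragment, as observed
tcp_ms = 15                  # about 15 ms for the whole TCP transfer
print(fragments, udp_ms, udp_ms / tcp_ms)
```

Under those assumptions the datagram splits into a little over 20 fragments, which lines up with the "factor of 20" above.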

-- Carl