
I am trying to collect data to detect a human, with identifiable limbs, at a short range of 1-2 metres. I have been using the online estimator to tune chirp profiles for my use case. However, the noise cloud appears as one big cluster, and the detection and angle estimation results give only 1-2 points when an object is detected.

Can anyone recommend suitable chirp parameters for short-range detection at this distance?
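For a first pass at short-range parameters, it can help to compute what a candidate chirp implies before loading it. The sketch below uses the standard FMCW relations: range resolution is c/(2B), where B is the bandwidth swept while the ADC is sampling, and maximum range follows from the ADC sample rate and the chirp slope. It assumes complex (I/Q) ADC sampling, where the usable IF bandwidth is roughly the sample rate (the real IF filter leaves somewhat less). The function name and example values are hypothetical, not a TI-recommended profile.

```python
C = 3e8  # speed of light, m/s


def chirp_metrics(slope_mhz_per_us, adc_samples, sample_rate_ksps):
    """Return (sampled bandwidth in Hz, range resolution in m, max range in m)."""
    slope = slope_mhz_per_us * 1e12         # MHz/us -> Hz/s
    fs = sample_rate_ksps * 1e3             # ksps -> samples/s
    sampling_time = adc_samples / fs        # seconds the ADC is actually sampling
    bandwidth = slope * sampling_time       # bandwidth swept during sampling
    range_res = C / (2.0 * bandwidth)       # dR = c / (2B)
    # Assumes complex (I/Q) sampling, so the maximum IF frequency is ~fs.
    max_range = fs * C / (2.0 * slope)      # R_max = f_IF,max * c / (2S)
    return bandwidth, range_res, max_range


if __name__ == "__main__":
    # Hypothetical short-range candidate: 100 MHz/us, 64 samples at 2000 ksps.
    bw, dr, rmax = chirp_metrics(100, 64, 2000)
    print(f"bandwidth {bw / 1e9:.2f} GHz, resolution {dr * 100:.1f} cm, max range {rmax:.2f} m")
```

With these example values the chirp sweeps about 3.2 GHz while sampling, giving roughly 4.7 cm range bins and a maximum range of about 3 m. Under the complex-sampling assumption the maximum range is the number of ADC samples times the range resolution, so a low sample count is one way to avoid spending range bins far beyond 2 m.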

Is there also a way to limit the displayed distance range on the graphs?
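Whether the plots themselves can be limited depends on the visualizer in use. As a fallback, if the points are logged as Cartesian coordinates, they can be restricted to the region of interest after capture. A minimal sketch, assuming a hypothetical log format of (x, y, z) tuples in metres relative to the sensor:

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # hypothetical (x, y, z) in metres from the sensor


def limit_range(points: Iterable[Point], min_m: float = 0.0, max_m: float = 2.0) -> List[Point]:
    """Keep only points whose radial distance from the sensor lies in [min_m, max_m]."""
    kept = []
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)  # radial range of this point
        if min_m <= r <= max_m:
            kept.append((x, y, z))
    return kept


if __name__ == "__main__":
    cloud = [(0.5, 1.0, 0.0), (0.0, 3.0, 0.2), (0.1, 0.1, 0.1)]
    print(limit_range(cloud))  # the point at about 3 m is dropped
```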

Thanks for your help,

Hana

Hi,

You can check the TI Resource Explorer (TIREX) for example projects on people detection, motion detection, presence detection, etc., to get a starting point for the chirp parameters. You can always fine-tune them afterwards. I am not exactly sure what you mean by limb identification, but I assume you mean gesture recognition, which is also covered in the example projects on TIREX.

Thanks,

Pradipta.

Hi,

I have already gone through the available documentation. I cannot see any files that define the chirps used for this recognition. There is only prebuilt firmware, which only produces Doppler visuals.

My aim is to detect a human through a point cloud visual. The Doppler graphs in this demo are not applicable to my use case. Hence, I have been using the mmWave online estimator for starting chirp parameters and then manually changing the values when I get errors.

What I am asking for is guidance on better chirp parameters, as the estimator seems to give me chirp configurations that produce errors, and I have to alter them based on what I know about the software errors.

I am currently iterating through chirp profiles in an attempt to improve my point cloud enough to identify a human body from it. This has proven a tedious task, and I was hoping you might have some guidance on doing this more efficiently.
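Some of the iteration can be scripted: sweep a small grid of candidate chirps, discard those that break basic timing and bandwidth limits, and rank the rest by range resolution. The constraints below are assumptions, loosely modelled on the kind of checks a chirp configuration must pass (the ADC sampling window must end before the ramp ends, and slope × ramp end time must fit within the available RF band). The real device checks more (IF filter bandwidth, idle times, frame duty cycle, memory), and the ADC start time, margin, 4 GHz limit and default grid are hypothetical values.

```python
import itertools

C = 3e8               # speed of light, m/s
MAX_SWEEP_HZ = 4e9    # assumed usable RF sweep (a 4 GHz-wide band)
ADC_START_US = 6.0    # hypothetical ADC start time after the ramp begins
RAMP_MARGIN_US = 1.0  # hypothetical margin between end of sampling and ramp end


def evaluate(slope_mhz_us, adc_samples, fs_ksps):
    """Return derived metrics, or None if the chirp breaks the assumed constraints."""
    slope = slope_mhz_us * 1e12           # Hz/s
    fs = fs_ksps * 1e3                    # samples/s
    sampling_us = adc_samples / fs * 1e6  # ADC sampling window, us
    ramp_end_us = ADC_START_US + sampling_us + RAMP_MARGIN_US
    # Assumed constraint: the whole ramp, not just the sampled part, must fit in the band.
    if slope * ramp_end_us * 1e-6 > MAX_SWEEP_HZ:
        return None
    sampled_bw = slope * sampling_us * 1e-6
    return {
        "slope_mhz_us": slope_mhz_us,
        "adc_samples": adc_samples,
        "fs_ksps": fs_ksps,
        "ramp_end_us": ramp_end_us,
        "range_res_m": C / (2.0 * sampled_bw),
        "max_range_m": fs * C / (2.0 * slope),  # assumes complex (I/Q) sampling
    }


def sweep(slopes=(60, 80, 100), samples=(64, 96, 128), rates=(2000, 2500, 3000),
          min_range_m=2.0, max_range_m=4.0):
    """Keep chirps whose max range covers the target without reaching far beyond it,
    sorted from finest to coarsest range resolution."""
    results = []
    for s, n, fs in itertools.product(slopes, samples, rates):
        m = evaluate(s, n, fs)
        if m is not None and min_range_m <= m["max_range_m"] <= max_range_m:
            results.append(m)
    return sorted(results, key=lambda m: m["range_res_m"])


if __name__ == "__main__":
    for m in sweep():
        print(m)
```

With the default grid, three candidates survive; any of them should still be checked in the estimator before being loaded.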

Thanks,

Hana

Hi,

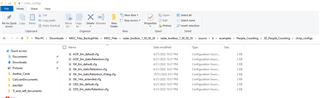

The TI Resource Explorer provides various labs for these purposes. The snapshot above is from the Radar Toolbox itself, for the people counting demo. Were you able to check this?

Thanks,

Pradipta.

Thank you. It seems I have a different version of the toolbox, so the files were located elsewhere. However, using the 6 m config, I have found that it is still too long-range for my use case, and I need a higher-resolution point cloud to identify a person at a maximum of 2 metres away.

To clarify, I would like to be able to identify the arms and legs of a person, not just see a moving clump of points. Do you have any guidance on this? I had a look at the programming chirp parameters guide. It defines the parameters in the radar API that can be configured, but it could use more explanation of the effects of changing them. I have tried decreasing the CFAR threshold, which increases the number of points I can see; however, the extra points do not appear around the arms and legs, but are mostly noise around the person I am trying to detect.
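The CFAR behaviour described above follows from how CFAR works. A cell-averaging CFAR (CA-CFAR, one common variant; whether a given demo uses exactly this form is not assumed here) compares each cell with a scale factor times the mean of nearby training cells, skipping the guard cells next to the cell under test. Lowering the scale admits weaker cells, but CFAR has no notion of which weak cells are limbs and which are noise or sidelobes, so it mostly adds clutter rather than resolving the body into parts. A 1-D sketch on a hypothetical power profile:

```python
from typing import List, Sequence


def ca_cfar(power: Sequence[float], guard: int, train: int, scale: float) -> List[int]:
    """Cell-averaging CFAR: return indices whose power exceeds scale * local noise mean."""
    detections = []
    n = len(power)
    for i in range(n):
        cells = []
        # Training cells on both sides, skipping `guard` cells next to the cell under test.
        for k in range(guard + 1, guard + train + 1):
            if i - k >= 0:
                cells.append(power[i - k])
            if i + k < n:
                cells.append(power[i + k])
        if not cells:
            continue
        noise = sum(cells) / len(cells)  # local noise estimate
        if power[i] > scale * noise:
            detections.append(i)
    return detections


if __name__ == "__main__":
    # Hypothetical range profile: strong return at 4, weak return at 8.
    profile = [1, 1, 1, 1, 10, 1, 1, 1, 3, 1, 1, 1]
    print(ca_cfar(profile, guard=1, train=2, scale=4.0))
    print(ca_cfar(profile, guard=1, train=2, scale=1.5))
```

Here the higher scale returns only cell 4, while the lower scale also returns the weaker cell 8. Separating limbs requires them to fall in distinct range, Doppler or angle bins first; a threshold change cannot create that separation.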

Do you have any advice to get around this?

I appreciate your help.

Hana

Hi Hana,

You can check the People Detection in an Elevator experiment, which is closest to your application requirements, to see its chirp configurations and fine-tune them.

People Detection In An Elevator

Beyond presence detection, what you are looking for is to identify the arms and legs of a person. This will require more advanced signal chain processing. We do not have a direct example or reference for this. I suggest reading more on gesture recognition and tracking algorithms to get started. You can refer to the documentation below for reference.

Thanks,

Pradipta.

Hi,

I have already looked into the config. Do you have any advice on fine-tuning it?

What signal chain processing does mmWave Studio use? Is there a way I can access it and alter it beyond changing the CFAR value?

I have read about gesture recognition and pattern recognition, and my understanding is that these gestures are recognised via preprogrammed rules applied to the detected movement, rather than by identifying the movements from the received point clouds. Is identifying them from the point clouds, without applying AI from the start, related to the algorithms the studio uses?

Which base algorithms does mmWave Studio use beneath the user controls?
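For reference, a typical FMCW radar point-cloud chain is: a range FFT over the ADC samples of each chirp, a Doppler FFT across chirps, detection on the range-Doppler map, then angle estimation across the receive antennas. The sketch below is a generic NumPy illustration of that chain, not the code inside mmWave Studio or the TI demo firmware. It uses a median-relative threshold in place of a real CFAR, assumes λ/2 antenna spacing, and `synthetic_cube` with its parameters is hypothetical test data.

```python
import numpy as np


def process_frame(cube, threshold_db=15.0, angle_bins=64):
    """Toy FMCW chain. cube: complex array shaped (chirps, rx_antennas, adc_samples).

    Returns a list of (range_bin, doppler_bin, angle_deg) points.
    """
    num_chirps, _, num_samples = cube.shape
    # 1) Range FFT along fast time (samples within a chirp), Hann-windowed.
    range_fft = np.fft.fft(cube * np.hanning(num_samples), axis=2)
    # 2) Doppler FFT along slow time (across chirps); shift so zero velocity is centred.
    doppler_fft = np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0)
    # 3) Non-coherent integration over antennas -> range-Doppler power map.
    power = np.sum(np.abs(doppler_fft) ** 2, axis=1)  # shape (chirps, samples)
    # 4) Detection: threshold relative to the median power (crude stand-in for CFAR).
    snr_db = 10.0 * np.log10(power / np.median(power))
    detections = np.argwhere(snr_db > threshold_db)
    # 5) Zero-padded angle FFT across antennas for each detected cell (lambda/2 spacing).
    points = []
    for d, r in detections:
        spectrum = np.fft.fftshift(np.fft.fft(doppler_fft[d, :, r], n=angle_bins))
        k = int(np.argmax(np.abs(spectrum))) - angle_bins // 2
        sin_theta = 2.0 * k / angle_bins
        points.append((int(r), int(d) - num_chirps // 2,
                       float(np.degrees(np.arcsin(sin_theta)))))
    return points


def synthetic_cube(range_bin, doppler_bin, angle_deg, chirps=32, rx=4,
                   samples=64, noise=0.01, seed=0):
    """Hypothetical single-target data cube for trying out process_frame."""
    rng = np.random.default_rng(seed)
    n = np.arange(samples)[None, None, :]
    c = np.arange(chirps)[:, None, None]
    a = np.arange(rx)[None, :, None]
    cube = (np.exp(2j * np.pi * range_bin * n / samples)                # beat tone
            * np.exp(2j * np.pi * doppler_bin * c / chirps)             # chirp-to-chirp phase
            * np.exp(1j * np.pi * np.sin(np.radians(angle_deg)) * a))   # antenna phase
    return cube + noise * (rng.standard_normal(cube.shape)
                           + 1j * rng.standard_normal(cube.shape))


if __name__ == "__main__":
    for point in process_frame(synthetic_cube(10, 3, 20.0)):
        print(point)
```

For a simulated target at range bin 10, Doppler bin 3 and 20°, the output includes a point at range bin 10, Doppler bin 3 and about 20°; the Hann window also produces detections in neighbouring range bins. With only 4 antennas the angle spectrum is broad (angular resolution is roughly 2/N radians for N virtual antennas), which is one reason a nearby person can collapse into a handful of points.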

Thanks,

Hana