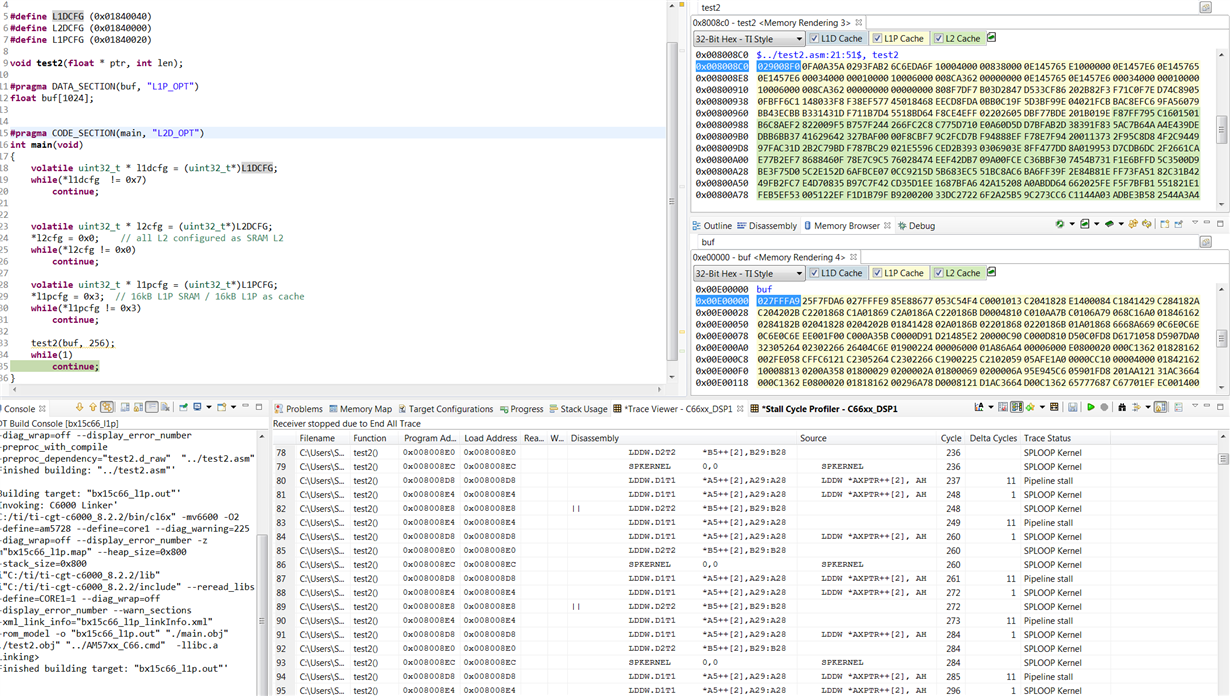

I am trying to implement a time-critical algorithm on the C66x that needs to process about 32 kB of data. The algorithm is relatively simple and clearly bound by the width of the data bus. Because other, more complex tasks also run in the background in the target environment, L1 memory has to be configured as cache only. L2 is configured as 128 kB of cache, with the remainder as SRAM. To better analyse the issue I am observing, I am using the following assembly code:

            SPLOOP  2
            LDDW    *AYPTR++[2], AH
    ||      LDDW    *BYPTR++[2], BH
            LDDW    *AYPTR++[2], AH
    ||      LDDW    *BYPTR++[2], BH
            SPKERNEL
            NOP     9

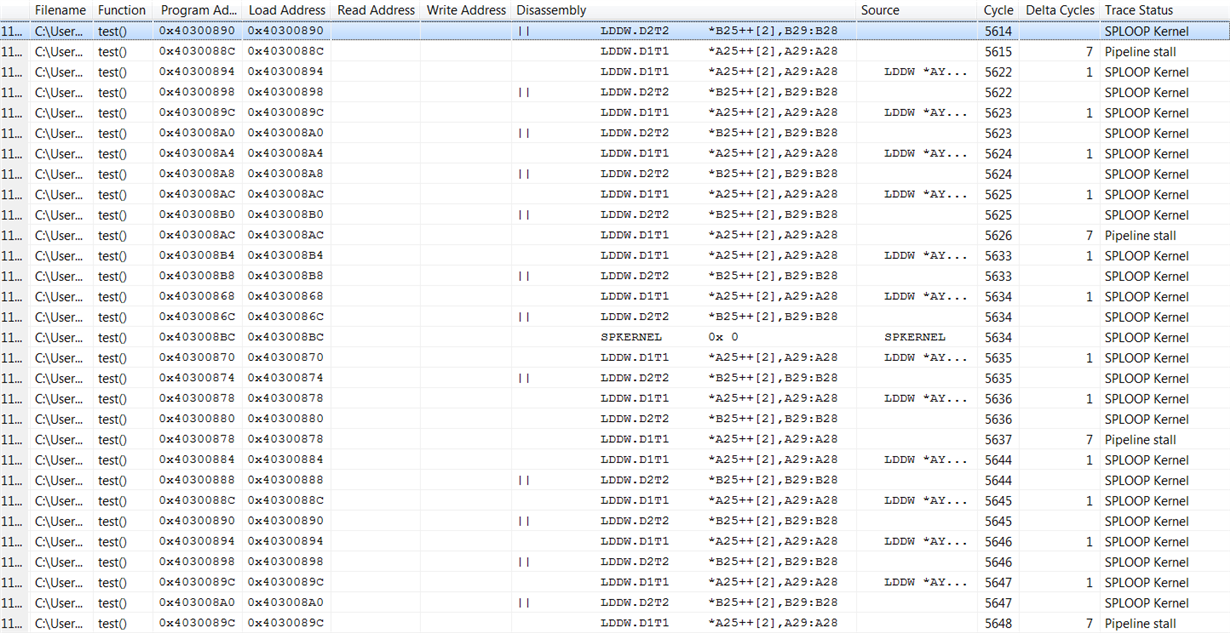

Before running this code, the entire contents of the L1 data cache are invalidated. The trace for the SPLOOP is below.

The data accessed in the loop is placed in L2 SRAM (BYPTR = 8 + AYPTR). It is easy to see that many pipeline stalls occur whenever the loop moves on to the next L1 cache line. The stalls actually take more time than the processing itself. Is there a way to improve performance for this kind of algorithm? I know about the touch function, but it gives mixed results in cases where not all of the data is evicted between consecutive runs. I also assume that to use IDMA I need to configure L1 as SRAM? What else can be done to reduce the number of cycles wasted on pipeline stalls?
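For reference, the kind of touch pass mentioned above can be sketched in portable C. This is only an illustration, assuming the 64-byte L1D line size of the C66x. The names `touch_buffer`, `lines_spanned` and `L1D_LINE_BYTES` are hypothetical and are not the TI library routine. The idea is to read one byte from every cache line of the buffer so that the lines are already allocated in L1D before the main loop runs.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumption: L1D cache line size on C66x is 64 bytes. */
#define L1D_LINE_BYTES 64u

/* Hypothetical helper: number of line-sized strides needed to cover len bytes. */
static size_t lines_spanned(size_t len)
{
    return (len + L1D_LINE_BYTES - 1u) / L1D_LINE_BYTES;
}

/*
 * Hypothetical helper: read one byte per cache line so that each line
 * is fetched into L1D. The pointer is volatile so the compiler cannot
 * drop the reads; the sum is returned only to give the reads a use.
 */
static uint32_t touch_buffer(const volatile uint8_t *buf, size_t len)
{
    uint32_t sum = 0;
    size_t i;

    for (i = 0; i < len; i += L1D_LINE_BYTES) {
        sum += buf[i];
    }
    /* Also read the last byte, in case buf is not line-aligned and the
       final line was not reached by the strided loop. */
    if (len > 0) {
        sum += buf[len - 1u];
    }
    return sum;
}
```

With these assumptions, a 32 kB buffer spans `lines_spanned(32 * 1024)` = 512 lines, so a cold run of the loop would see on the order of 512 L1D line fills. Whether a pass like this helps depends on how much of the buffer is still resident from the previous run.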