Part Number: PROCESSOR-SDK-DRA8X-TDA4X

Tool/software: WEBENCH® Design Tools

Hi,

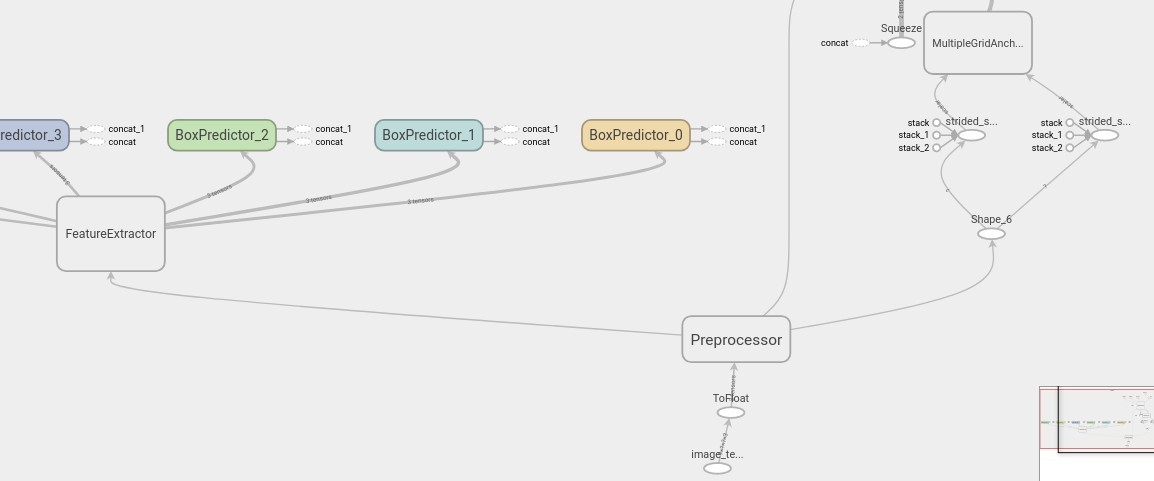

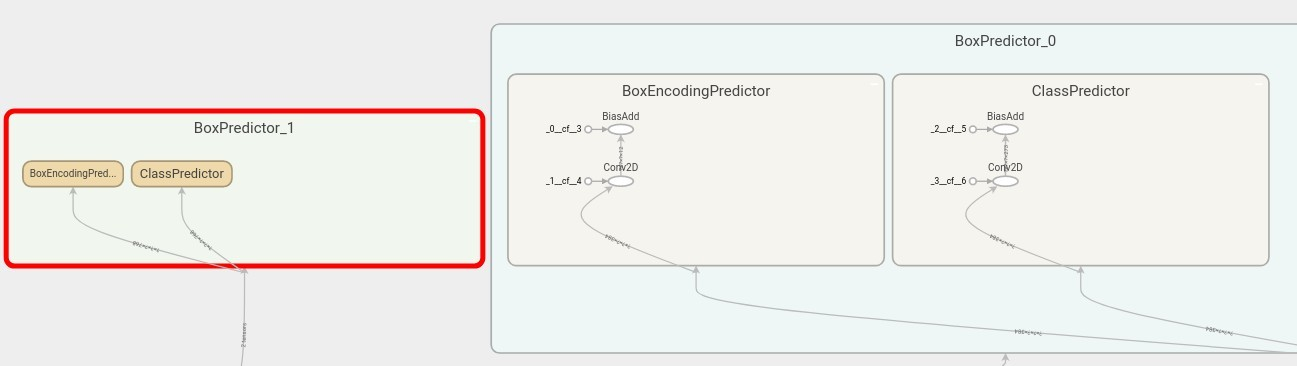

I am trying to convert our TensorFlow model, but the actual .bin TI model is not created.

I get the following error: DW Convolution with Depth multiplier > 1 is not suported now

We then tried to import the original TensorFlow model and found the following discrepancies:

- We downloaded the original model from your official site: ssd_mobilenet_v1_coco_2018_01_28, which has a depth multiplier of 1.

- Under the path ti_dl/testvecs/config/import/public/tensorflow/ we can't find any configuration file for this model.
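
For reference, here is a minimal sketch of how we understand the depth multiplier. It assumes the TensorFlow depthwise filter layout `[filter_height, filter_width, in_channels, channel_multiplier]`. The helper names and example shapes are hypothetical and are not taken from the TI_DL import tool or from the model above.

```python
def depth_multiplier(filter_shape):
    """Return the channel multiplier of a depthwise filter.

    Assumes the TensorFlow layout:
    [filter_height, filter_width, in_channels, channel_multiplier].
    """
    if len(filter_shape) != 4:
        raise ValueError("expected a 4-D depthwise filter shape")
    return filter_shape[3]


def depthwise_output_channels(filter_shape):
    """Output channels of a depthwise convolution: in_channels * multiplier."""
    _, _, in_channels, multiplier = filter_shape
    return in_channels * multiplier


# Hypothetical filter shapes, for illustration only.
shape_multiplier_1 = (3, 3, 32, 1)  # each input channel yields one output channel
shape_multiplier_2 = (3, 3, 32, 2)  # each input channel yields two output channels

for shape in (shape_multiplier_1, shape_multiplier_2):
    print(shape, depth_multiplier(shape), depthwise_output_channels(shape))
```

With a multiplier of 1 the layer keeps the same number of channels, which is the case we would expect the import tool to accept.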

When will depthwise convolution layers with a depth multiplier greater than or equal to 1 be supported by the TI_DL import tool?