Hi folks,

I am trying to do some simple, polled (not interrupt) write and read transfers using the EUSCI_B1 module on the MSP432 with DriverLib. However, I have run into behavior that I cannot explain, and hope that one of you might have some ideas to try.

I'm communicating with a TI TMP007 temperature sensor. To get a baseline and some ideas on communicating with the device, I purchased a module from Adafruit and connected it to an Arduino using their TMP007 library. I then connected the I2C lines to my Intronix LogicPort LA1034 Logic Analyzer, which has a built-in I2C decoder. The resulting capture from initialization is attached as ArduinoI2CStartup.PNG.

It's a bit of an eye test to show all of the initial data, but here is a summary. The TMP007 is at I2C address 0x40. There are 4 key transactions:

1) Configure the TMP007 by writing 0x1140 to configuration register 0x02. This results in a write of 0x40, 0x02, 0x11, 0x40.

2) Configure the status mask register 0x05 by writing 0xC000 to it. This results in a write of 0x40, 0x05, 0xC0, 0x00.

3) Select the device ID register 0x1F by writing that to the TMP007. This results in a write of 0x40, 0x1F.

4) Read the device ID, which should be 0x0078. This results in a read of 0x40, 0x00, 0x78.

All of this looks great on the Arduino. However, when I try to do the same using polled DriverLib methods, the transfers do not take place correctly, and I cannot figure out why.

My master configuration structure looks like this:

```c
masterConfig.selectClockSource = EUSCI_B_I2C_CLOCKSOURCE_SMCLK;
masterConfig.i2cClk = 48000000;
masterConfig.dataRate = EUSCI_B_I2C_SET_DATA_RATE_100KBPS;
masterConfig.byteCounterThreshold = 0;
masterConfig.autoSTOPGeneration = EUSCI_B_I2C_NO_AUTO_STOP;
```

So far so good. Then I waded through the DriverLib documentation on I2C master mode and came up with the following (very brute force, but I wanted to keep it simple):

```c
// 1. Configure TMP007
/* Making sure the last transaction has been completely sent out */
while (MAP_I2C_masterIsStopSent(EUSCI_B1_BASE) == EUSCI_B_I2C_SENDING_STOP);
MAP_I2C_setSlaveAddress(EUSCI_B1_BASE, 0x40);
MAP_I2C_setMode(EUSCI_B1_BASE, EUSCI_B_I2C_TRANSMIT_MODE);
MAP_I2C_masterSendMultiByteStart(EUSCI_B1_BASE, 0x02);
MAP_I2C_masterSendMultiByteNext(EUSCI_B1_BASE, 0x11);
MAP_I2C_masterSendMultiByteNext(EUSCI_B1_BASE, 0x40);
MAP_I2C_masterSendMultiByteStop(EUSCI_B1_BASE);
/* Making sure the last transaction has been completely sent out */
while (MAP_I2C_masterIsStopSent(EUSCI_B1_BASE) == EUSCI_B_I2C_SENDING_STOP);

// 2. Set status mask
MAP_I2C_masterSendMultiByteStart(EUSCI_B1_BASE, 0x05);
MAP_I2C_masterSendMultiByteNext(EUSCI_B1_BASE, 0xC0);
MAP_I2C_masterSendMultiByteNext(EUSCI_B1_BASE, 0x00);
MAP_I2C_masterSendMultiByteStop(EUSCI_B1_BASE);
/* Making sure the last transaction has been completely sent out */
while (MAP_I2C_masterIsStopSent(EUSCI_B1_BASE) == EUSCI_B_I2C_SENDING_STOP);

// 3. Point to device ID
MAP_I2C_masterSendSingleByte(EUSCI_B1_BASE, 0x1F);
/* Making sure the last transaction has been completely sent out */
while (MAP_I2C_masterIsStopSent(EUSCI_B1_BASE) == EUSCI_B_I2C_SENDING_STOP);

// 4. Read device ID
MAP_I2C_setMode(EUSCI_B1_BASE, EUSCI_B_I2C_RECEIVE_MODE);
MAP_I2C_masterReceiveStart(EUSCI_B1_BASE);
uint8_t msb = MAP_I2C_masterReceiveMultiByteNext(EUSCI_B1_BASE);
uint8_t lsb = MAP_I2C_masterReceiveMultiByteFinish(EUSCI_B1_BASE);
MAP_I2C_masterReceiveMultiByteStop(EUSCI_B1_BASE);
/* Making sure the last transaction has been completely sent out */
while (MAP_I2C_masterIsStopSent(EUSCI_B1_BASE) == EUSCI_B_I2C_SENDING_STOP);

uint16_t data = ((uint16_t)msb << 8) | lsb;
```

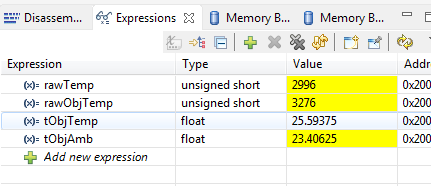

This _seems_ straightforward. It waits for any previous STOP to finish, selects 0x40 as the slave address, and selects master transmit mode. It then tries to write the same first three transactions as the Arduino example. Finally, it switches to master receive mode and tries to read the two bytes representing the device ID.

Here are the data captures from my logic analyzer (sorry, another eye test!).

The first two transfers look about the same as on the Arduino: 1) a write of 0x40, 0x02, 0x11, 0x40, then 2) a write of 0x40, 0xC0, 0x00. However, in the second transaction the register pointer 0x05, which should follow the slave address 0x40, is completely missing! The third transaction then appears to be a write of 0x40, 0xFF, and 0xFF is NOT the address of the device ID register, 0x1F.

This code seems so simple, but the DriverLib examples offer little help with polled I2C master operation. Instead, they use interrupts and put the CPU to sleep, which complicates the examples and obscures how the calls actually work.

Further, there don't seem to be any good examples that combine multibyte writes, a single-byte write, and a multibyte read, so it's very hard to tell whether I am using DriverLib as intended.

I know this is a lot of information, but I am truly stuck, and hope that someone in the community who knows the I2C interface and DriverLib can help me out.

Many thanks for your help!

Scott