Hi there

I have a neural network that I created using TensorFlow 2 and Keras, and I converted it to a TensorFlow Lite model using TensorFlow's built-in converter:

    import tensorflow as tf

    # Convert the model (`model` is the trained Keras model).
    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    tflite_model = converter.convert()

    # Save the TF Lite model.
    with tf.io.gfile.GFile('model.tflite', 'wb') as f:
        f.write(tflite_model)
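
Before pointing any tool at the saved file, it can help to confirm which format the file on disk actually is. The following is a minimal sketch using only the Python standard library, assuming the standard TensorFlow Lite layout: a `.tflite` file is a FlatBuffer whose schema declares the file identifier `TFL3`, and FlatBuffers store that identifier at byte offset 4. The helper names `has_tflite_identifier` and `looks_like_tflite` are hypothetical.

```python
# TensorFlow Lite models are FlatBuffers whose schema declares the file
# identifier "TFL3". FlatBuffers place that identifier at bytes 4..8,
# right after the 4-byte offset to the root table.
TFLITE_IDENTIFIER = b"TFL3"


def has_tflite_identifier(data: bytes) -> bool:
    """Return True if the buffer carries the TFLite FlatBuffer identifier."""
    return len(data) >= 8 and data[4:8] == TFLITE_IDENTIFIER


def looks_like_tflite(path: str) -> bool:
    """Read only the first 8 bytes of a file and check the identifier."""
    with open(path, "rb") as f:
        return has_tflite_identifier(f.read(8))
```

For example, `looks_like_tflite("model.tflite")` should return `True` for the converter's output, while a frozen TensorFlow GraphDef (`.pb`) is plain protobuf and would normally return `False`.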

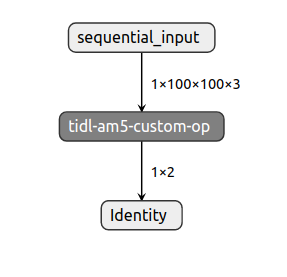

I wish to run my network on the AM5729 found on the BeagleBone AI board. I understand that ti-processor-sdk-linux-am57xx-06.03.00.106 does not yet support the BeagleBone AI. However, the Debian image distributed for the BeagleBone AI includes all the TIDL libraries.

Therefore, the only remaining step is to convert the TensorFlow Lite model into the TIDL format using the TIDL import tool. I assume this can be done with the following flow:

1. Download `ti-processor-sdk-linux-am57xx-06.03.00.106-linux-x86-Install.bin` from TI.

2. Run the installer on a Linux (Ubuntu) host.

3. Locate the `tidl_model_import.out` tool in the installed SDK.

4. Run `tidl_model_import.out` on an appropriate configuration file (below).
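
Step 3 of the flow above can be scripted rather than done by hand. This is a minimal sketch, assuming only that the executable is named `tidl_model_import.out` somewhere under the SDK install directory (in this post it sits under `linux-devkit/sysroots/x86_64-arago-linux/usr/bin`). The function `find_import_tool` is hypothetical.

```python
from pathlib import Path
from typing import Optional

# Executable name as used in this post.
TOOL_NAME = "tidl_model_import.out"


def find_import_tool(sdk_root: str, name: str = TOOL_NAME) -> Optional[Path]:
    """Search an installed SDK tree for the TIDL import executable.

    Returns the first match in sorted order (so the result is
    deterministic if several copies exist), or None if none is found.
    """
    root = Path(sdk_root)
    if not root.is_dir():
        return None
    matches = sorted(p for p in root.rglob(name) if p.is_file())
    return matches[0] if matches else None
```

For example, `find_import_tool("/home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106")` walks the whole install tree, which can take a few seconds on a full SDK.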

I invoke the tidl_model_import tool with the following command:

    "/home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/linux-devkit/sysroots/x86_64-arago-linux/usr/bin/tidl_model_import.out" model_config.txt

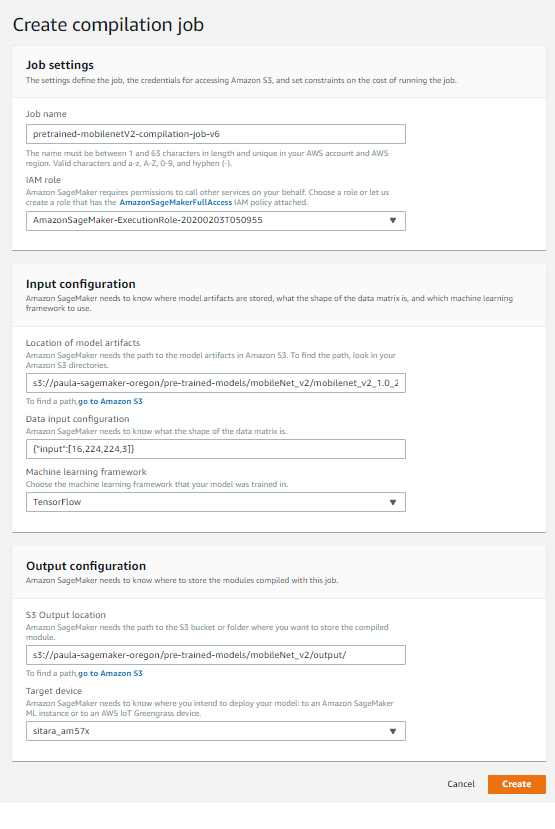
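
The import command prints everything to the terminal. To keep a copy of the output for comparing runs, the invocation can be wrapped in Python. This is a minimal sketch with a hypothetical `run_import` helper. It merges stderr into stdout, roughly what `tool config > log.txt 2>&1` does in a shell. Because the import log refers to `./tempDir`, a path relative to the working directory, the optional `cwd` argument controls where such files end up.

```python
import subprocess
from pathlib import Path
from typing import Optional


def run_import(tool: str, config: str, log_path: str,
               cwd: Optional[str] = None) -> int:
    """Run `tool config`, save its combined output to log_path, return exit code."""
    result = subprocess.run(
        [tool, config],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # interleave errors with normal output
        text=True,
        errors="replace",          # tolerate non-UTF-8 bytes in tool output
        cwd=cwd,
        check=False,               # report the exit code instead of raising
    )
    Path(log_path).write_text(result.stdout)
    return result.returncode
```

Passing the `tidl_model_import.out` path and `model_config.txt` from the command above, with `log_path="log.txt"`, would leave the full output in `log.txt`.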

My configuration file contains the following:

    randParams = 0
    modelType = 1
    quantizationStyle = 1
    quantRoundAdd = 50
    numParamBits = 8
    inputNetFile = "/home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/model.tflite.pb"
    inputParamsFile = "NA"
    outputNetFile = "/home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/model_Net.bin"
    outputParamsFile = "/home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/model_params.bin"
    sampleInData = "/home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/img_0.png"
    tidlStatsTool = "/home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/linux-devkit/sysroots/x86_64-arago-linux/usr/bin/eve_test_dl_algo_ref.out"
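
The configuration above can also be generated and sanity-checked from Python. This is a minimal sketch, assuming the layout has one `key = value` pair per line, with string values double-quoted and integers bare. Treating `#` lines as comments is also an assumption. The helpers `format_tidl_config`, `parse_tidl_config` and `missing_files` are hypothetical, and they only check syntax and file existence, not whether the tool accepts the values.

```python
import os
import re
from typing import Dict, Iterable, List, Union

Value = Union[int, str]

# One `key = value` pair per line; string values are double-quoted.
_LINE = re.compile(r'^\s*(\w+)\s*=\s*(.+?)\s*$')


def format_tidl_config(params: Dict[str, Value]) -> str:
    """Render parameters one per line: strings quoted, integers bare."""
    lines = []
    for key, value in params.items():
        rendered = f'"{value}"' if isinstance(value, str) else str(value)
        lines.append(f"{key} = {rendered}")
    return "\n".join(lines) + "\n"


def parse_tidl_config(text: str) -> Dict[str, Value]:
    """Parse the format produced by format_tidl_config.

    Blank lines and lines starting with '#' are skipped (an assumption
    about comment syntax). Unquoted non-integer values stay strings.
    """
    params: Dict[str, Value] = {}
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue
        match = _LINE.match(line)
        if match is None:
            raise ValueError(f"cannot parse config line: {line!r}")
        key, raw = match.groups()
        if len(raw) >= 2 and raw[0] == raw[-1] == '"':
            params[key] = raw[1:-1]
        else:
            try:
                params[key] = int(raw)
            except ValueError:
                params[key] = raw
    return params


def missing_files(params: Dict[str, Value],
                  keys: Iterable[str] = ("inputNetFile", "sampleInData",
                                         "tidlStatsTool")) -> List[str]:
    """Return the keys whose file path does not exist ("NA" is ignored)."""
    return [k for k in keys
            if k in params and params[k] != "NA"
            and not os.path.exists(str(params[k]))]
```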

I get the following output from the import tool:

    alex@alex-lapamatop:~/ti-processor-sdk-linux-am57xx-evm-06.03.00.106$ ./convert.sh
    ===============================
    TIDL import - parsing
    ===============================
    TF Model (Proto) File : /home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/model.tflite.pb
    TIDL Network File : /home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/model_Net.bin
    TIDL Params File : /home/alex/ti-processor-sdk-linux-am57xx-evm-06.03.00.106/model_params.bin
    ERROR: Reading binary proto file
    Num of Layer Detected : 0
    ------------------------------------------------------------------------------------------------------------
    Num|TIDL Layer Name |Out Data Name |Group |#Ins |#Outs |Inbuf Ids |Outbuf Id |In NCHW |Out NCHW |MACS |
    ------------------------------------------------------------------------------------------------------------
    ------------------------------------------------------------------------------------------------------------
    Total Giga Macs : 0.0000
    ------------------------------------------------------------------------------------------------------------
    ===============================
    TIDL import - calibration
    ===============================
    Processing config file ./tempDir/qunat_stats_config.txt !
    Running TIDL simulation for calibration.
    Processing Frame Number : 0
    End of config list found !
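
When comparing import runs, the most informative lines of this log are the `ERROR` line, `Num of Layer Detected` and `Total Giga Macs`. This is a minimal sketch that extracts them, assuming each message appears on its own line in the raw tool output. The names `ImportSummary` and `summarize_import_log` are hypothetical, and the `parsed_ok` rule (no errors and at least one layer) is a heuristic, not a criterion defined by TIDL.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Patterns for lines that appear in the TIDL import log in this post.
_LAYERS = re.compile(r"Num of Layer Detected\s*:\s*(\d+)")
_MACS = re.compile(r"Total Giga Macs\s*:\s*([0-9.]+)")


@dataclass
class ImportSummary:
    layers: Optional[int] = None       # value of "Num of Layer Detected"
    giga_macs: Optional[float] = None  # value of "Total Giga Macs"
    errors: List[str] = field(default_factory=list)

    @property
    def parsed_ok(self) -> bool:
        """Heuristic: no ERROR lines and at least one layer detected."""
        return not self.errors and bool(self.layers)


def summarize_import_log(log: str) -> ImportSummary:
    """Collect the layer count, MAC total and ERROR lines from a log."""
    summary = ImportSummary()
    for line in log.splitlines():
        layers = _LAYERS.search(line)
        if layers:
            summary.layers = int(layers.group(1))
        macs = _MACS.search(line)
        if macs:
            summary.giga_macs = float(macs.group(1))
        if line.lstrip().startswith("ERROR"):
            summary.errors.append(line.strip())
    return summary
```

Applied to the output shown here, this reports zero layers and one error, `ERROR: Reading binary proto file`.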

Is anyone able to tell me where I have gone wrong?

Thanks