SDK 8.6

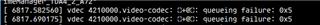

I used an NV12 image filled with random values for encoding, and found that Wave5 reports an error.

What does this 0x05 mean?

Thank you for your answer, I have a few questions to ask.

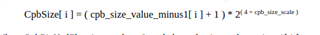

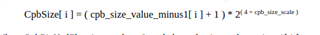

Is the MaxCpbSize formula the one in the picture below?

I did not find a method to set or read cpb_size_value_minus1 in the wave5 driver. Can you tell me the specific MaxCpbSize formula and how to calculate it?

If the allocated imagesize is less than MaxCpbSize, it is possible that the output size is larger than imagesize. Am I understanding this correctly?

Hello,

As I mentioned in the previous response, I do not recommend that you implement this algorithm. According to CnM, it will drastically increase memory consumption, which is the opposite of what you want at this point. There is no support or function reference for it in the CnM Wave5 driver because of how much it would increase memory consumption.

Could you clarify what you mean by output size here? For an encoder, "output" tends to refer to the raw frames and "capture" to the compressed buffers. The plane[0].sizeimage that we have been discussing refers to the size of the CPB. With these terms clarified, the answer to your question is that the output size (raw frames) should be bigger than the sizeimage. I assume that with the MaxCpb algorithm and an image quality setting, the allocated buffers could be bigger than the output size, but I don't think that is likely.

Sorry, my wording may not have been rigorous. Let me rephrase.

If the capture sizeimage is smaller than MaxDpbSize, the H.265 frame produced by the encoder may be larger than the capture sizeimage. Is my understanding correct?

If this happens, can I just discard the frame? How do I do it?

Is MaxDpbSize a typo? Is this supposed to be MaxCpbSize?

I believe the situation you are looking at is this bug: the size the driver sets for the capture sizeimage (remember, capture refers to encoded buffers here) is actually smaller than what the encoder wants. The encoder is trying to write the data, but the buffer it is given is too small.

I'm sure a check could be added in the irq processing where the size returned from the encoder is compared against the allocated size. But why would you want to drop a frame in this case? With the current driver, that could happen frequently.

I also wanted to update you on progress with CnM. I had another meeting with them yesterday, and the conclusion regarding fixing this in the driver is that it seems unlikely it can be done. As I have highlighted, there is no guarantee that any of the encode parameters besides the resolution and framerate are known before buffer allocation is done. We both recommend that this change be made on the host side and not in the driver. Since you are using your own application and have access to all encode parameters, you can calculate the size before the TRY_FMT/S_FMT ioctls are called and tell the driver which size to use by removing the width*height*3/8 calculation and just using the size set by the host (which is your own application).
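A minimal sketch of the selection logic described above, written in Python for brevity rather than as driver code. The function name `capture_sizeimage` and the `host_sizeimage` parameter are hypothetical; only the width*height*3/8 fallback comes from this thread, and 1024x640 is used purely as an example resolution.

```python
def capture_sizeimage(width, height, host_sizeimage=0):
    """Pick the compressed (capture) buffer size in bytes.

    Illustration only: prefer a size supplied by the host application,
    and fall back to the width*height*3/8 heuristic when none is given.
    """
    if host_sizeimage > 0:
        return host_sizeimage
    return width * height * 3 // 8


# Example resolution, chosen only for illustration.
fallback_size = capture_sizeimage(1024, 640)
host_size = capture_sizeimage(1024, 640, host_sizeimage=1_000_000)
print(fallback_size, host_size)
```

The point of the design is that the host, which knows bitrate, quality, and content characteristics, owns the decision, while the heuristic remains only as a default.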

Thanks. There is another aspect to the current problem: after it occurs, other images queued to the driver no longer trigger an irq. We can accept discarding the failing frame as long as other images can still be encoded normally. Is there a way to fix this?

The TRY_FMT/S_FMT ioctls can be called to set the size, but my understanding is that the buf size is allocated before encoding and cannot change dynamically during the encoding process. Is this understanding correct?

You are correct that there is currently no mechanism to dynamically change the buffer size during the actual encoding process. The driver expects that a large enough buffer has already been provided.

As for the first question regarding triggering IRQs, first I want to clarify on how you are dropping the frames. Are you making sure that when the frame is dropped, that you are clearing the interrupt flag? Are other frames successfully being scheduled after you drop a frame?

Putting in images and getting frames happen asynchronously, in two separate threads. After a problem occurs, I continue to put in images until there is no free buf; in other words, a few free bufs can still be queued after the problem occurs. I added prints to the driver and found that once the problem mentioned above occurs, subsequently queued images no longer trigger an irq. I'm not sure why the irq is not triggered.

Did you go into the irq function and make sure to clear the flag after you make the decision to drop a picture?

Sorry, because this question is quite old, there is something wrong with my description. Let me describe it again.

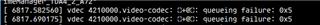

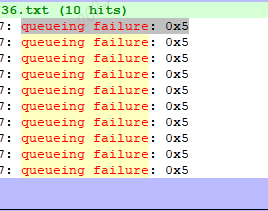

Once a "queueing failure: 0x5" error occurs, the same "queueing failure: 0x5" error also occurs when subsequent frames are queued, as the log shows.

Why do subsequent frames also have problems after "queueing failure: 0x5" appears? How do I abandon the frame with the error and allow subsequent frames to continue encoding?

It's important to note that this queuing failure does not come from just queuing frames. I mentioned this earlier in the thread. The queuing error comes from commands being written to the command queue, and there are a couple of places where this occurs. The fact that queuing and removing buffers from the encoder are not synchronous suggests to me that you may be queuing too fast in the first place.

Can this same behavior be seen in situations that don't involve the compressed buffer size problem we have been discussing?

Thanks for your answer, let me explain.

This queue full problem is caused by the previous buffer size problem. Because the larger frame cannot be encoded and is stuck, subsequent frames cannot be encoded.

In the absence of buffer size issues, this queue full problem does not occur.

Thank you for elaboration. I understand the problem now. If you look through driver code, every time you start encoding a frame, the driver will call wave5_bit_issue_command(inst, W5_ENC_PIC). This call is what I am referring to when I mention the command queue. The internal firmware operates off of servicing jobs placed in the command queue. My assumption at this point is when this buffer size issue occurs, the firmware is hanging and jobs are being stuck in the command queue. Our driver keeps the depth of that queue quite small as the goal is to keep memory consumption as low as possible. When you are submitting new jobs, they are failing because the old ones with the buffer size negotiation problem are not able to be serviced meaning they are still in the queue.
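The assumed failure mode above can be illustrated with a toy model. This is only a sketch of a small fixed-depth queue, not the Wave5 driver or firmware: the class `ToyCommandQueue`, its methods, and the depth of 2 are all hypothetical and chosen for illustration.

```python
from collections import deque


class ToyCommandQueue:
    """Toy model of a small, fixed-depth command queue (not driver code)."""

    def __init__(self, depth):
        self.depth = depth
        self.jobs = deque()

    def submit(self, job):
        """Return True if the job was queued, False if the queue is full."""
        if len(self.jobs) >= self.depth:
            return False
        self.jobs.append(job)
        return True

    def service(self, firmware_hung=False):
        """Complete the oldest job, unless the firmware is hung."""
        if firmware_hung or not self.jobs:
            return None
        return self.jobs.popleft()


queue = ToyCommandQueue(depth=2)  # depth of 2 is an arbitrary assumption
results = []
results.append(queue.submit("frame0"))  # queued
queue.service()                         # frame0 completes normally
results.append(queue.submit("frame1"))  # queued; assume the firmware hangs on it
queue.service(firmware_hung=True)       # nothing completes
results.append(queue.submit("frame2"))  # queued behind the stuck job
results.append(queue.submit("frame3"))  # rejected: queue is full
queue.service(firmware_hung=True)       # still nothing completes
results.append(queue.submit("frame4"))  # rejected again
print(results)
```

In this model every submission after the queue fills is rejected until the stuck job is removed, which matches the repeated failures reported for subsequent frames.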

In any case, there should be a mechanism in place that clears the queue if this issue occurs so that more data can be placed in it. I am not aware of any such function, but I will share this behavior with CnM and we will let you know whether the user can clear this queue or whether it is a change that needs to be made in firmware. I do not have any access to or control over the firmware, so this is something I will have to consult the CnM team about.

Based on initial response from CnM, they share my same idea. They have also shared that clearing the internal queue is not simple because it requires the device to be in a stable status which is not the case when this error occurs. Their plan at this point in time is to find a way to make sure the queue will not be full to stop an unexpected status. They have said this is not a simple problem to solve but they are working towards a solution. Again, I do feel as though this will more than likely be a fix in firmware. If that is the case, I will share it with you once it becomes available to me.

No fixes available at this time. I will update this post once I have something to share.

There is no timeline in place at this time. I have asked chip manufacturer and I will provide an approximate date when I have one.

Hello,

I appreciate your patience as discussions with the chip manufacturer have been ongoing. Unfortunately, they have shared with me the conclusion they reached with their R&D department: the Wave5 operates under the assumption that the encoder buffer size is going to be big enough at all times. The hardware and firmware do not have any mechanism to avoid this assumption or to handle the case in which the buffer is too small for the encoder to write to.

As we have discussed extensively in this thread, it is also quite difficult to assume what the size of the buffer needs to be before encoding in the driver context given that the driver does not have all properties necessary to make that accurate approximation. The only viable solution is you, on the host side, determine for yourself the buffer size you need to set that limits memory consumption, but still offers enough size so that the encoder does not hang.

Thanks,

Brandon

Thank you for your answer. But I'm not sure what buffer size is needed to satisfy that. Can you provide a way to calculate the maximum value of imagesize?

Hello,

There is no function that we have to accurately calculate the image size in the driver. It is not a function we plan to implement either, because at this time there is not a safe method to do so since we do not have all of the parameters at time of buffer allocation. If the method that the driver uses, allocating either 2MB or using the width*height*3/8, does not work for your use case, then you must select your own buffer size that works. Earlier in this thread, you mentioned that you found the buffer size that worked and was less than the 2MB, so you saved memory in your use case.

Best,

Brandon

I'm not sure if there is a specific formula to calculate the maximum size. For example, I saw this formula in the manual.

I would like to know if there is such a formula and how it is calculated?

Could you share which manual this formula was found in? Was this in manufacturer data sheet that was shared with you?

I found it in the H.265 standard (T-REC-H.265-202108-S!!PDF-E.pdf).

This is not a function that TI has or plans to implement in the driver. I am asking chip manufacturer now if there is any such implementation in their reference SW.

Also, the manufacturer is still working towards a viable solution to address this issue. We apologize that it has taken this long to address. It becomes quite complicated given how v4l2 works and the constraints of the Wave5 hardware itself. It will take more time, but there will be a way for Wave5 to handle this case gracefully in the future.

Best,

Brandon

Thanks for your hard work. If this problem is not easy to solve, I would still like to know what size I should set the imagesize to in order to ensure that this problem does not occur. In other words, what is the maximum value that imagesize should be set to?

Do you still see this issue even when the imagesize is set to 2MB? With this value, I have not seen any of the driver/device hang ups that you are seeing. This is why this value was selected for default in gstreamer. You should be able to go up to 4K resolution and not notice any issues.

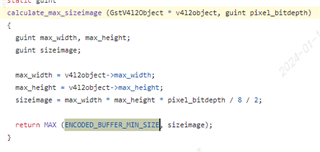

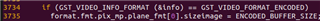

I would like to ask where this 2MB data is. I just checked the gstreamer code and found its size as shown below.

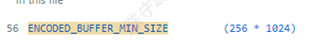

2MB

Minimum allocation of 256k

I have modified the code according to this algorithm before, and the test found that the same problem still occurs.

(gstreamer/subprojects/gst-plugins-good/sys/v4l2/gstv4l2object.c)

That macro is found to be:

This is the 2MB default that should be set in gstreamer. I could not find the function you pasted anywhere in the gstreamer code. Which version were you looking at? We currently ship v1.20.7 in our SDK. The previous version was 1.16, and I do not see that function in there either.

I found it from github. I looked at the commit record. A few versions ago, gstreamer did use 2M, but the latest version has modified it.

I just tested using 2M and found that it cannot meet our project needs due to cma memory limitations.

I understand. Our SDK does not use the latest versions of gstreamer. The version of gstreamer that gets shipped in our SDKs is decided by the version of yocto being used. 8.x used dunfell and 9.x is currently using kirkstone. Therefore, our SDK is using the 2M default.

As I've said, if the 2M does not meet your custom needs, you will need to find the value that does. Recently you have asked for a formula to calculate the max imagesize, but from discussions with the manufacturer and from looking through the H.265 spec, I do not think this will help reduce memory consumption at all.

This is taken from the h265 specification. The MaxCpb size is dependent on profile and level. Codec expert shared with me an example:

"In case of level 5, high tier stream, MaxCPB size = 100,000 CpbNalfactor bits = 100,000x1100 bits =: 13M"

I do not think using these algorithms will help you save memory.
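The arithmetic in the quoted example can be checked with a short sketch. The two constants are the values quoted above for level 5, high tier; the function name `max_cpb_size` is hypothetical, and treating "2M" as 2 * 1024 * 1024 bytes is an assumption.

```python
# Values quoted in this thread for H.265 level 5, high tier.
MAX_CPB_UNITS = 100_000   # MaxCPB, expressed in units of CpbNalFactor bits
CPB_NAL_FACTOR = 1100     # bits per unit

# Assumption: the "2M" default means 2 * 1024 * 1024 bytes.
DEFAULT_2M_BYTES = 2 * 1024 * 1024


def max_cpb_size(max_cpb_units, factor):
    """Return the CPB bound as (bits, bytes)."""
    bits = max_cpb_units * factor
    return bits, bits // 8


cpb_bits, cpb_bytes = max_cpb_size(MAX_CPB_UNITS, CPB_NAL_FACTOR)
ratio_to_default = cpb_bytes / DEFAULT_2M_BYTES
print(cpb_bits, cpb_bytes, round(ratio_to_default, 2))
```

Under these assumptions the bound is 110,000,000 bits, or 13,750,000 bytes, which is several times larger than the 2M default rather than smaller.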

Thank you for your answer

Can I understand that in the case of a level 5, high tier stream, the buf size should be allocated at about 13 MB (100,000 x 1100 bits)? If it is smaller than this value, there may be problems, but the probability is very small?

If not, I would like to know how the maximum imagesize should be calculated. If I know the algorithm, I will try to see if I can optimize some values from the project perspective.

I believe your understanding is correct.

There is no method that TI or chip manufacturer has to calculate the maximum imagesize. The gstreamer default that ships with the SDK is sufficient so there has been no need to develop such a function in the driver or userspace. If this value does not meet your use case, then you must find one that does. You shared this information with me a couple months ago:

"The problem is this, I am using a 1024*640 image (later found out that the frame size generated by encoding is 778635), which is much larger than the sizeimage=height*width/8*3 in the encoding driver or even higher than the height and width."

It seems you have already determined the size of buffer that you need for your use case and it is less than that of the 2M that is set by default. Why can you not use this value? Or if factors have changed, perform similar tests to determine the value that works for your case. I do not know all the limitations of your use case and, as discussed earlier, the v4l2 driver cannot make any calculations to accurately predict what the buffer size needs to be because all of the information required to make such a prediction is not guaranteed to be exposed to the driver.
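One way to turn such test measurements into a buffer size is sketched below. This is an illustration, not a TI or CnM method: the function name `pick_sizeimage`, the 25% headroom, and the 4096-byte alignment are all assumptions, and the only measured value used is the 778635-byte frame quoted above.

```python
def pick_sizeimage(measured_sizes, headroom_percent=25, align=4096):
    """Choose a capture buffer size from measured encoded frame sizes.

    Takes the largest observed frame, adds a safety margin, and rounds
    up to a multiple of `align`. Margin and alignment are assumptions.
    """
    worst = max(measured_sizes)
    # Integer ceiling of worst * (100 + headroom_percent) / 100.
    with_margin = (worst * (100 + headroom_percent) + 99) // 100
    return (with_margin + align - 1) // align * align


# The 1024*640 frame size reported earlier in this thread.
chosen = pick_sizeimage([778_635])
print(chosen)
```

A measurement-based value like this gives no guarantee: a frame larger than anything seen in testing would still overflow the buffer, so the margin should reflect how representative the test content is.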

It is okay to use 2M, but in our project scenario, 2M will cause insufficient memory. I now change the sizeimage to height*width instead of height*width/8*3, and test again to see if there is any problem.
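As a quick check of the candidate sizes against the one frame size reported in this thread, the comparison can be sketched as follows. The helper name `fits` is hypothetical, and treating 2M as 2 * 1024 * 1024 bytes is an assumption.

```python
WIDTH, HEIGHT = 1024, 640
OBSERVED_FRAME = 778_635  # encoded frame size reported in this thread

candidates = {
    "width*height*3/8": WIDTH * HEIGHT * 3 // 8,
    "width*height": WIDTH * HEIGHT,
    "2M (assumed 2*1024*1024)": 2 * 1024 * 1024,
}


def fits(buffer_size, frame_size=OBSERVED_FRAME):
    """True if a frame of frame_size bytes fits in buffer_size bytes."""
    return frame_size <= buffer_size


for name, size in candidates.items():
    print(name, size, fits(size))
```

For this particular frame, width*height (655360 bytes) is still smaller than the 778635 bytes observed, so random-content input like the one that produced it could overflow that size as well.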

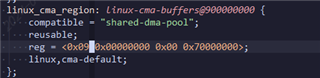

I found the starting address of cma in sdk 8.6 is 0x0900000000 and the size is 0x70000000

Yes, this is what the CMA region was forced to when 48-bit addressing support was added to the driver. There is a hardware limitation that doesn't let the IP's memory accesses switch between the lower 4GB and the upper range. Therefore, the region has been hardcoded to the upper range, since we allocate such a large size to meet device capabilities.

If you keep this size in your project scenario, then there should be no issue with insufficient memory.
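For reference, the numbers quoted above work out as in the sketch below. The function name `cma_summary` is hypothetical, the 2M buffer size is assumed to be 2 * 1024 * 1024 bytes, and the buffer count ignores every other CMA user, so it is an upper bound only.

```python
CMA_START = 0x9_0000_0000  # start address quoted for SDK 8.6
CMA_SIZE = 0x7000_0000     # size quoted for SDK 8.6
FOUR_GIB = 1 << 32


def cma_summary(start, size, buf_size=2 * 1024 * 1024):
    """Summarize a CMA region; buf_size is an assumed 2M capture buffer."""
    end = start + size
    return {
        "size_mib": size // (1024 * 1024),
        "end": end,
        "above_4gib": start >= FOUR_GIB,
        # Upper bound only: other CMA users are not accounted for.
        "max_2m_buffers": size // buf_size,
    }


summary = cma_summary(CMA_START, CMA_SIZE)
print(summary["size_mib"], hex(summary["end"]),
      summary["above_4gib"], summary["max_2m_buffers"])
```

The region is 1792 MiB and sits entirely above the 4GB boundary, which is consistent with the hardware limitation described above.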