SDK 8.6

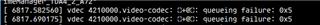

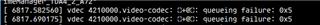

I used an NV12 image filled with random values as encoder input, and I found that wave5 reports an error.

What does this 0x05 mean?


Could you elaborate on what you mean by random values?

Is this in Linux or some other high-level OS? Can you share the video you are encoding so I can reproduce the issue? Are you using GStreamer or some other application?

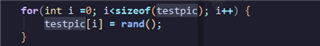

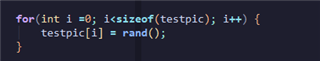

The random values are generated with rand().

The program is my own V4L2 application, not GStreamer. It works normally with ordinary images.

Enguo,

I am not sure what exactly that error means. I also am not sure I fully understand what you are trying to do.

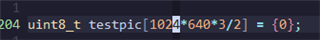

Is testpic a single picture array that you are filling with random values? You say this is an NV12 image, but how does this method separate the luma and chroma components of that format? It looks like a one-dimensional array rather than a two-dimensional one, which I believe would be the proper way to write a random image: fill each of the width x height x 1.5 entries with a valid value within the NV12 range.

If it can be used with normal images as you say, then I believe the issue would have to be how you are generating this random image, and not how the encoder is being queued.

Best,

Brandon

The length of testpic is width x height x 1.5 bytes. It is defined like this:

The logic is roughly as follows:

This generates an image consisting of random values, with g_width = 1024 and g_height = 640.

The image is then copied into v4l2_buf for encoding.
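For reference, the NV12 layout can be sketched in C as below. This is a minimal illustration under stated assumptions, not the code from the screenshots, and nv12_frame_size() and fill_nv12_random() are hypothetical names. It shows that a width x height x 1.5 buffer already holds the full-resolution Y plane followed by the interleaved CbCr plane, so a one-dimensional buffer is laid out exactly as NV12 expects; the only question is which values go into each part.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Size in bytes of one 8-bit NV12 frame: a full-resolution Y plane
 * followed by one interleaved CbCr plane at half resolution in both
 * directions (width * height / 2 bytes). */
static size_t nv12_frame_size(size_t width, size_t height)
{
    return width * height * 3 / 2;
}

/* Fill an NV12 frame with pseudo-random bytes.
 * Assumes width and height are even and buf holds
 * nv12_frame_size(width, height) bytes. */
static void fill_nv12_random(uint8_t *buf, size_t width, size_t height,
                             unsigned int seed)
{
    uint8_t *y_plane = buf;                   /* width * height bytes */
    uint8_t *uv_plane = buf + width * height; /* Cb,Cr,Cb,Cr,... pairs */

    srand(seed);
    for (size_t i = 0; i < width * height; i++)
        y_plane[i] = (uint8_t)(rand() & 0xFF);
    for (size_t i = 0; i < width * height / 2; i++)
        uv_plane[i] = (uint8_t)(rand() & 0xFF);
}
```

For 1024x640, nv12_frame_size() gives 983040 bytes, the same value used as blocksize in the GStreamer pipeline later in this thread.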

If I copy a normal image into testpic instead of using random numbers, encoding works without errors.

So I think the random-value image is causing something to go wrong in the encoder, but I don't know what this error message means.

I wanted to test how the bitrate changes when the image changes drastically, so I used random values for testing, and that is when I ran into this problem.

Hi Enguo,

We are working to get an answer to your query. Thank you for your patience.

Thanks,

Sarabesh S

Hi Enguo,

Our expert is currently OOO so please expect a delay in the answer.

Thank you,

Sarabesh S

Hi Enguo,

Apologies for the delay; I was out all last week.

As I mentioned in my response above, can you try this with a two-dimensional array rather than a one-dimensional array? I'm not sure what that error code maps to; I do not have all the mappings at my disposal.

Please try making testpic a two-dimensional array with your random-value method, and make sure the values are in the range 16-235 as well. I believe this is the range of valid values in NV12.

Thanks,

Brandon

I think a two-dimensional array and a one-dimensional array occupy the same memory, but I can give it a try.

Why is there a range limit of 16-235? I don’t quite understand this. Can you please explain it?

I got those numbers from the NV12 spec. Each component is given 8 bits, so you would expect the range to be 0-255. However, from what I have read, this is not actually the case.

I looked into it and found that the 16-235 range comes from BT.601-5 and BT.709-5, the specifications for RGB/YUV conversion.
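If you want random values restricted to the nominal 8-bit limited range, here is a minimal sketch. Note that in BT.601/BT.709 the limited range is 16-235 for luma (Y) but 16-240 for chroma (Cb/Cr). The helper names are hypothetical, and rand() % n is slightly biased, which does not matter for a stress-test image.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Nominal 8-bit limited ("studio") ranges per BT.601 / BT.709. */
#define LIMITED_Y_MIN 16
#define LIMITED_Y_MAX 235
#define LIMITED_C_MIN 16
#define LIMITED_C_MAX 240

/* Pseudo-random value in [lo, hi] (inclusive). */
static uint8_t rand_in_range(int lo, int hi)
{
    return (uint8_t)(lo + rand() % (hi - lo + 1));
}

/* Fill an NV12 frame (Y plane, then interleaved CbCr plane) with random
 * limited-range values. Assumes buf holds width * height * 3 / 2 bytes. */
static void fill_nv12_limited_range(uint8_t *buf, size_t width, size_t height)
{
    size_t luma = width * height;

    for (size_t i = 0; i < luma; i++)
        buf[i] = rand_in_range(LIMITED_Y_MIN, LIMITED_Y_MAX);
    for (size_t i = luma; i < luma + luma / 2; i++)
        buf[i] = rand_in_range(LIMITED_C_MIN, LIMITED_C_MAX);
}
```

As reported in the next reply, restricting the values this way did not make the encoder error go away, so this only rules the value range out as the cause.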

I also tried an image with values limited to 16-235, and the problem persists.

I saved the 1024*640 image. Can you test it on your side?

However, I cannot upload image files to E2E. Is there another way to share them?

I uploaded it to the link below

https://tidrive.ext.ti.com/u/zs0vdnXNLx_czS9-/be05e7f1-198f-48ce-9b1f-20e46e62352f?l

access code: redj282,

Thank you for sharing that Enguo. Are you only testing a simple encode? Any other parameters or features being enabled that I should be aware of?

I just tried it with the following command and got the same error.

gst-launch-1.0 filesrc location=./testpic ! rawvideoparse width=1024 height=640 format=nv12 framerate=30/1 ! v4l2h265enc ! filesink location=./video sync=true

So you can also use GStreamer to debug it.

I am not able to reproduce the queue errors on my side; however, I do see the device hang. Again, I'm not sure how the encoder is supposed to behave in a single-frame case with a randomly generated picture. I also see issues when encoding a single frame by itself that does not use random values. I will be discussing this with CnM and will ask what these error values mean so I can help you.

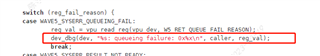

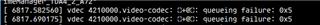

Didn't you get a queue error? This is the log output:

Is it possible that the error did occur on your side but was not printed, because it is logged with dev_dbg?

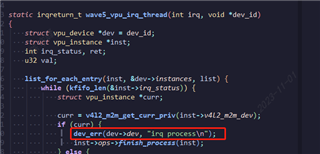

I am using SDK 8.6, and the EVM prints this when running the command. Note that I made a small modification, changing dev_dbg to dev_err.

I changed the log call to pr_info instead, so if that path is hit, I would see it. But I am not getting any log output when encoding this single random frame. The pipeline I am using is:

gst-launch-1.0 filesrc location=testpic blocksize=983040 ! video/x-raw,width=1024,height=640,framerate=30/1,interlace-mode=progressive,colorimetry=bt601,format=NV12 ! v4l2h265enc ! fakesink

The pipeline you shared with me was not working. I have been using the 9.0 SDK because the driver is very similar; the most significant difference is in user space, particularly the GStreamer version. Please give me time to work with CnM (the wave5 manufacturer), and I will get back to you with further steps.

Apologies for not updating this ticket. I have been discussing with them the single-frame encoding bug that this case exposed.

Even without your random-value image, if I take a single frame from an H.264 file I have, it decodes successfully into a raw YUV frame, but encoding that single frame hangs. This bug is still open, and we are working toward a solution.

Do you mean that encoding a single frame causes a hang, regardless of what the picture in that frame looks like?

By the way, could you ask what error code 0x05 means? Once I know, I can try to solve it myself.

Enguo,

I just heard back from CnM. This error means the command queue is full. Wave5 has an internal command queue, and the default depth of this queue in our driver is 2. It can be increased, but that increases memory consumption. If you send frames faster than the encoder can process them, the device will return this error.

In that case, you need to wait and send the command again once a slot is free.
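The behavior CnM describes can be modeled with a small fixed-depth queue. This is a hypothetical sketch only: struct cmd_queue, cmd_queue_submit(), wait_for_one_completion() and submit_with_retry() are illustrative names, not the wave5 driver API, and the "wait" is simulated. It shows retrying after a completion frees a slot, and why retrying cannot help if a command never completes (no interrupt arrives), so the retries are bounded instead of looping forever.

```c
#include <stdbool.h>

/* Hypothetical model of a fixed-depth command queue (not the wave5 API). */
#define CMD_QUEUE_DEPTH 2     /* default depth quoted above */
#define RET_OK          0
#define RET_QUEUE_FULL  0x05  /* "command queue full", per CnM */

struct cmd_queue {
    int pending;      /* commands submitted but not yet completed */
    bool hw_stalled;  /* models a command whose completion interrupt never fires */
};

static int cmd_queue_submit(struct cmd_queue *q)
{
    if (q->pending >= CMD_QUEUE_DEPTH)
        return RET_QUEUE_FULL;
    q->pending++;
    return RET_OK;
}

/* Stand-in for "sleep until the next completion interrupt": frees one slot
 * unless the hardware is stalled or nothing is pending. */
static bool wait_for_one_completion(struct cmd_queue *q)
{
    if (q->hw_stalled || q->pending == 0)
        return false;
    q->pending--;
    return true;
}

/* Submit, and on RET_QUEUE_FULL wait for a completion and try again,
 * at most max_retries times. */
static int submit_with_retry(struct cmd_queue *q, int max_retries)
{
    for (int attempt = 0; attempt <= max_retries; attempt++) {
        int ret = cmd_queue_submit(q);
        if (ret != RET_QUEUE_FULL)
            return ret;
        if (!wait_for_one_completion(q))
            break; /* nothing completed: retrying would never succeed */
    }
    return RET_QUEUE_FULL;
}
```

With a working device, the third submission succeeds after one completion frees a slot; with a stalled device, the full queue is reported instead of hanging.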

Thanks, I'll try to modify it

But I only input one frame. I don’t understand why the command queue is full.

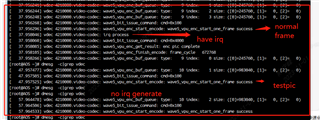

I found the cause of the queueing failure (error 0x05):

When I encode testpic (the random-value picture), no interrupt is triggered. When I then input further frames, the queue becomes full.

I added a print in the interrupt handler.

The log above shows that after testpic is input, no interrupt is generated.

To make testing easier, I modified the test file; the error occurs on the sixth frame. You can try it on your side as well.

gst-launch-1.0 filesrc location=./testyuv ! rawvideoparse width=1024 height=640 format=nv12 framerate=30/1 ! v4l2h265enc ! filesink location=./video sync=true

The file name is testyuv.

https://tidrive.ext.ti.com/u/zs0vdnXNLx_czS9-/be05e7f1-198f-48ce-9b1f-20e46e62352f?l

access code: redj282,

I found that the problem does not occur with the SDK 8.6 wave5 driver, but does occur with the SDK 9.0 wave5 driver.

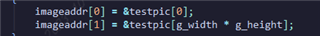

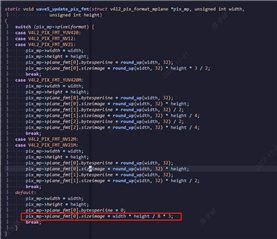

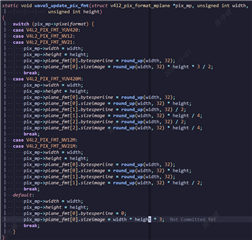

I seem to have found the problem. I put the

Change to

It ran successfully

I also found that this frame is 774169 bytes (about 756 KB). If you run it with GStreamer, the frame will be smaller than mine, because I set the bitrate explicitly, which makes my frame larger than the one produced by the GStreamer command.

But what value should sizeimage be set to?

I am not sure the driver is what differs between the two SDK versions here; the driver is very similar in 8.6 and 9.0. The difference is more likely the GStreamer version, since GStreamer's core architecture changed drastically.

I recently sent in this patch to our kernel: https://git.ti.com/cgit/ti-linux-kernel/ti-linux-kernel/commit/?h=ti-linux-6.1.y-cicd&id=12598d7a3ca52f5166b67cc714329029b4502543.

Could you apply it to your tree and see if it fixes the issue here?

Thanks,

Brandon

I understand that this patch allows sizeimage to be set externally. But I want to know what value sizeimage should be set to. Is there any relevant standard?

I am not sure there is a standard for this. Since you are writing your own application, I would recommend looking through the GStreamer layer to see what size it requests. Our driver either uses its default size calculation or whatever GStreamer requests it to allocate.

I found that the sizeimage set by GStreamer in SDK 8.6 is 2M, which may cause problems with large images. Could you ask what the safest sizeimage value would be?

Or are there any image-quality settings that would ensure the image size does not exceed the current value?

Hello,

I am a bit confused by your questions. Could you clarify what you mean by "ensure that the image size is not larger than the current value"? What do you mean by current value? Does it refer to the GStreamer value or the driver value? Is the 2M requested on the output (raw stream) side or the capture (encoded stream) side?

What is your current issue? Not enough memory being allocated for the bitstream buffer?

The patch I have shared for image size comes directly from CnM. Either they use what gstreamer requests, or they calculate a value based on what they think it needs. However, gstreamer can sometimes request more because of other parameters that the CnM algorithm does not account for, hence why we take the max. I will ask CnM team if they have any other calculations for image size that do account for additional parameters.

Best,

Brandon

I tested and found that GStreamer sets a fixed size of 2M on the capture (encoded stream) side. This is no problem when the source is a small-resolution image, but a problem may occur with a large-resolution image.

The problem is this: I am encoding a 1024*640 image, and the encoded frame turned out to be 778635 bytes. That is much larger than the encoder driver's sizeimage = height*width/8*3, and even larger than height*width. This causes the encoder driver to get stuck: no interrupt occurs after subsequent images are input.

I tried making the encoded-stream buffer the same size as the input stream, height*width*3/2, and it worked fine. However, this results in a memory footprint too large for our product's requirements. So I would like to ask the following questions:

1. What should sizeimage be set to, to guarantee that the encoded stream never exceeds the buffer size?

2. What is the relationship between encoded stream size and image quality? Can this problem be solved by changing the quality settings? I don't know exactly what values to use.

3. Is there a way to prevent such large frames from being generated?

4. If this problem occurs, is there any way to recover afterwards? Once it happens, the interrupt is never triggered again.

Sorry, I accidentally wrote weight instead of width in the text above. should be width
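The sizes discussed above can be compared with a little arithmetic. This sketch takes the driver default as quoted in this thread (height*width/8*3) and the 2M GStreamer request as given; the helper names are hypothetical.

```c
#include <stddef.h>

/* Default capture (bitstream) buffer size as quoted in this thread:
 * height * width / 8 * 3 bytes. */
static size_t default_bitstream_size(size_t width, size_t height)
{
    return width * height / 8 * 3;
}

/* Raw NV12 input frame size: height * width * 3 / 2 bytes. */
static size_t raw_nv12_size(size_t width, size_t height)
{
    return width * height * 3 / 2;
}

/* Nonzero if an encoded frame of frame_bytes fits in a buffer of buf_bytes. */
static int frame_fits(size_t frame_bytes, size_t buf_bytes)
{
    return frame_bytes <= buf_bytes;
}
```

For 1024x640 the default is 245760 bytes, so the 778635-byte frame does not fit, while the raw-frame size (983040 bytes) and 2 MiB (2097152 bytes) both hold it.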

Hello,

Thank you for the clarification. Before I answer, I want to discuss this with the team at Chips-N-Media (CnM). Even in their upstream submission, they chose to set the capture (encoded data) sizeimage with the same expression as the original driver from previous versions. For the decoder output queue (incoming encoded data to be decoded), however, the driver takes the max of either that expression or whatever GStreamer requests. I want to report this to them and hear what they have to say, so we can come up with a more efficient algorithm and your memory usage doesn't have to grow so much.

I will also comment on the upstream submission regarding this. Please give me a couple of days to respond. I will open a ticket with them now and will have a meeting with them tomorrow, where I can get more details.

Thanks,

Brandon

Another question: will the size limits differ between CBR and VBR?

I found that in VBR mode, the encoded output size hardly ever exceeds height*width, but in CBR mode with a high bitrate, it can easily exceed height*width. Is there a way to limit the maximum frame size in CBR mode?

I will add this to the discussion points for the meeting. I am not too familiar with the relationship between resolution, rate control mode, and encoded bitstream size.

Could you share an example of this behavior with me?

To put it simply, bitrate is the amount of encoded data per second. Setting the bitrate in VBR mode has no effect; VBR prioritizes image quality, so the amount of encoded data output per second varies. In CBR mode, the data size follows the configured bitrate, so the encoded data per second is very likely close to a fixed value.

But this says nothing about the size of any single frame: assuming 30 frames per second, the bitrate only constrains the sum of the sizes of those 30 frames.
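The point can be made concrete: a bitrate fixes only the average frame size, bitrate / 8 / fps bytes. A minimal sketch with hypothetical helper names; the 8 Mbit/s figure in the note below is only an example value.

```c
#include <stdint.h>

/* Average encoded frame size (bytes) implied by a target bitrate.
 * Individual frames, especially I-frames, can be far above this. */
static uint64_t avg_frame_bytes(uint64_t bitrate_bps, uint32_t fps)
{
    return bitrate_bps / 8 / fps;
}

/* Bitrate (bits/s) that would result if every frame were frame_bytes long. */
static uint64_t bitrate_if_every_frame(uint64_t frame_bytes, uint32_t fps)
{
    return frame_bytes * 8 * fps;
}
```

At 8 Mbit/s and 30 fps the average is 33333 bytes per frame, while a stream in which every frame was as large as the 778635-byte frame above would need about 186.9 Mbit/s. Rate control spreads bits over many frames, so the average alone cannot bound a single frame.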

Enguo,

I have received a message that this issue has been raised to high priority. We are still working with CnM on a solution for proper negotiation of the compressed buffer size. There is not much of an update at this point. I have another meeting with them this evening, so I will ask for updates on this issue.

Best,

Brandon

Hi Enguo,

There has been an ongoing discussion between me and the engineers at Chips-N-Media (CnM) about the proper algorithm for sizeimage. The problem is that the V4L2 API makes this difficult: when the S_FMT ioctls are called on the output and capture queues, the driver is not yet aware of the encoding parameters being set for that particular stream context. I have now asked CnM whether wave5_update_pix_fmt (which sets sizeimage) can be called after all the s_ctrl calls are made, or after each s_ctrl call, since I am not sure V4L2 has any means of telling the driver which control is the last one.
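A hypothetical sketch of the "recompute after each s_ctrl" idea. struct enc_ctx, enc_s_ctrl(), the control IDs, and the bitrate-based rule with its IFRAME_HEADROOM factor are placeholders for illustration only, not wave5 code and not a CnM formula. In a real V4L2 driver, a recomputed value could only take effect before the capture buffers are allocated (VIDIOC_REQBUFS).

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical encoder context; field names are illustrative. */
struct enc_ctx {
    uint32_t width, height;
    uint32_t bitrate_bps; /* 0 = not set yet */
    uint32_t fps;
    size_t sizeimage;     /* capture (bitstream) buffer size */
};

/* Placeholder headroom for frames larger than the average (e.g. I-frames). */
#define IFRAME_HEADROOM 4

/* Placeholder rule: the larger of the resolution-based default quoted in
 * this thread and a bitrate-derived per-frame budget with headroom. */
static void update_bitstream_sizeimage(struct enc_ctx *ctx)
{
    size_t by_resolution = (size_t)ctx->width * ctx->height / 8 * 3;
    size_t by_bitrate = 0;

    if (ctx->bitrate_bps && ctx->fps)
        by_bitrate = (size_t)ctx->bitrate_bps / 8 / ctx->fps * IFRAME_HEADROOM;
    ctx->sizeimage = by_resolution > by_bitrate ? by_resolution : by_bitrate;
}

/* Hypothetical control IDs. */
enum enc_ctrl { CTRL_BITRATE, CTRL_FPS };

/* Recompute on every control, since the driver cannot tell which one is last. */
static void enc_s_ctrl(struct enc_ctx *ctx, enum enc_ctrl id, uint32_t val)
{
    switch (id) {
    case CTRL_BITRATE:
        ctx->bitrate_bps = val;
        break;
    case CTRL_FPS:
        ctx->fps = val;
        break;
    }
    update_bitstream_sizeimage(ctx);
}
```

Recomputing on every control costs almost nothing, and it avoids needing to know which control the application sets last.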

Another solution CnM has offered has been to just use the MaxCpbSize formula used in the codec specifications. However, this will allocate too much memory in most cases and would not fit your use case.

I do want to let you know I have recently ported the latest version of the upstream driver to match our kernel version (6.1). As mentioned earlier in this thread, I was seeing issues in general single frame encode cases using this pipeline:

gst-launch-1.0 filesrc location=testpic blocksize=983040 ! video/x-raw,width=1024,height=640,framerate=30/1,interlace-mode=progressive,colorimetry=bt601,format=NV12 ! v4l2h265enc ! filesink location=video.265

With the updated driver, single-frame encoding is handled fine. But again, this version of the driver still allocates compressed buffer sizes using the method from the patch I shared earlier in this thread. I just wanted to share this with you because this is the version of the driver that has been accepted upstream. It is believed to be more robust and reliable, and it will become part of our public kernel very soon.

I will stress to CnM that this is a high-priority issue so we can get a solution sooner. But it is complicated, given that not all information is available at the time of buffer allocation because of how the V4L2 API is designed. I have looked through a couple of other upstream drivers to see how they handle this, and I have not found it done this way anywhere. From what I have seen, a lot of drivers use the default sizeimage (whatever their application allocates).

For your use case, are you encoding at a fixed resolution and bitrate? If so, I recommend hard-coding sizeimage in your driver to fit your use case, if the default is allocating too much memory at this point. I know this thread has only discussed 1024x640, but I was not sure whether other resolutions need to be supported.

Since you are not using GStreamer as your host application, couldn't you just use your own algorithm to set sizeimage there, since you already know all the parameters you want to set? I am looking for examples of such an algorithm, but I have not yet found an expression that relates all of these parameters to an appropriate compressed buffer size. I will update this thread if I find one or if CnM suggests one that would work for their hardware.

Best,

Brandon